Researchers Develop Promising Method for Collecting Facial Expression Data Through Gamification

:::info Authors:

(1) Krist Shingjergji, Educational Sciences, Open University of the Netherlands, Heerlen, The Netherlands ([email protected]);

(2) Deniz Iren, Center for Actionable Research, Open University of the Netherlands, Heerlen, The Netherlands ([email protected]);

(3) Felix Bottger, Center for Actionable Research, Open University of the Netherlands, Heerlen, The Netherlands;

(4) Corrie Urlings, Educational Sciences, Open University of the Netherlands, Heerlen, The Netherlands;

(5) Roland Klemke, Educational Sciences, Open University of the Netherlands, Heerlen, The Netherlands.

:::

:::tip Editor's note: This is Part 6 of 6 of a study detailing the development of a gamified method of acquiring annotated facial emotion data. Read the rest below.

:::

Table of Links

- Abstract and I. Introduction

- II. Related Work

- III. Gamified Data Collection and Interpretable FER

- IV. Methodology

- V. Results

- VI. Discussion and Conclusion, Ethical Impact Statement and References

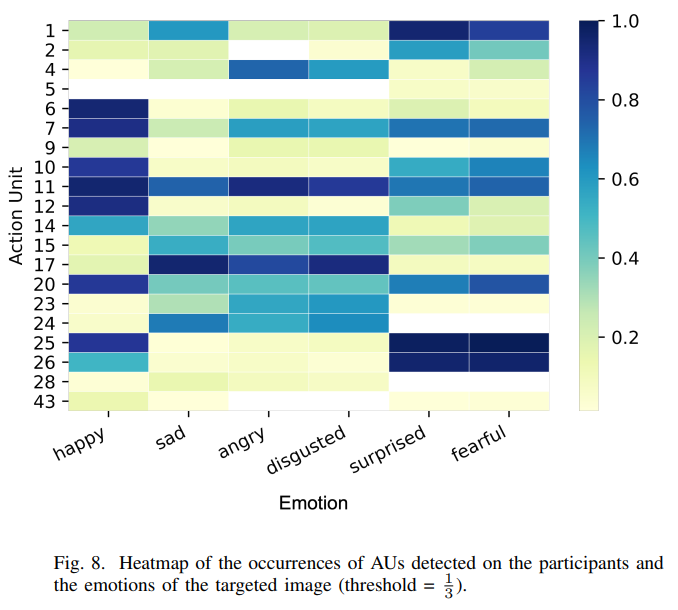

In this paper, we introduced a novel approach to explainable FER that makes three related contributions. First, by means of gamification, we developed a method for continuously collecting annotated facial expression data, which allows us to describe the facial expressions of six basic emotions as a distribution of AUs. Second, we proposed and evaluated an interpretable FER explainability method that uses AUs as features to describe the outcomes of FER models, i.e., facial emotion classes. The experimental observations indicate that the natural language explanations of facial expressions are interpretable by humans. Our quantitative and qualitative results highlight opportunities to improve the design of Facegame and the way we communicate the facial expression explanations. Finally, we observed that the players were able to improve their facial expression and perception skills by playing the game.
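To make the idea of describing an emotion as a distribution of AUs concrete, the following is a minimal sketch, not the authors' implementation. It assumes each accepted trial is stored as an emotion label plus the set of AUs detected as present; the record format, the function name `au_distribution`, and the sample AU sets are all hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical trial records: (emotion label, set of AUs detected as present).
# The AU sets below are illustrative values, not data from the study.
trials = [
    ("happiness", {"AU06", "AU12"}),
    ("happiness", {"AU12", "AU25"}),
    ("surprise", {"AU01", "AU02", "AU05", "AU26"}),
    ("surprise", {"AU01", "AU02", "AU26"}),
]


def au_distribution(records):
    """Return, per emotion, the fraction of trials in which each AU was present."""
    counts = defaultdict(Counter)  # emotion -> AU -> number of trials with that AU
    totals = Counter()             # emotion -> number of trials
    for emotion, aus in records:
        totals[emotion] += 1
        counts[emotion].update(aus)
    return {
        emotion: {au: n / totals[emotion] for au, n in sorted(au_counts.items())}
        for emotion, au_counts in counts.items()
    }


distribution = au_distribution(trials)
print(distribution["happiness"])
```

With more trials per emotion, each inner dictionary approximates how often an AU accompanies that emotion, which is the kind of per-emotion summary the first contribution refers to.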

Our results have potential theoretical and practical implications. Our method of acquiring nuanced facial expressions (i.e., distributions of AUs) that correlate with facial emotion classes provides a means to improve the performance of in-the-wild FER models. Such performance improvements may pave the way for novel practical approaches in many domains, such as online synchronous learning [46]. Moreover, the gamification approach offers a sustainable, continuous self-training process for FER models. Our explainability method, which uses AUs as intermediary features to describe facial emotions, provides a novel approach towards achieving interpretable, human-friendly explanations of FER models. In this study, we conducted our experiment and analyses on a limited sample. In the future, based on the observations and feedback collected during this study, we will continue this line of research and improve the ways of providing prescriptions to the players of Facegame by combining the natural language explanations with graphical methods. Finally, the quality of the data was assured by using a score threshold as a selection criterion. The score of each trial was calculated from the presence or absence of the AUs, regardless of their intensity, which risks including data with exaggerated facial expressions and introducing bias into the data set [47]. In future studies, we aim to include the intensity of the AUs as well as a higher threshold in the selection criteria to increase the accuracy and fairness of the collected data set.
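The limitation of presence-only scoring can be illustrated with a small sketch. It is not the scoring rule used in the study: it assumes, purely for illustration, that the presence-based score is a Jaccard overlap between the sets of active AUs, and that an intensity-aware variant could be a weighted Jaccard over intensities scaled to [0, 1]. The function names, the threshold value, and the sample intensities are hypothetical.

```python
def presence_score(target_aus, player_aus):
    """Jaccard overlap of two sets of active AUs (an assumed scoring rule)."""
    union = target_aus | player_aus
    if not union:
        return 1.0  # both faces neutral: treat as a perfect match
    return len(target_aus & player_aus) / len(union)


def intensity_aware_score(target, player):
    """Weighted Jaccard over AU intensities in [0, 1] (an assumed variant)."""
    aus = set(target) | set(player)
    overlap = sum(min(target.get(a, 0.0), player.get(a, 0.0)) for a in aus)
    total = sum(max(target.get(a, 0.0), player.get(a, 0.0)) for a in aus)
    return overlap / total if total else 1.0


THRESHOLD = 0.5  # hypothetical selection threshold

# Hypothetical AU intensities: the player activates the right AUs but exaggerates them.
target = {"AU06": 0.4, "AU12": 0.5}
exaggerated = {"AU06": 1.0, "AU12": 1.0}

binary = presence_score(set(target), set(exaggerated))
weighted = intensity_aware_score(target, exaggerated)

print(binary >= THRESHOLD, weighted >= THRESHOLD)
```

Under these assumptions the exaggerated attempt passes a presence-only check but falls below the same threshold once intensity is taken into account, which is the kind of filtering the proposed future selection criteria aim for.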

The goal of this study is limited to the evaluation of gamification as a data collection method. In the future, we aim to collect more data by crowdsourcing from a large number of players, which will allow a more in-depth analysis of the collected data set. In this setting, the player was asked to mimic the facial expression of a face displaying one emotion and was not triggered to experience that emotion. This approach has limitations, considering that posed and spontaneous facial expressions differ in the morphological and dynamic aspects of certain emotions [48], and that there can be demographic mismatches between the target image and the player. In future studies, we aim to increase the variance of the data set by including faces with spontaneous facial expressions among the target images and by increasing the demographic diversity of the target images.

ETHICAL IMPACT STATEMENT

In this study, the personal data collection was limited to facial images of the players. The collected data were securely stored on a server of the research institute that can only be accessed by the researchers of this study. The participants were informed about the experiment and the data collection, and they provided consent prior to taking part in the experiment.

REFERENCES

[1] K. R. Scherer, and P. H. Tannenbaum, “Emotional experiences in everyday life: A survey approach,” Motivation and Emotion, vol. 10, pp. 295–314, 1986.

[2] A.J. Fridlund, “Human facial expression: An evolutionary view,” Academic Press, March 2014.

[3] D. Matsumoto, K. Dacher, M. N. Shiota, M. O’Sullivan, and M. Frank, “Facial expressions of emotion,” The Guilford Press, 2008.

[4] R. W. Picard, “Affective computing,” MIT Press, July 2000.

[5] M. Maithri, U. Raghavendra, A. Gudigar, J. Samanth, D. B. Prabal, M. Murugappan, et al., “Automated Emotion Recognition: Current Trends and Future Perspectives,” Computer Methods and Programs in Biomedicine, pp. 106646, 2022.

[6] B. C. Ko, “A brief review of facial emotion recognition based on visual information,” Sensors, vol. 18, pp. 401, February 2018.

[7] S. Li, and W. Deng, “Deep facial expression recognition: A survey,” IEEE Transactions on Affective Computing, 2020.

[8] S. Li, and W. Deng, “Reliable crowdsourcing and deep locality-preserving learning for unconstrained facial expression recognition,” IEEE Transactions on Image Processing, vol. 28, pp. 356–370, 2018.

[9] L.H. Gilpin, D. Bau, B.Z. Yuan, A. Bajwa, M. Specter, and L. Kagal, “Explaining explanations: An overview of interpretability of machine learning,” 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA), pp. 80–89, October 2018.

[10] P. Kumar, V. Kaushik, and B. Raman, “Towards the Explainability of Multimodal Speech Emotion Recognition,” Interspeech, pp. 1748–1752, 2021.

[11] E. Ghaleb, A. Mertens, S. Asteriadis, and G. Weiss, “Skeleton-Based Explainable Bodily Expressed Emotion Recognition Through Graph Convolutional Networks,” 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), pp. 1–8, 2021.

[12] J.V. Jeyakumar, J. Noor, Y.H. Cheng, L. Garcia, and M. Srivastava, “How can I explain this to you? An empirical study of deep neural network explanation methods,” Advances in Neural Information Processing Systems, vol. 33, pp. 4211–4222, 2020.

[13] P. Ekman, and W. Friesen, “Facial action coding system,” Environmental Psychology & Nonverbal Behavior, 1978.

[14] P. Ekman, “Facial expression and emotion,” American Psychologist, vol. 48, pp. 384, April 1993.

[15] R. Reisenzein, M. Studtmann, and G. Horstmann, “Coherence between emotion and facial expression: Evidence from laboratory experiments,” Emotion Review, vol. 5, pp. 16–23, January 2013.

[16] M. Wegrzyn, M. Vogt, B. Kireclioglu, J. Schneider, and J. Kissler, “Mapping the emotional face. How individual face parts contribute to successful emotion recognition,” PLoS ONE, vol. 12, pp. 11–12, May 2017.

[17] N. Borges, L. Lindblom, B. Clarke, A. Gander, and R. Lowe, “Classifying confusion: autodetection of communicative misunderstandings using facial action units,” 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), pp. 401–406, September 2019.

[18] P. Lucey, J. F. Cohn, T. Kanade, J. Saragih, Z. Ambadar, and I. Matthews, “The extended Cohn-Kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression,” 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, pp. 94–101, June 2010.

[19] S.M. Mavadati, M.H. Mahoor, K. Bartlett, P. Trinh, and J.F. Cohn, “DISFA: A spontaneous facial action intensity database,” IEEE Transactions on Affective Computing, vol. 4, pp. 151–160, March 2013.

[20] T. Baltrušaitis, M. Mahmoud, and P. Robinson, “Cross-dataset learning and person-specific normalisation for automatic action unit detection,” 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), vol. 6, pp. 1–6, May 2015.

[21] N. Dalal, and B. Triggs, “Histograms of oriented gradients for human detection,” 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), vol. 1, pp. 886–893, June 2005.

[22] O. Çeliktutan, S. Ulukaya, and B. Sankur, “A comparative study of face landmarking techniques,” EURASIP Journal on Image and Video Processing, pp. 1–27, December 2013.

[23] Z. Shao, Z. Liu, J. Cai, Y. Wu, and L. Ma, “Facial action unit detection using attention and relation learning,” IEEE Transactions on Affective Computing, October 2019.

[24] G.M. Jacob, and B. Stenger, “Facial action unit detection with transformers,” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7680–7689, 2021.

[25] Z. Shao, Z. Liu, J. Cai, Y. Wu, and L. Ma, “JAA-Net: joint facial action unit detection and face alignment via adaptive attention,” International Journal of Computer Vision, vol. 129, pp. 321–340, February 2021.

[26] K. Zhao, W.S. Chu, and H. Zhang, “Deep region and multi-label learning for facial action unit detection,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3391–3399, 2016.

[27] A. Rosenfeld, and A. Richardson, “Explainability in human–agent systems,” Autonomous Agents and Multi-Agent Systems, vol. 33, pp. 673–705, November 2019.

[28] R. Guidotti, A. Monreale, S. Ruggieri, F. Turini, F. Giannotti, and D. Pedreschi, “A survey of methods for explaining black box models,” ACM Computing Surveys (CSUR), vol. 51, pp. 1–42, August 2018.

[29] A. Rosenfeld, “Better metrics for evaluating explainable artificial intelligence,” Proceedings of the 20th International Conference on Autonomous Agents and Multiagent Systems, pp. 45–55, May 2021.

[30] J. Howe, “The rise of crowdsourcing,” Wired magazine, vol. 6, pp. 1–4, June 2006.

[31] D. Iren, and S. Bilgen, “Cost of quality in crowdsourcing,” Human Computation, vol. 1, December 2014.

[32] A.J. Quinn, and B.B. Bederson, “Human computation: a survey and taxonomy of a growing field,” Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 1403–1412, May 2011.

[33] M. Hosseini, A. Shahri, K. Phalp, J. Taylor, and R. Ali, “Crowdsourcing: A taxonomy and systematic mapping study,” Computer Science Review, vol. 17, pp. 43–69, August 2015.

[34] S. Deterding, D. Dixon, R. Khaled, and L. Nacke, “From game design elements to gamefulness: defining ‘gamification’,” Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments, pp. 9–15, September 2011.

[35] A.F. Aparicio, F.L. Vela, J.L. Sánchez, and J.L. Montes, “Analysis and application of gamification,” Proceedings of the 13th International Conference on Interacción Persona-Ordenador, pp. 1–2, October 2012.

[36] V. Gurav, M. Parkar, and P. Kharwar, “Accessible and Ethical Data Annotation with the Application of Gamification,” International Conference on Recent Developments in Science, Engineering and Technology, pp. 68–78, November 2019.

[37] J.J. López-Jiménez, J.L. Fernández-Alemán, L.L. González, O.G. Sequeros, B.M. Valle, J.A. García-Berna, et al., “Taking the pulse of a classroom with a gamified audience response system,” Computer Methods and Programs in Biomedicine, vol. 213, pp. 106459, January 2022.

[38] A.I. Wang, and R. Tahir, “The effect of using Kahoot! for learning–A literature review,” Computers & Education, vol. 149, pp. 103818, May 2020.

[39] P. Tobien, L. Lischke, M. Hirsch, R. Krüger, P. Lukowicz, and A. Schmidt, “Engaging people to participate in data collection,” Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, pp. 209–212, September 2016.

[40] J.H. Cheong, T. Xie, S. Byrne, and L.J. Chang, “Py-feat: Python facial expression analysis toolbox,” arXiv preprint arXiv:2104.03509, April 2021.

[41] D. E. King, “Dlib-ml: A machine learning toolkit,” The Journal of Machine Learning Research, vol. 10, pp. 1755–1758, 2009.

[42] I.J. Goodfellow, D. Erhan, P. L. Carrier, A. Courville, M. Mirza, B. Hamner, et al., “Challenges in representation learning: A report on three machine learning contests,” International Conference on Neural Information Processing, Springer, Berlin, pp. 117–124, November 2013.

[43] S. Li, W. Deng, and J. Du, “Reliable crowdsourcing and deep locality-preserving learning for expression recognition in the wild,” IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2852–2861, 2017.

[44] S. Li, and W. Deng, “Reliable Crowdsourcing and Deep Locality-Preserving Learning for Unconstrained Facial Expression Recognition,” IEEE Transactions on Image Processing, vol. 28, pp. 356–370, 2019.

[45] M. Bishay, A. Ghoneim, M. Ashraf, and M. Mavadati, “Which CNNs and Training Settings to Choose for Action Unit Detection? A Study Based on a Large-Scale Dataset,” 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), pp. 1–5, 2021.

[46] K. Shingjergji, D. Iren, C. Urlings, and R. Klemke, “Sense the classroom: AI-supported synchronous online education for a resilient new normal,” EC-TEL (Doctoral Consortium), pp. 64–70, January 2021.

[47] M. Mäkäräinen, J. Kätsyri, and T. Takala, “Exaggerating facial expressions: A way to intensify emotion or a way to the uncanny valley?,” Cognitive Computation, vol. 6, pp. 708–721, December 2014.

[48] S. Namba, S. Makihara, R.S. Kabir, M. Miyatani, and T. Nakao, “Spontaneous Facial Expressions Are Different from Posed Facial Expressions: Morphological Properties and Dynamic Sequences,” Current Psychology, vol. 36, pp. 593–605, 2017.

:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::
