
Here's How the Pros Choose the Right Load Balancer

In today’s digital landscape, the demand for high availability and performance is more crucial than ever. Whether you’re running a small web application or managing a complex microservices architecture, ensuring that traffic is efficiently routed and balanced across your infrastructure is vital to providing a seamless user experience. This is where load balancers come into play.

Load balancers are a foundational component in modern systems, distributing network or application traffic across multiple servers to ensure no single server bears too much load. This not only improves performance but also ensures high availability by redirecting traffic in case of server failures.

In this article, we will dive deep into the concepts, features, and best practices surrounding load balancers. We’ll cover everything from the various load balancing algorithms to optimizing for high availability and performance, so that by the end of this guide you’ll have the knowledge to use load balancers effectively in your own infrastructure.

What Are Load Balancers?

A load balancer is a crucial component in distributed systems, acting as an intermediary that distributes incoming traffic across multiple servers or backend services. By doing so, it ensures no single server becomes overwhelmed, enhancing both system performance and reliability. Load balancers are particularly valuable in environments where uptime and responsiveness are paramount.

There are different types of load balancers based on where they operate within the OSI model:

- Layer 4 Load Balancers: These operate at the transport layer (TCP/UDP) and make routing decisions based on IP addresses and port numbers. They are fast and efficient but offer less granularity in traffic management.

- Layer 7 Load Balancers: These operate at the application layer and can inspect HTTP headers, URLs, and cookies to make more informed routing decisions. This allows for more sophisticated traffic distribution but at the cost of higher processing overhead.

Load balancers can be implemented as hardware devices, but more commonly today, they exist as software solutions that can run in the cloud or on-premises. They are used in various scenarios such as distributing web traffic, managing microservices, and handling APIs. By balancing the load, these systems prevent downtime and ensure a smoother user experience.

Key Features of Load Balancers

Load balancers are more than just traffic directors; they come equipped with a range of features that enhance both system performance and availability. Understanding these key features is essential to fully leveraging the potential of load balancing in your infrastructure.

1. Load Distribution Strategies

Load balancers use various algorithms to distribute incoming traffic across available servers. Some common strategies include:

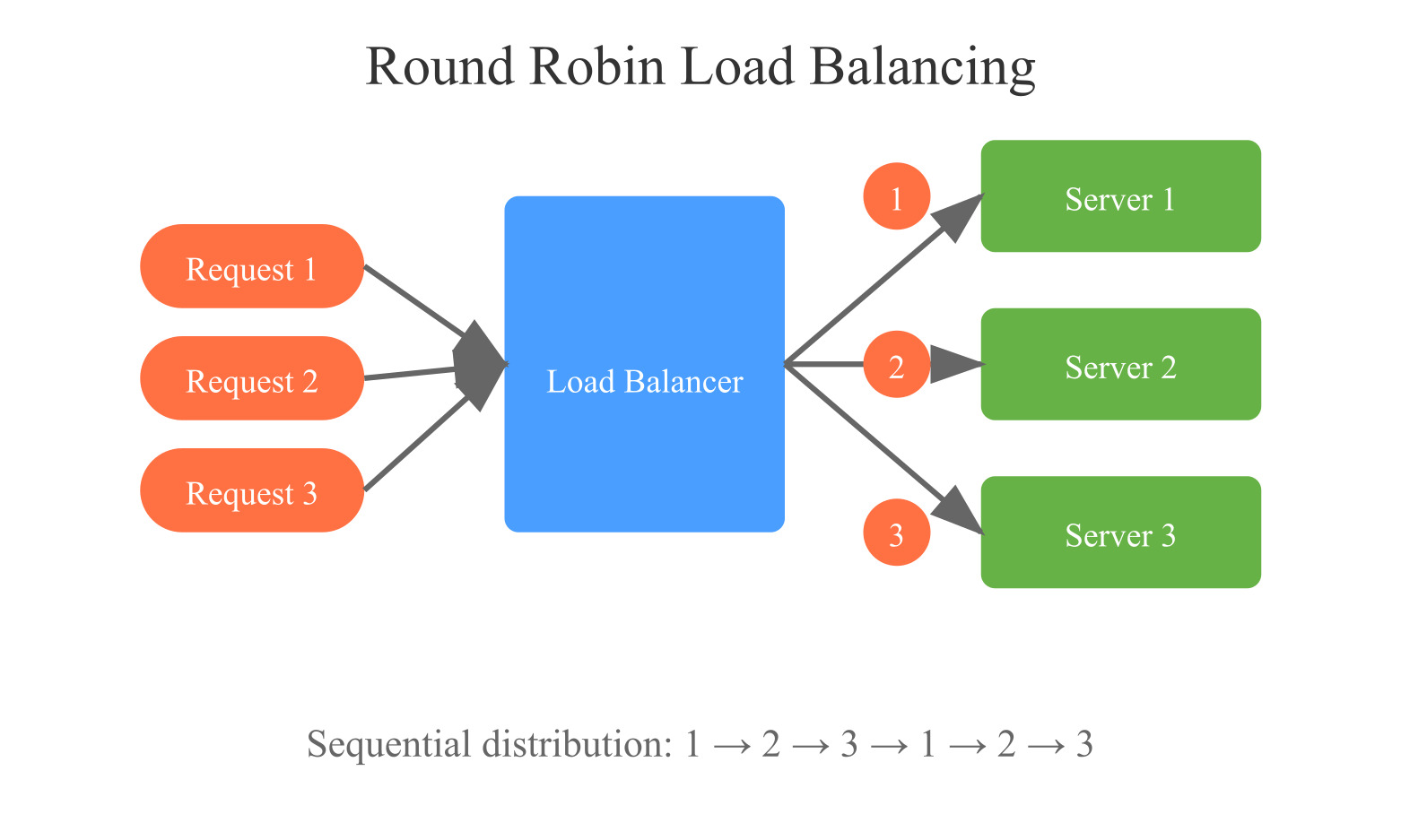

- Round Robin: Distributes requests in a simple cycle (Server 1 → 2 → 3 → 1).

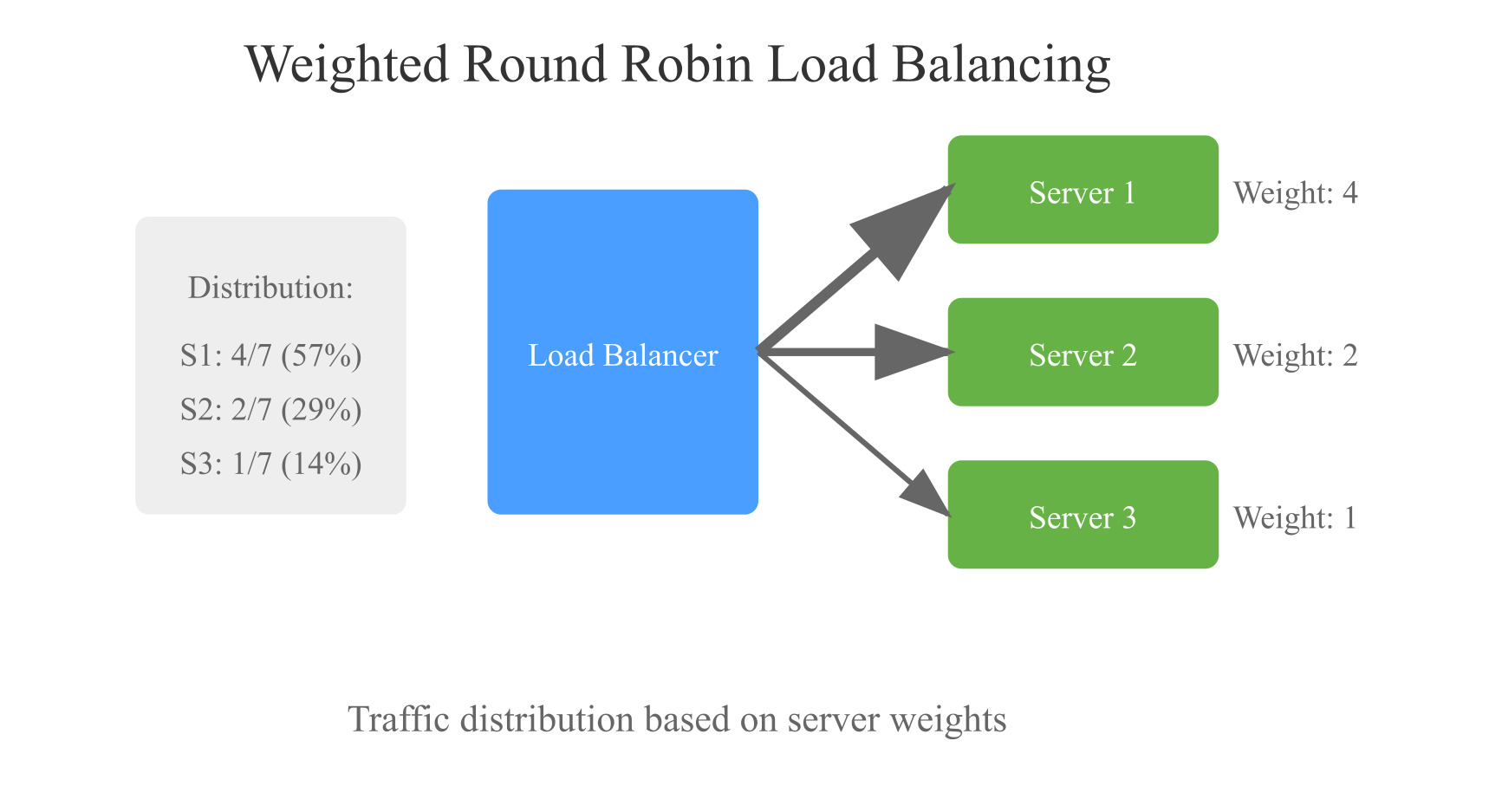

- Weighted Round Robin: Servers get traffic based on capacity. Stronger servers receive more requests.

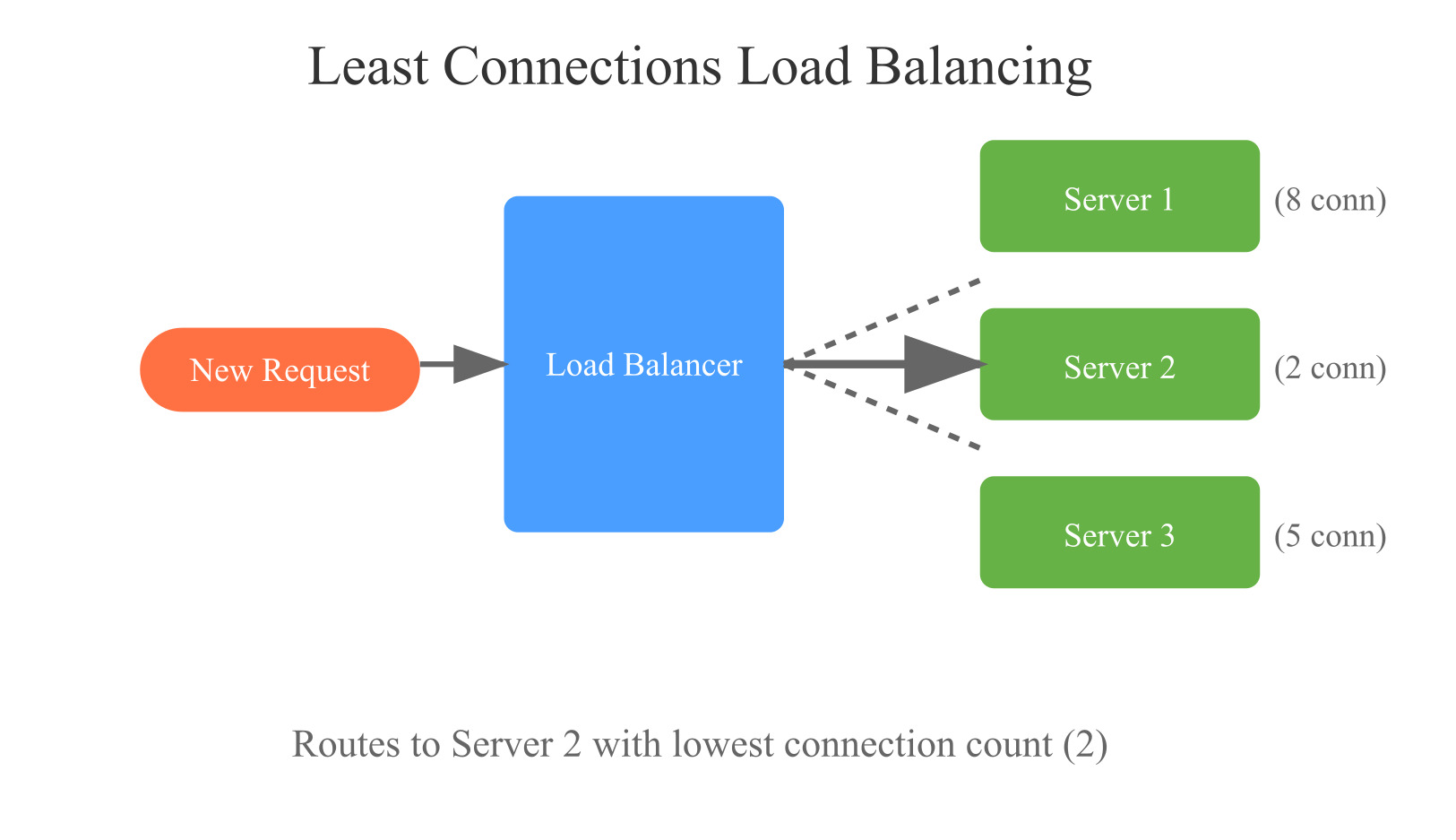

- Least Connections: Sends traffic to the server with the fewest active connections.

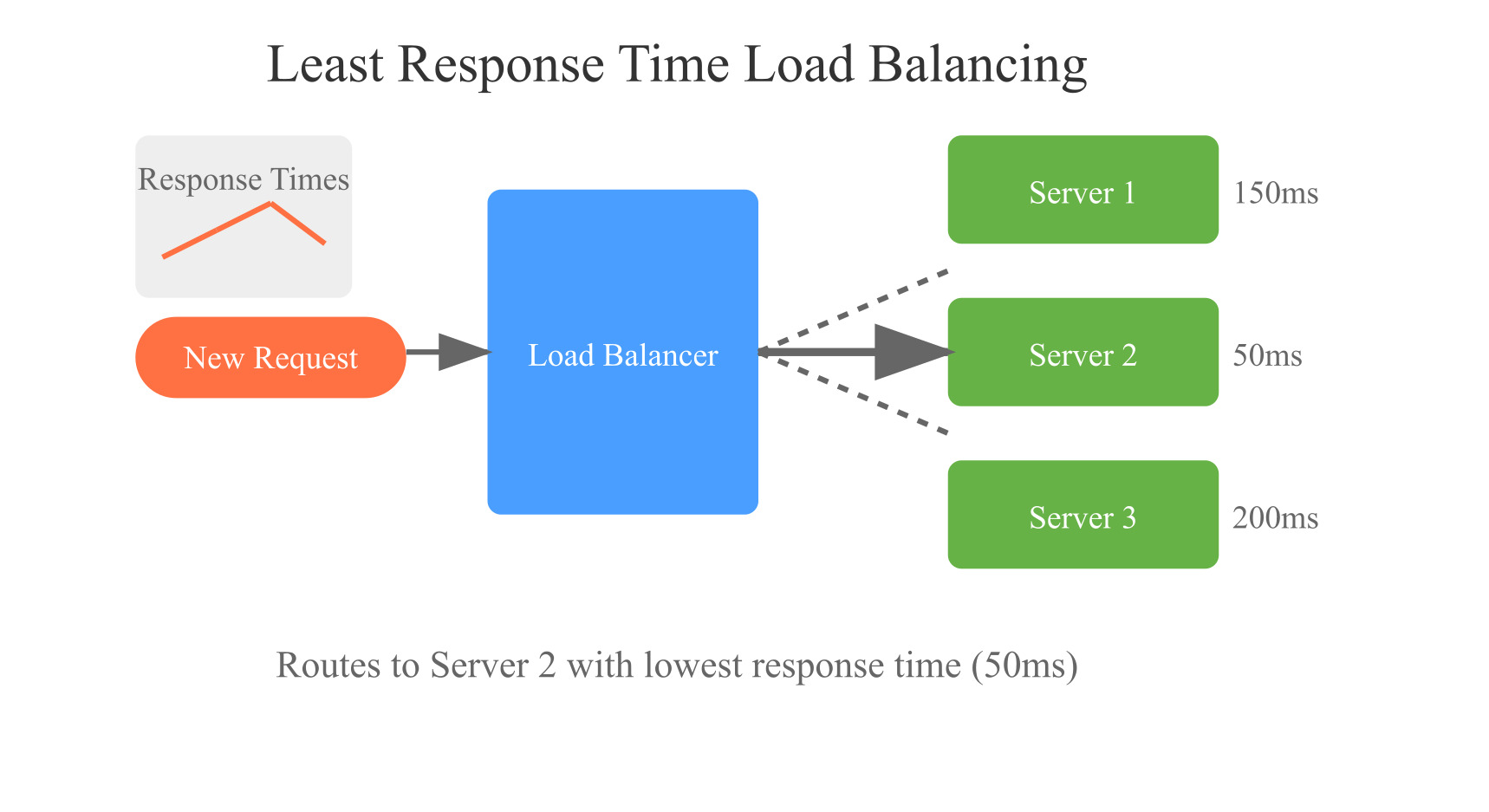

- Least Response Time: Routes each request to the fastest-responding server.

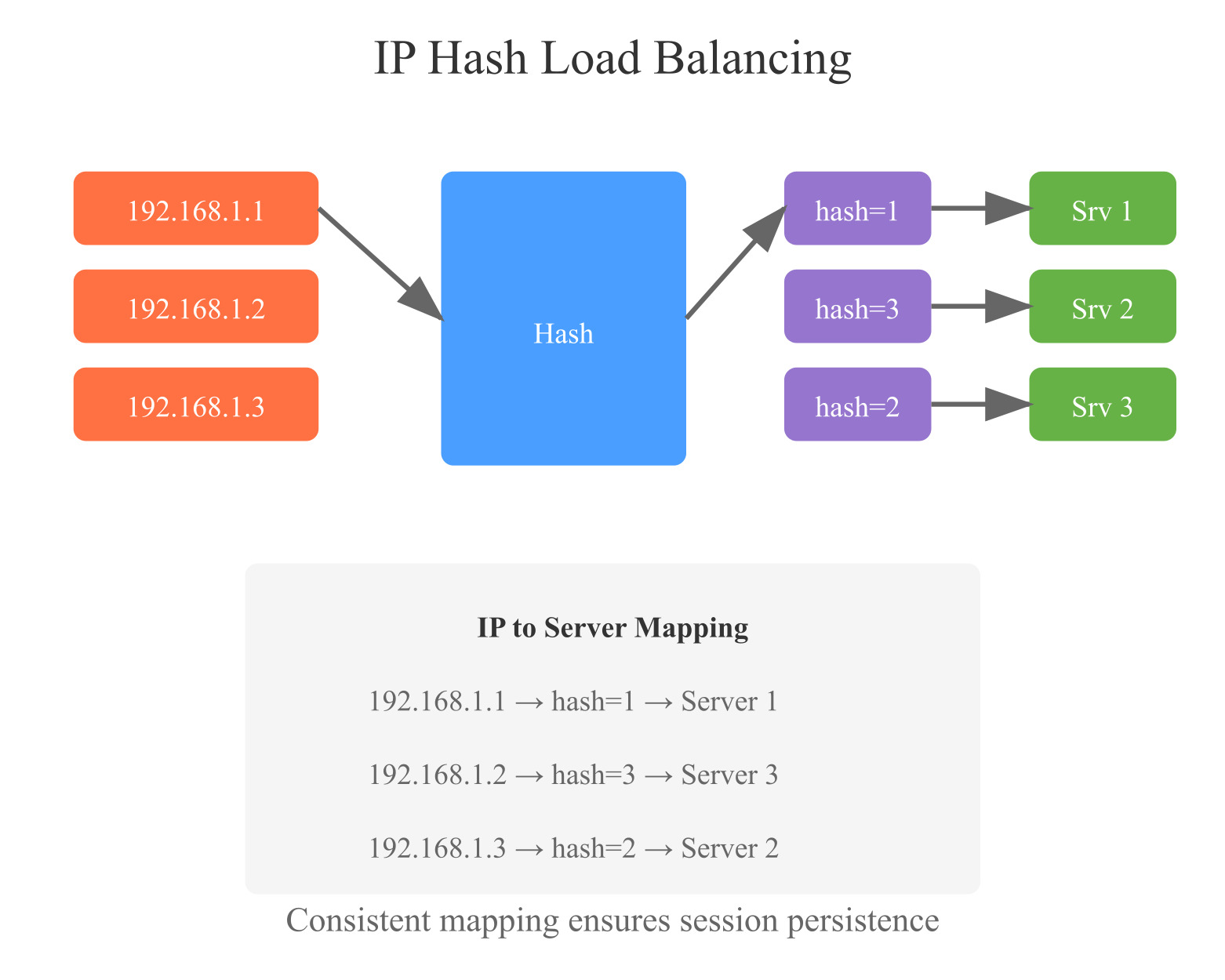

- IP Hash: Maps client IPs to specific servers, maintaining session persistence.

- Resource-Based: Distributes based on server metrics (CPU, memory).

- Random: Randomly picks any available server.

2. Health Checks

One of the primary functions of a load balancer is to ensure traffic is routed only to healthy servers. It performs regular health checks on the backend servers, monitoring factors like CPU load, response times, or even specific application metrics. If a server fails a health check, the load balancer redirects traffic to the remaining healthy servers, keeping the service available without downtime.
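To make this concrete, here is a minimal Python sketch of how a load balancer might turn periodic probe results into an up/down state. It assumes a `probe` callable that returns `True` when a server passes its check (in practice, often an HTTP request to a health endpoint). The `rise`/`fall` thresholds are hypothetical: they keep one slow response from ejecting a server, and require a recovering server to pass several checks before it receives traffic again.

```python
from typing import Callable, Dict, Iterable, List


class HealthChecker:
    """Marks servers up or down based on consecutive health-probe results."""

    def __init__(self, servers: Iterable[str], probe: Callable[[str], bool],
                 rise: int = 2, fall: int = 3) -> None:
        self.servers: List[str] = list(servers)
        self.probe = probe  # returns True if the server passed its check
        self.rise = rise    # consecutive passes needed to mark a down server up
        self.fall = fall    # consecutive failures needed to mark an up server down
        self.healthy: Dict[str, bool] = {s: True for s in self.servers}
        # Count of consecutive results that disagree with the current state.
        self._streak: Dict[str, int] = {s: 0 for s in self.servers}

    def run_once(self) -> None:
        """Probe every server once and flip its state if a threshold is reached."""
        for server in self.servers:
            ok = self.probe(server)
            if ok == self.healthy[server]:
                self._streak[server] = 0  # result agrees with the current state
                continue
            self._streak[server] += 1
            threshold = self.rise if ok else self.fall
            if self._streak[server] >= threshold:
                self.healthy[server] = ok
                self._streak[server] = 0

    def available(self) -> List[str]:
        """Servers that should currently receive traffic."""
        return [s for s in self.servers if self.healthy[s]]


down = {"app2"}
checker = HealthChecker(["app1", "app2", "app3"], probe=lambda s: s not in down)
for _ in range(3):
    checker.run_once()
print(checker.available())  # ['app1', 'app3']
```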

3. Session Persistence (Sticky Sessions)

In certain scenarios, it is essential to maintain user sessions on the same server for consistency, such as during a shopping cart transaction. Load balancers offer session persistence, also known as sticky sessions, where requests from a particular user are consistently routed to the same server based on cookies or other identifiers.
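Here is a minimal sketch of cookie-based persistence. The cookie name `lb_server` and the `route` interface are hypothetical. The first response pins the client to a server by setting a cookie, later requests honor that cookie, and the client is re-pinned only if its server is no longer healthy.

```python
import itertools
from typing import Dict, List, Optional, Tuple

COOKIE_NAME = "lb_server"  # hypothetical cookie used to remember the chosen server


class StickyBalancer:
    def __init__(self, servers: List[str]) -> None:
        self.servers = servers
        self._rr = itertools.cycle(servers)  # fallback choice for new or re-pinned clients

    def route(self, cookies: Dict[str, str],
              healthy: List[str]) -> Tuple[str, Optional[str]]:
        """Return (server, cookie_value_to_set); the cookie is None if nothing changes."""
        pinned = cookies.get(COOKIE_NAME)
        if pinned in healthy:
            return pinned, None  # keep the user on the same server
        # No cookie yet, or the pinned server is down: choose a new server and pin it.
        for _ in range(len(self.servers)):
            candidate = next(self._rr)
            if candidate in healthy:
                return candidate, candidate
        raise RuntimeError("no healthy servers available")


lb = StickyBalancer(["app1", "app2", "app3"])
print(lb.route({}, ["app1", "app2", "app3"]))                     # ('app1', 'app1')
print(lb.route({"lb_server": "app1"}, ["app1", "app2", "app3"]))  # ('app1', None)
print(lb.route({"lb_server": "app1"}, ["app2", "app3"]))          # ('app2', 'app2')
```

Because the mapping lives in the client’s cookie rather than in the balancer’s memory, any load balancer replica can honor it.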

4. SSL Termination

Load balancers can handle SSL/TLS encryption and decryption (TLS is the modern successor to SSL, though the older name is still widely used), offloading this task from the backend servers. This process, known as SSL termination, reduces the processing overhead on application servers and improves performance, especially for HTTPS traffic.

These features collectively make load balancers highly effective in managing traffic, reducing server overload, and ensuring that systems are robust, resilient, and perform optimally under heavy load.

Load Balancer Algorithms

Load balancers rely on various algorithms to determine how traffic is distributed across multiple servers. Choosing the right algorithm can significantly impact the performance and availability of your system. Below are some of the most commonly used load balancing algorithms, each suited to different scenarios.

1. Round Robin

How it works: Requests are sent to each server in turn, cycling back to the first server after the last.

Use case: Ideal for environments where servers have similar specifications and loads are relatively consistent. It’s simple and easy to implement but does not account for servers with varying capacities.
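Round robin needs nothing more than a counter. A minimal sketch, assuming a fixed list of server names and a single-threaded caller (a real proxy would protect the counter with a lock or an atomic increment):

```python
from typing import List


class RoundRobin:
    """Hands out servers in a fixed cyclic order (not thread-safe as written)."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = servers
        self._next = 0  # index of the server to use for the next request

    def pick(self) -> str:
        server = self.servers[self._next]
        self._next = (self._next + 1) % len(self.servers)
        return server


rr = RoundRobin(["s1", "s2", "s3"])
print([rr.pick() for _ in range(4)])  # ['s1', 's2', 's3', 's1']
```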

2. Least Connections

How it works: Requests are routed to the server with the fewest active connections at the time.

Use case: Best suited for situations where traffic is uneven, or some requests require longer processing times. It helps balance traffic based on real-time server load.
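A minimal sketch, assuming the proxy calls `acquire()` when a request starts and `release()` when it finishes (both method names are hypothetical). Ties go to whichever server appears first in the list.

```python
from typing import Dict, List


class LeastConnections:
    """Routes each new request to the server with the fewest in-flight requests."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def acquire(self) -> str:
        # min() returns the first server with the lowest count, so ties follow list order.
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a request to `server` completes."""
        self.active[server] -= 1


lc = LeastConnections(["s1", "s2"])
first = lc.acquire()   # 's1'
second = lc.acquire()  # 's2'
lc.release(second)     # s2's request finishes quickly
print(lc.acquire())    # 's2': it now has 0 active connections versus 1 on s1
```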

3. Weighted Round Robin

How it works: Similar to round robin, but servers are assigned weights based on their processing capabilities. Servers with higher weights receive more requests.

Use case: Useful in environments with servers of different capacities, where you want to direct more traffic to more powerful servers.
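A naive implementation would send a weight-5 server five requests in a row. One common refinement is smooth weighted round robin, which interleaves servers while still honoring the weights (it is the variant Nginx’s upstream round robin is generally described as using). Below is a minimal sketch with hypothetical server names and weights.

```python
from typing import Dict


class SmoothWeightedRoundRobin:
    def __init__(self, weights: Dict[str, int]) -> None:
        self.weights = dict(weights)                 # server -> relative capacity
        self.current = {s: 0 for s in self.weights}  # running "credit" per server
        self.total = sum(self.weights.values())

    def pick(self) -> str:
        # Every server earns credit equal to its weight...
        for server, weight in self.weights.items():
            self.current[server] += weight
        # ...then the server with the most credit wins and pays back the total weight.
        best = max(self.current, key=lambda s: self.current[s])
        self.current[best] -= self.total
        return best


wrr = SmoothWeightedRoundRobin({"big": 5, "mid": 1, "small": 1})
print([wrr.pick() for _ in range(7)])
# ['big', 'big', 'mid', 'big', 'small', 'big', 'big']
```

Over every full cycle of seven picks, each server is chosen exactly as often as its weight, but the weight-5 server never gets more than two requests back to back.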

4. IP Hash

How it works: The client’s IP address is hashed to determine which server will handle the request. As long as the server pool stays the same, requests from the same client are always sent to the same server.

Use case: Effective for session persistence without relying on cookie-based sticky sessions, ensuring the same server handles all requests from a particular client.
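A minimal sketch of IP hashing. It uses SHA-256 rather than Python’s built-in `hash()`, because `hash()` on strings is randomized per process, so two load balancer instances (or one instance after a restart) would disagree. Note the trade-off of plain modulo hashing: adding or removing a server changes `len(servers)` and remaps most clients, which is why many systems use consistent hashing instead.

```python
import hashlib
from typing import List


def pick_by_ip(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server, deterministically across processes and restarts."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


pool = ["app1", "app2", "app3"]
# The same client keeps landing on the same server while the pool is unchanged.
print(pick_by_ip("203.0.113.7", pool), pick_by_ip("203.0.113.7", pool))
```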

5. Least Response Time

How it works: Requests are routed to the server with the lowest average response time.

Use case: Ideal for applications where latency is a key concern, ensuring users are directed to the fastest-responding server.
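How the “average” is computed varies between products. This minimal sketch assumes an exponentially weighted moving average (EWMA), so recent measurements count more than old ones; the smoothing factor `alpha` is a hypothetical default. Servers with no measurements yet are tried first so that every server gets sampled.

```python
from typing import Dict, List, Optional


class LeastResponseTime:
    def __init__(self, servers: List[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight of the newest sample in the moving average
        self.avg_ms: Dict[str, Optional[float]] = {s: None for s in servers}

    def pick(self) -> str:
        # Unmeasured servers sort first (False < True); otherwise lowest average wins.
        return min(self.avg_ms,
                   key=lambda s: (self.avg_ms[s] is not None, self.avg_ms[s] or 0.0))

    def record(self, server: str, elapsed_ms: float) -> None:
        """Fold a completed request's latency into the server's moving average."""
        old = self.avg_ms[server]
        self.avg_ms[server] = (elapsed_ms if old is None
                               else self.alpha * elapsed_ms + (1 - self.alpha) * old)


lrt = LeastResponseTime(["s1", "s2"])
lrt.record(lrt.pick(), 50.0)  # s1 measured at 50 ms
lrt.record(lrt.pick(), 20.0)  # s2 measured at 20 ms
print(lrt.pick())             # 's2'
```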

6. Random

How it works: Traffic is randomly assigned to any server in the pool.

Use case: While simple, this method is rarely used as it doesn’t account for server load or capacity, leading to potential performance issues.

Each algorithm has its strengths and weaknesses, and selecting the best one depends on your infrastructure, server specifications, and the type of workload your application handles. In many cases, a combination of algorithms may be used to optimize both performance and reliability.

Load Balancers and High Availability

High availability is one of the primary reasons organizations implement load balancers. Ensuring that applications and services remain available despite traffic surges or server failures is critical in modern infrastructure. Here’s how load balancers contribute to high availability:

1. Eliminating Single Points of Failure

- Load balancers distribute traffic across multiple backend servers, ensuring that no single server is responsible for all incoming requests. If one server fails, the load balancer automatically redirects traffic to healthy servers. This redundancy prevents system outages due to server failure.

- For even greater resilience, some infrastructures implement multiple load balancers to avoid a single point of failure at the load balancer level. This often involves using active-active or active-passive load balancer configurations.

2. Auto-Scaling Integration

- Modern cloud environments often integrate load balancers with auto-scaling systems. As traffic increases, new server instances can be automatically provisioned and added to the pool of servers behind the load balancer.

- The load balancer dynamically adjusts, routing traffic to new instances as they come online and removing them when they are no longer needed. This elasticity allows services to handle unexpected traffic spikes without impacting availability.
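The scaling decision itself is often a proportional calculation. Kubernetes’ Horizontal Pod Autoscaler, for example, documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetric / desiredMetric). Here is a minimal sketch of that rule with hypothetical minimum and maximum bounds; real autoscalers layer tolerances, cooldowns, and warm-up periods on top.

```python
import math


def desired_instances(current: int, metric: float, target: float,
                      min_size: int = 1, max_size: int = 20) -> int:
    """Proportional scaling: move the per-instance metric back toward `target`."""
    if current <= 0:
        return min_size  # nothing running yet: start at the floor
    desired = math.ceil(current * metric / target)
    return max(min_size, min(max_size, desired))  # clamp to the allowed range


# 4 instances averaging 90% CPU against a 60% target: scale out to 6.
print(desired_instances(4, metric=90.0, target=60.0))  # 6
```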

3. Geographic Load Balancing

- For applications with a global user base, geographic load balancing ensures that users are connected to the nearest data center or server, minimizing latency and providing a faster, more reliable experience.

- In case of a data center failure or network outage in one region, the load balancer can automatically route traffic to another region, maintaining service availability for users.
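A minimal sketch of “nearest healthy region” routing using great-circle (haversine) distance. The region names and coordinates are hypothetical, and the sketch assumes the client’s approximate location is already known (for example, from IP geolocation). Production systems often rely on DNS-based routing, anycast, or measured latency rather than raw distance.

```python
import math
from typing import Dict, Iterable, Tuple

# Hypothetical regions with approximate (latitude, longitude) in degrees.
REGIONS: Dict[str, Tuple[float, float]] = {
    "us-east": (39.0, -77.5),       # northern Virginia
    "eu-west": (53.3, -6.3),        # Dublin
    "ap-southeast": (1.35, 103.8),  # Singapore
}


def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_region(client: Tuple[float, float], healthy: Iterable[str]) -> str:
    """Closest healthy region; skipping unhealthy regions provides failover."""
    healthy_set = set(healthy)
    candidates = [r for r in REGIONS if r in healthy_set]
    if not candidates:
        raise RuntimeError("no healthy regions")
    return min(candidates, key=lambda r: haversine_km(client, REGIONS[r]))


paris = (48.85, 2.35)
print(nearest_region(paris, REGIONS))                      # eu-west
print(nearest_region(paris, ["us-east", "ap-southeast"]))  # us-east (eu-west is down)
```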

4. Health Monitoring and Failover

- Load balancers continuously perform health checks on the backend servers. When a server becomes unresponsive or performs poorly, the load balancer marks it as unhealthy and stops sending traffic to it.

- In multi-data-center setups, load balancers can even handle failover between entire data centers, ensuring that your service remains available even during large-scale outages.

By intelligently routing traffic and enabling redundancy at multiple levels, load balancers play a crucial role in ensuring high availability. They provide the infrastructure necessary for 24/7 uptime, even in the face of server failures, traffic surges, or regional outages.

Load Balancers and Performance Optimization

In addition to ensuring high availability, load balancers are essential for optimizing the performance of your infrastructure. By efficiently distributing traffic, reducing latency, and offloading certain tasks from backend servers, load balancers play a key role in enhancing user experience.

1. Reducing Latency

- Load balancers distribute requests to the server that can respond the fastest, reducing response times for users. Algorithms such as Least Response Time ensure that users are connected to the server that can process their requests the quickest.

- Geographic load balancing also minimizes latency by routing users to the nearest available data center or server.

2. SSL/TLS Offloading

- SSL/TLS encryption and decryption can be resource-intensive for backend servers. By offloading this task to the load balancer (a process known as SSL termination), backend servers are freed up to focus on application logic and processing user requests.

- This improves the overall speed and efficiency of the system, particularly for applications with high volumes of HTTPS traffic.

3. Caching and Compression

- Load balancers can integrate with caching mechanisms to store frequently requested content closer to the user. By serving cached content directly from the load balancer, you can reduce the load on backend servers and deliver content more quickly.

- Similarly, load balancers can apply compression techniques to reduce the size of transmitted data, leading to faster delivery times and improved performance for users with slower connections.

4. Traffic Prioritization

- In scenarios where different types of traffic have different performance requirements, load balancers can prioritize traffic. For example, real-time services such as video conferencing or gaming can be prioritized over standard web traffic.

- This ensures that critical services remain responsive, even during times of high load or network congestion.
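A minimal sketch of strict-priority dispatch using Python’s `heapq`. The traffic classes and their ranks are hypothetical, and a sequence counter keeps requests of the same class in arrival order. Strict priority can starve low-priority traffic under sustained load, which is why weighted fair queuing is a common alternative.

```python
import heapq
import itertools
from typing import Dict, List, Tuple

# Hypothetical traffic classes; a lower rank is served first.
PRIORITY: Dict[str, int] = {"realtime": 0, "web": 1, "batch": 2}


class PriorityDispatcher:
    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._seq = itertools.count()  # tie-breaker: FIFO order within a class

    def submit(self, traffic_class: str, request_id: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._seq), request_id))

    def next_request(self) -> str:
        """Pop the most urgent pending request (raises IndexError if none)."""
        return heapq.heappop(self._heap)[2]


d = PriorityDispatcher()
d.submit("web", "page-1")
d.submit("batch", "report-1")
d.submit("realtime", "video-frame-1")
d.submit("web", "page-2")
print([d.next_request() for _ in range(4)])
# ['video-frame-1', 'page-1', 'page-2', 'report-1']
```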

5. Connection Pooling

- Load balancers can pool connections to backend servers, reducing the overhead of opening and closing connections for every client request. This improves performance, especially in high-traffic environments where connection management can become a bottleneck.
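A minimal, thread-safe sketch of a connection pool. The `factory` callable (whatever opens a backend connection) is a hypothetical stand-in. Connections are returned to the pool instead of being closed; when all `max_size` connections are in use, callers wait up to `timeout` seconds and get `queue.Empty` if none frees up. A production pool would also detect stale connections and discard ones that hit errors.

```python
import queue
import threading
from contextlib import contextmanager
from typing import Any, Callable, Iterator, Optional


class ConnectionPool:
    """Reuses up to `max_size` backend connections instead of opening one per request."""

    def __init__(self, factory: Callable[[], Any], max_size: int) -> None:
        self._factory = factory       # opens a new backend connection (hypothetical)
        self._idle = queue.LifoQueue()  # most recently returned connection is reused first
        self._max_size = max_size
        self._created = 0
        self._lock = threading.Lock()

    def _checkout(self, timeout: Optional[float]) -> Any:
        try:
            return self._idle.get_nowait()  # fast path: reuse an idle connection
        except queue.Empty:
            pass
        with self._lock:
            can_create = self._created < self._max_size
            if can_create:
                self._created += 1
        if can_create:
            try:
                return self._factory()
            except Exception:
                with self._lock:
                    self._created -= 1  # creation failed: free the slot
                raise
        # Pool exhausted: wait for another request to hand a connection back.
        return self._idle.get(timeout=timeout)

    @contextmanager
    def connection(self, timeout: Optional[float] = None) -> Iterator[Any]:
        conn = self._checkout(timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return it to the pool rather than closing it


opened = []


def open_backend_connection() -> object:  # stand-in for a real socket/TLS connection
    opened.append(object())
    return opened[-1]


pool = ConnectionPool(open_backend_connection, max_size=2)
for _ in range(100):
    with pool.connection() as conn:
        pass  # send the request over `conn`
print(len(opened))  # 1: sequential requests reuse a single connection
```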

6. CDN Integration

- Load balancers can work alongside CDNs to serve static content (such as images, videos, and stylesheets) from edge servers located closer to the end users. By integrating with CDNs, load balancers help reduce the load on backend servers and accelerate content delivery.

By optimizing how traffic is handled, load balancers significantly enhance system performance. They reduce the strain on backend servers, improve response times, and ensure that users experience smooth, uninterrupted service, even under heavy load. These performance optimizations are especially important in large-scale, global applications.

Choosing the Right Load Balancer

Selecting the right load balancer for your system is critical to optimizing traffic management and ensuring both performance and availability. There are several factors to consider when choosing between hardware-based, software-based, or cloud-based load balancing solutions, as well as which specific technology to implement.

1. Hardware vs. Software Load Balancers

- Hardware Load Balancers: These are dedicated physical devices designed to manage network traffic. They offer high performance, scalability, and security features. However, they can be expensive to implement and maintain, and may not offer the flexibility required for dynamic, cloud-based environments.

- Software Load Balancers: These are flexible, cost-effective solutions that run on commodity hardware or virtual machines. Software load balancers such as Nginx, HAProxy, and Traefik are popular choices in many environments because they can be customized and scaled according to your specific needs.

2. Cloud-Based Load Balancers

- For cloud-native environments, cloud providers offer integrated load balancing solutions that are easy to deploy and scale. These include:

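- AWS Elastic Load Balancer (ELB): Offers the Application Load Balancer (Layer 7), Network Load Balancer (Layer 4), and Gateway Load Balancer (for routing traffic through third-party virtual appliances), along with the previous-generation Classic Load Balancer.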

- Google Cloud Load Balancing: Offers both global and regional load balancing, along with support for both TCP/UDP and HTTP(S) traffic.

- Azure Load Balancer: Provides Layer 4 (TCP/UDP) load balancing for virtual machines and other Azure resources. For Layer 7 (HTTP/HTTPS) load balancing, Azure offers Application Gateway and Front Door.

3. Key Selection Criteria

When choosing a load balancer, consider the following criteria:

- Traffic Type: Determine whether you need Layer 4 (transport-level) or Layer 7 (application-level) load balancing. If you need more granular control over HTTP/HTTPS traffic, a Layer 7 load balancer is essential.

- Scalability: Consider whether your load balancer needs to handle traffic spikes and large-scale operations. Cloud-based solutions tend to be more scalable, as they can automatically adapt to varying traffic loads.

- Deployment Flexibility: Cloud-based load balancers offer easy integration with cloud-native environments, while on-premises solutions give you more control over configuration and security.

- Cost: Cloud-based load balancers are typically priced based on usage, which can be more cost-effective for smaller applications or variable traffic loads. Hardware load balancers involve significant upfront and maintenance costs.

4. Popular Load Balancing Technologies

- Nginx: A versatile open-source solution known for its reverse proxy and Layer 7 load balancing capabilities.

- HAProxy: A high-performance, open-source load balancer that supports both Layer 4 and Layer 7 traffic, widely used in enterprise environments.

- AWS Elastic Load Balancer (ELB): A scalable, fully managed solution with options for both Layer 4 and Layer 7 load balancing.

- F5 Networks: A well-known provider of enterprise-grade hardware load balancers that offer advanced security and performance features.

- Traefik: A modern cloud-native solution designed for containerized applications, commonly used in Kubernetes environments.

Choosing the right load balancer involves balancing performance, scalability, cost, and flexibility based on your application’s specific needs. Carefully evaluating these options will ensure that you select a solution capable of handling both current and future traffic demands.

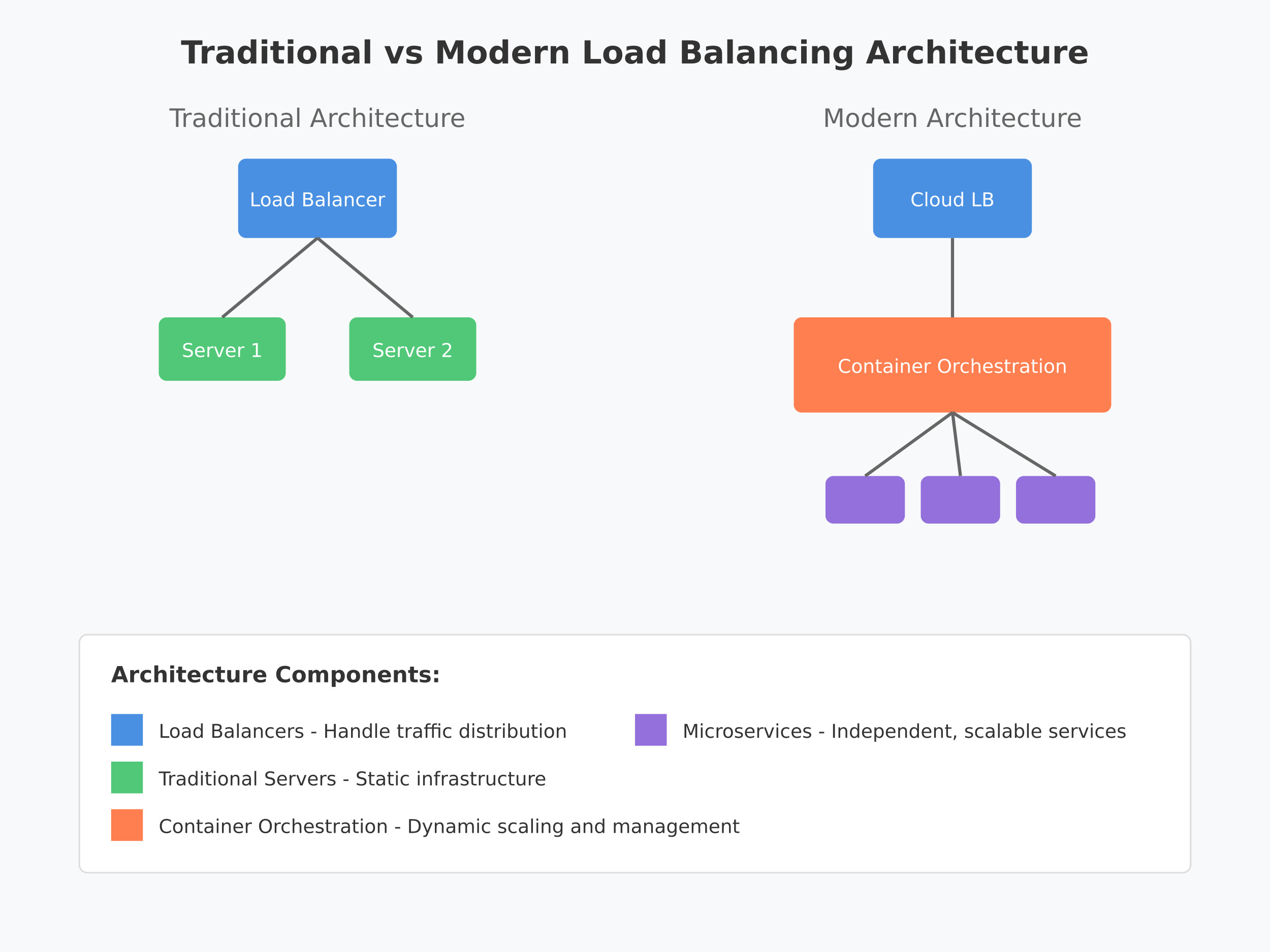

Modern Load Balancing Architectures

Service Mesh Architecture

Modern applications often employ a service mesh architecture, which provides advanced load balancing capabilities:

- Sidecar Pattern
  - Each service has a proxy sidecar
  - Handles service-to-service communication
  - Provides automatic load balancing
  - Enables advanced traffic management

- Features
  - Circuit breaking
  - Fault injection
  - Traffic splitting
  - A/B testing
  - Canary deployments

Multi-Cloud Load Balancing

- Global traffic management across cloud providers

- Automatic failover between regions

- Cost optimization across providers

- Unified traffic management

Serverless Integration

- Event-driven load balancing

- Function-level traffic distribution

- Auto-scaling based on event volume

- Pay-per-use model

Kubernetes Integration

- Internal load balancing (Service types)

- Ingress controllers

- Service mesh integration

- Automatic pod scaling

- Features
  - Label-based routing
  - Health checking
  - Circuit breaking
  - Traffic splitting

Edge Load Balancing

- Location-aware routing

- Edge caching

- DDoS protection

- SSL/TLS termination at the edge

- Features
  - Geographic routing
  - Cache optimization
  - Edge functions
  - Real-time analytics

Blue-Green Deployments

- Zero-downtime deployments

- Easy rollback capability

- Traffic shifting between versions

- Risk mitigation
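At the load balancer, a blue-green cutover amounts to flipping which backend pool is “live”. A minimal sketch with hypothetical pool and server names; because the idle environment keeps running, rollback is simply another flip.

```python
import threading
from typing import Dict, List


class BlueGreenRouter:
    def __init__(self, blue: List[str], green: List[str]) -> None:
        self.pools: Dict[str, List[str]] = {"blue": blue, "green": green}
        self.live = "blue"
        self._lock = threading.Lock()  # readers never see a half-finished switch

    def live_pool(self) -> List[str]:
        """Servers that new requests should be balanced across."""
        with self._lock:
            return self.pools[self.live]

    def switch(self) -> str:
        """Send all new traffic to the other environment; call again to roll back."""
        with self._lock:
            self.live = "green" if self.live == "blue" else "blue"
            return self.live


router = BlueGreenRouter(blue=["v1-a", "v1-b"], green=["v2-a", "v2-b"])
router.switch()            # cut over to green (v2)
print(router.live_pool())  # ['v2-a', 'v2-b']
router.switch()            # roll back to blue (v1)
```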

Canary Releases

- Gradual traffic shifting

- A/B testing capability

- Feature flag integration

- Risk-based deployment
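A minimal sketch of a percentage-based canary split. Hashing a (hypothetical) user ID into a stable bucket, rather than choosing randomly on every request, keeps each user on one version, and users already on the canary stay there as the percentage is raised.

```python
import hashlib


def bucket(user_id: str) -> float:
    """Stable value in [0, 100) derived from the user ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000 / 100


def choose_version(user_id: str, canary_percent: float) -> str:
    """Send roughly `canary_percent`% of users to the canary build."""
    return "canary" if bucket(user_id) < canary_percent else "stable"


users = [f"user-{i}" for i in range(10_000)]
share = sum(choose_version(u, 10) == "canary" for u in users) / len(users)
print(f"{share:.1%} of users on canary")  # close to 10%
```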

Circuit Breaking Patterns

- Automatic failure detection

- Graceful degradation

- Service isolation

- Self-healing capabilities
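Circuit breaking can be sketched as a small state machine. This minimal, single-threaded version uses hypothetical thresholds: it opens after a run of consecutive failures, fails fast while open, and after a cooldown lets calls through as trials (half-open), where a success closes the circuit and a failure reopens it. The injectable `clock` exists only to make the sketch easy to test.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """Closed -> open after `failure_threshold` failures; half-open after `reset_timeout` s."""

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0                        # consecutive failures seen
        self.opened_at: Optional[float] = None  # when the circuit last opened

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cooldown over: let trial calls through
        return "open"

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.state == "open":
            raise RuntimeError("circuit open: failing fast")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures = 0
        self.opened_at = None  # any success closes the circuit
        return result


breaker = CircuitBreaker(failure_threshold=3, reset_timeout=10.0)


def flaky_backend() -> str:
    raise ConnectionError("backend unavailable")


for _ in range(3):
    try:
        breaker.call(flaky_backend)
    except ConnectionError:
        pass
print(breaker.state)  # 'open': further calls fail fast for 10 seconds
```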

Challenges and Best Practices

While load balancers are essential for ensuring high availability and performance, their configuration and maintenance come with challenges. Understanding these challenges and following best practices will help you optimize your system and avoid common pitfalls.

1. Common Challenges

Scalability Limits

Although load balancers help distribute traffic, they themselves can become bottlenecks if not properly scaled. A single load balancer handling too much traffic may introduce latency or fail under high loads.

Solution: Use multiple load balancers in an active-active configuration to distribute the load among them, or leverage auto-scaling features in cloud environments.

DDoS Attacks

Distributed Denial of Service (DDoS) attacks can overwhelm load balancers with massive amounts of traffic, causing system outages.

Solution: Implement DDoS protection mechanisms at the network level, such as rate-limiting, IP whitelisting, and using Web Application Firewalls (WAFs) in conjunction with load balancers.
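Rate limiting is often implemented as a token bucket per client. A minimal sketch follows, with hypothetical rate and burst values. A real deployment would also evict idle entries so the table cannot grow without bound, and per-IP limits alone will not absorb a large volumetric attack, which is why network-level DDoS protection is still needed.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Each client may burst up to `capacity` requests, refilled at `rate` tokens/second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self._buckets: Dict[str, Tuple[float, float]] = {}  # ip -> (tokens, last_seen)

    def allow(self, client_ip: str) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(client_ip, (self.capacity, now))
        # Refill for the time elapsed since this client was last seen, up to the cap.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[client_ip] = (tokens - 1, now)
            return True
        self._buckets[client_ip] = (tokens, now)
        return False  # over the limit: reject (e.g. with HTTP 429)


now = 0.0
limiter = TokenBucketLimiter(rate=1.0, capacity=3, clock=lambda: now)
print([limiter.allow("198.51.100.9") for _ in range(4)])  # [True, True, True, False]
```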

Session Persistence (Sticky Sessions)

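Sticky sessions tie each user to one backend server, so if that server fails, or a load balancer that keeps the session mapping in memory fails, users can lose their sessions.

Solution: Use cookie-based session persistence, so the server mapping travels with the client instead of living in a single load balancer, or replicate session state across multiple servers to avoid depending on any single server or load balancer.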

Misconfigured Health Checks

Improperly configured health checks can lead to traffic being routed to unhealthy or underperforming servers.

Solution: Regularly fine-tune health checks to monitor critical application-level performance metrics, such as response time and CPU utilization.

2. Best Practices

Monitor and Optimize Regularly

Continuously monitor your load balancers to identify performance bottlenecks or failures early. Tools like Prometheus and Grafana can help visualize metrics.

Tip: Analyze logs to detect unusual traffic patterns and fine-tune traffic distribution algorithms or scaling policies.

Use Auto-scaling Features

In dynamic environments where traffic fluctuates, manually scaling your load balancers and servers can be inefficient.

Tip: Cloud-based load balancers often come with built-in auto-scaling capabilities. Ensure that your infrastructure is set up to scale both horizontally and vertically based on traffic loads.

Optimize for Redundancy

Always ensure redundancy for both your load balancers and backend servers. Use multiple load balancers in failover or active-active setups to avoid single points of failure.

Tip: Implement global load balancing across multiple data centers to further increase fault tolerance.

Secure Your Load Balancers

As entry points into your network, load balancers are critical security components. Use secure configurations, apply patches regularly, and encrypt all communication using SSL/TLS.

Tip: Consider deploying Web Application Firewalls (WAFs) and Intrusion Detection Systems (IDS) alongside your load balancers to provide extra layers of security.

Distribute Traffic Based on Performance

Not all servers in your environment may have the same capacity or performance. Using weighted load balancing allows you to send more traffic to higher-performing servers.

Tip: Regularly assess server performance and adjust load balancer settings to reflect the capacity of each server.

Plan for Disaster Recovery

Ensure that your load balancers are integrated into your disaster recovery plan. Load balancers can fail, and having a clear plan for traffic rerouting and failover is crucial.

Tip: Regularly test failover mechanisms to ensure that they function as expected during a real-world outage.

By anticipating challenges and adhering to best practices, you can make your load balancing setup resilient, scalable, and secure. Proper configuration and continuous monitoring will ensure your infrastructure performs optimally, even under heavy loads or during unexpected failures.

Conclusion

Load balancers are an essential tool for any organization aiming to achieve high availability, optimal performance, and scalability in their infrastructure. Whether you are managing a small application or an enterprise-grade global service, load balancers help distribute traffic efficiently, prevent downtime, and maintain a seamless user experience.

In this article, we have explored the core concepts of load balancing, from the different types of load balancers and algorithms to optimizing for high availability and performance. We also covered how to choose the right load balancer for your environment, surveyed modern architectures such as service meshes, Kubernetes integration, and edge load balancing, and discussed common challenges and best practices.

Key takeaways include:

- Load balancing is crucial for distributing traffic and ensuring redundancy.

- Different algorithms serve different use cases; select the one that fits your workload.

- Load balancers optimize both system performance and availability through features like auto-scaling, SSL termination, and geographic distribution.

- Challenges such as DDoS attacks and misconfigured health checks can be mitigated with proper planning and monitoring.

- Effective load balancing enables organizations to handle traffic spikes, reduce latency, and provide a consistent, reliable service.

By mastering load balancers and implementing them as part of your infrastructure, you can future-proof your systems against traffic surges and potential failures, ensuring both optimal performance and continuous service availability for your users.

Call to Action

As you continue to refine your infrastructure, consider how you can further optimize load balancing to meet your specific performance and availability goals. Experiment with different strategies, monitor traffic patterns, and adapt as your user base grows.

I hope this article has provided valuable insights into load balancing and its impact on modern infrastructure. Keep exploring, learning, and implementing best practices to master traffic management for high availability and performance in your systems.


All Rights Reserved. Copyright , Central Coast Communications, Inc.