and the distribution of digital products.


The Compression of Tech Maturity in the Age of AI

Technology used to take decades to develop and mature. But in the age of AI, the timeline is extremely compressed. Today, Kristopher Sandoval explains why we should be cautious about using AI in its current form.

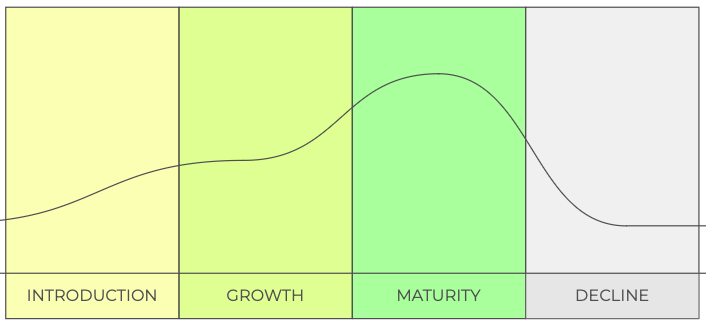

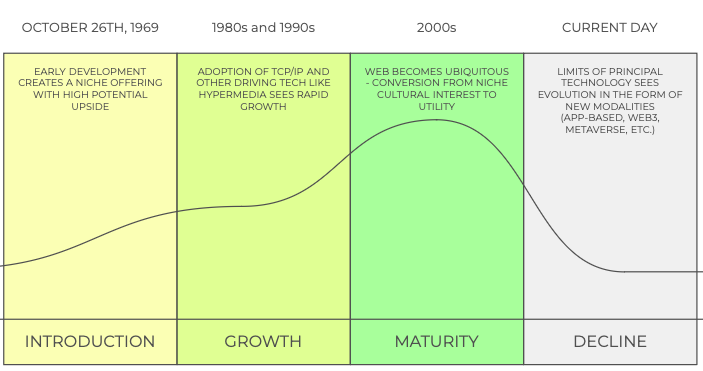

Every technology goes through a process of maturity. No matter the technology or the scope of its application, a new invention follows an ebb and flow from initial development, through maturity, and into obsolescence.

However, AI and LLMs present an interesting problem. What once took decades to develop and mature has seen wide use across industries in a matter of months. AI has exploded in utility and adoption in ways that nobody could predict. While this technology is promising, its relatively compressed tech maturity cycle does present some cause for concern.

Below, we’ll look at this tech maturity problem and give some concrete examples of where this has introduced some risk into the market.

A Model for Tech Maturity

Before we can begin to look at the problem behind this tech maturity compression, we must first clearly define what tech maturity looks like. Generally speaking, technical evolution can best be presented as a process that takes place over several distinct stages.

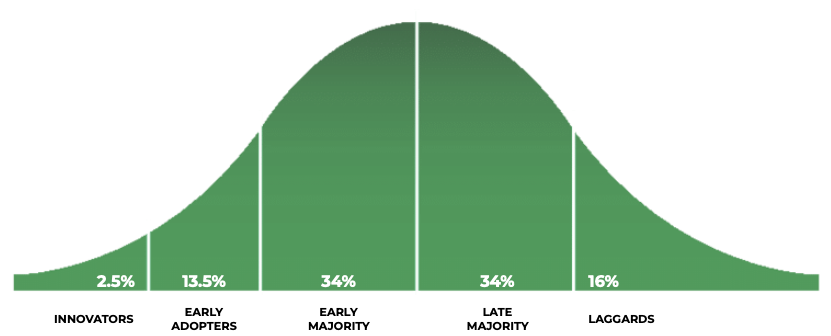

In the introduction stage, we tend to see technology develop the base attributes that make it compelling for adoption. This introductory stage is typically when innovators and the earliest adopters take on the mantle of a new technology, eyeing its promise for disruption and innovation. Technologies in this stage are not perfect — in many cases, they represent only the barest of solutions — but they nonetheless offer enough promise to attract some attention.

In the next stage, we see growth of the initial product offering. At this stage, early adopters influence wider adoption, and as the technology evolves, we start to see mass market appeal and innovation. Technologies in this stage move toward more standardized forms that can still evolve and iterate, offering stable yet improving experiences for early adopters.

Next, technology enters the maturity phase. The maturity phase is when a technology sees its last major developments, as novel and innovative experiences give way to stable and widely adopted solutions. At this stage, the late majority of users is established, and the products built on the technology become nearly ubiquitous.

Finally, technology enters a slow decline. In this state, the technology’s limitations begin to outweigh its benefits, kickstarting new innovation, iteration, and development. At this stage, laggards typically buy in cheaply but get far less value from the technology.

It’s important to note that this pattern can be replicated across a wide variety of technologies, from farming to jet aircraft. What is critical is the understanding that this pattern comes with a bell curve of adoption. Most technological adoption will happen in the growth and maturity stages, aligned with the technology developing for broader use.
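The bell curve of adoption is commonly modeled with Everett Rogers’ diffusion-of-innovations categories (the same innovators, early adopters, early majority, late majority, and laggards discussed here), each defined by how many standard deviations an adopter falls from the mean adoption time. The sketch below assumes adoption times are normally distributed, which is an idealization rather than a measurement of any real technology, and computes each segment’s share using only Python’s standard library.

```python
from statistics import NormalDist

# Rogers-style adopter segments, bounded by standard deviations (z-scores)
# from the mean adoption time. Assumes adoption time is normally distributed.
SEGMENTS = [
    ("innovators", float("-inf"), -2.0),
    ("early adopters", -2.0, -1.0),
    ("early majority", -1.0, 0.0),
    ("late majority", 0.0, 1.0),
    ("laggards", 1.0, float("inf")),
]

def segment_shares() -> dict[str, float]:
    """Return the fraction of all eventual adopters that falls in each segment."""
    std_normal = NormalDist(mu=0.0, sigma=1.0)
    # Area under the bell curve between each pair of boundaries.
    return {name: std_normal.cdf(hi) - std_normal.cdf(lo) for name, lo, hi in SEGMENTS}

if __name__ == "__main__":
    for name, share in segment_shares().items():
        print(f"{name:<15} {share:6.1%}")
```

This prints roughly 2.3%, 13.6%, 34.1%, 34.1%, and 15.9%, which Rogers rounds to the familiar 2.5%, 13.5%, 34%, 34%, and 16%. The early and late majority together make up about two thirds of all adopters, which is why most adoption lands in the growth and maturity stages.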

As an example, we can look at how something like the internet developed over time. In the following example, the internet and the World Wide Web are treated as a single topic: despite their subtle differences, end users experience them as complementary parts of one product.

This cycle is made even more interesting by considering the pressure of adoption. Throughout each of these stages, adoption carries certain risks and benefits. If you adopt early, you might be using a transformative technology before any of your competitors, unlocking substantial potential benefits. Conversely, if you adopt too late, you might be using a technology that has already become dated while your competitors have moved on to the new best solution.

This struggle causes a sort of pressure throughout the cycle of a technology. It’s one of the reasons you tend to see a lot of hype and excitement in predictable patterns, especially as the early majority begins to see their investment pay dividends.

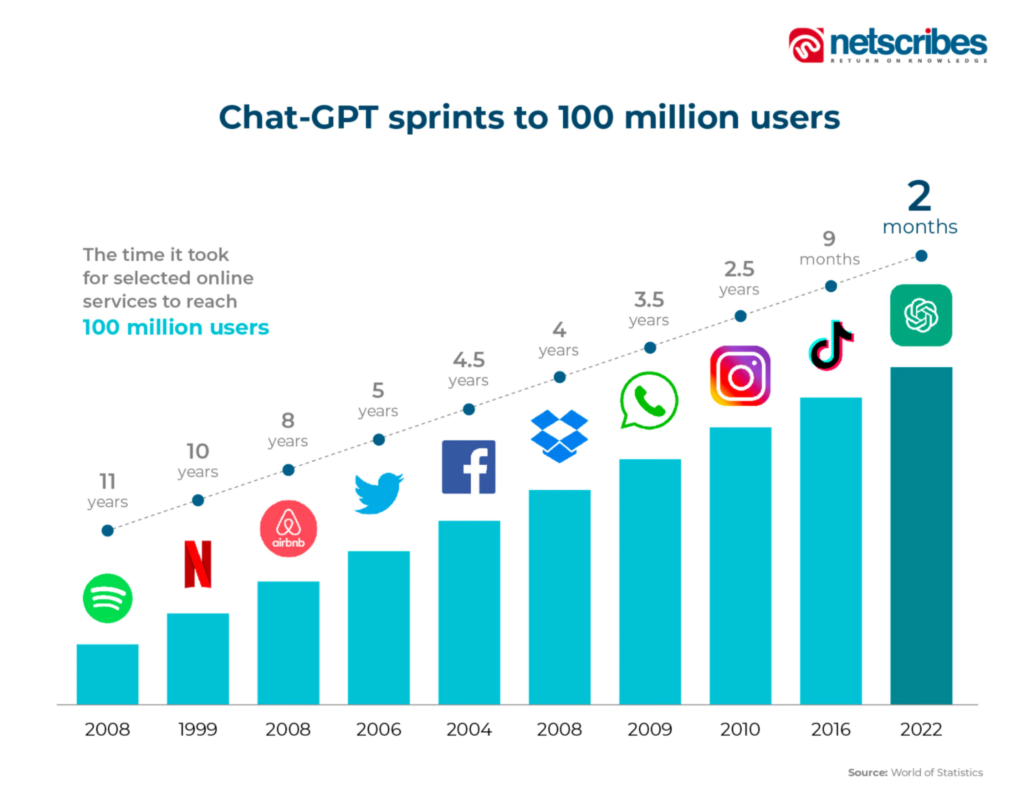

The Problem with AI Maturity

AI has seen meteoric increases in user adoption. In the typical development cycle, a product gains the lion’s share of its user base during its late growth and early maturity phases. AI, by contrast, has seen explosive adoption even for products in the earliest phases.

One clear example of this trend is ChatGPT from OpenAI. According to a report from Netscribes, ChatGPT took only two months to reach its first 100 million users. Compared to other everyday technologies, this is nothing short of miraculous. Even TikTok, which had a built-in audience after the demise of Vine and Musical.ly, took nine months to reach that mark. The next fastest was Instagram, which took 2.5 years to gain 100 million users. A more typical example is Spotify, which took several product iterations and 11 years to reach 100 million users.
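For a rough sense of scale, the sketch below turns the time-to-100-million figures quoted above into an average number of new users per month and a speed ratio against ChatGPT. It assumes, unrealistically, that growth was linear, and it converts 2.5 years to 30 months and 11 years to 132 months; it is only arithmetic on the cited figures, not new data.

```python
# Months to reach 100 million users, as quoted in the article.
MONTHS_TO_100M = {
    "ChatGPT": 2,
    "TikTok": 9,
    "Instagram": 30,  # 2.5 years
    "Spotify": 132,   # 11 years
}
TARGET_USERS = 100_000_000

def avg_users_per_month(months: int) -> float:
    """Average new users per month, assuming (unrealistically) linear growth."""
    return TARGET_USERS / months

def times_faster(fast: str, slow: str) -> float:
    """How many times faster `fast` reached 100M users than `slow`."""
    return MONTHS_TO_100M[slow] / MONTHS_TO_100M[fast]

if __name__ == "__main__":
    for product, months in MONTHS_TO_100M.items():
        rate = avg_users_per_month(months) / 1_000_000
        print(f"{product:<10} ~{rate:5.1f}M users/month")
    for product in ("TikTok", "Instagram", "Spotify"):
        print(f"ChatGPT vs {product}: {times_faster('ChatGPT', product):.1f}x faster")
```

On these numbers, ChatGPT’s average pace was 4.5 times TikTok’s, 15 times Instagram’s, and 66 times Spotify’s.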

Simply put, few technologies have seen such rapid increases in visibility and user acquisition as AI. Because of this compressed maturity cycle and user acquisition pipeline, we’re starting to see AI adopted widely even though the technology is still in its relative infancy.

Where Is AI Currently?

For some, the claim that AI is still in its relative infancy might seem strong. The reality is that AI, although promising, still has significant development and capability gaps, making it far from a mature technology. To set the stage, let’s look at the current state of AI models, specifically large language models (LLMs).

What Does AI Do Well?

As currently developed, AI is essentially a predictive model. LLMs are designed to take a word or sentence and generate the output most likely to follow it, based on patterns in their training data. This results in a system that works well, provided it has a data set that can supply context.

Accordingly, AI is currently very good at pattern recognition. The detection of patterns lends itself to discovering trends and context at scale, offering significant benefits to content classification, summarization, and even comparison. Generating text or images based on previous content is the bread and butter of these systems, and it’s hard to argue that LLMs are anything but miraculous in their application of these strengths.

The key point here, however, is that these wonderful solutions require context, and the quality of that context will largely depend on the quality of the data you have used to generate the material in question.
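The “predict what comes next” idea, and its dependence on context, can be illustrated with a toy bigram model that counts which word most often follows another in its training text. This is a deliberately simplified, hypothetical stand-in: real LLMs use neural networks over subword tokens and far longer contexts, but the principle of choosing a likely continuation from observed data is similar in spirit.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it never appeared."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat (follows "the" twice; mat and fish once each)
print(predict_next(model, "sat"))  # on
print(predict_next(model, "dog"))  # None: no context, no prediction
```

The model can only echo patterns present in its training text, which is why the quality of that text matters so much.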

What Does AI Do Poorly?

For this reason, current LLMs struggle significantly with tasks that lack data and context. Unguided generation can be very difficult for current models. Although zero-shot learning techniques are making headway in this area, they still depend on data sets adjacent to the subject being generated.

We can see a significant example of AI struggling with this effort in human-centric content creation. Asking an AI to generate a hand will result in something that matches the criteria on paper — a human-like appendage with skin and several fingers. On closer examination, we often find extra fingers, distorted perspectives, strange textures, and other artifacts that are included only because the system is attempting to replicate something ambiguous.

AI also struggles significantly with ambiguous decisions, inference, and logic. Non-algorithmic reasoning, morality, philosophical argumentation, and other such “soft arts” are hard for an LLM to handle because there is no “right” answer. When there is no “right” answer, there’s no solid context, and without solid context, the system struggles.

What Does AI Struggle With?

Finally, there are areas where AI flat-out struggles. Regulatory invisibility, the difficulty of seeing what data a model has ingested and how that data is used, is a major concern in this realm. AI can be instructed to follow regulations such as GDPR, but when an LLM depends on processing as much data as possible from as many sources as possible, the data collection process itself can work against those rules. This can cause serious issues with user and data privacy, and it is one of the main concerns raised by those who argue against AI.

Another major issue relates to how LLMs are actually trained. One of the sea changes that has accelerated AI development is retraining: using data entered into the system to further train the model iteratively. While this improves outcomes, it also means that organizations feeding real intellectual property into a model, such as a sales deck used to improve cold calling, might see that IP leak at scale. This problem is so critical that Gartner has suggested it will seriously slow AI adoption and potentially result in diminishing returns.

Perhaps the most significant issues with AI in its current state are relevancy and hallucination. AI relies on context, and an LLM is nothing more than a system that provides what should come next. When a system has no context for what should come next, it often makes up that value, resulting in false data that is shared as if it were truth. This can result in huge issues with the resulting output, significantly impacting the utility of the solution on its face.
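A toy illustration of this failure mode, under heavy simplifying assumptions: the hypothetical greedy bigram generator below never says “I don’t know.” When it has no observed continuation for the current word, it falls back to the most common word in its training text and keeps going. Real LLMs hallucinate for more complex reasons, so treat this only as an analogy for how a system that always supplies “what comes next” can produce fluent but ungrounded output.

```python
from collections import Counter, defaultdict

def train(text: str) -> tuple[dict[str, Counter], Counter]:
    """Return bigram follower counts and overall word frequencies."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for cur, nxt in zip(words, words[1:]):
        follows[cur][nxt] += 1
    return follows, Counter(words)

def generate(follows, unigrams, start: str, length: int) -> tuple[list[str], list[bool]]:
    """Greedily extend `start`, recording whether each new word was grounded in data."""
    out = [start.lower()]
    grounded: list[bool] = []
    for _ in range(length):
        counts = follows.get(out[-1])
        if counts:
            out.append(counts.most_common(1)[0][0])
            grounded.append(True)
        else:
            # No observed continuation: instead of signalling uncertainty,
            # fall back to the globally most common word (the "made up" value).
            out.append(unigrams.most_common(1)[0][0])
            grounded.append(False)
    return out, grounded

corpus = "the capital of france is paris and the capital of italy is rome"
follows, unigrams = train(corpus)
words, grounded = generate(follows, unigrams, "berlin", 4)
print(" ".join(words))  # berlin the capital of france
print(grounded)         # [False, True, True, True]
```

Only the first word after the prompt was a guess, but every later step builds on it, so the output confidently pairs Berlin with France.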

This is not even to mention the fact that the data is only as good as its reference material, and since LLMs often use internet data and scraped information for training, this data can be poisoned quite easily. Concerted efforts to make data wrong or dangerously misleading can result in LLM outputs mirroring that low value and danger at scale.
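The poisoning risk can be shown with the same kind of hypothetical word-counting predictor: a few repeated, deliberately false sentences mixed into scraped training text are enough to flip its most likely answer. Production LLMs train on vastly more data, so this illustrates only the mechanism, not how much poisoned data a real attack would need.

```python
from collections import Counter, defaultdict
from typing import Optional

def train(texts: list[str]) -> dict[str, Counter]:
    """Build bigram follower counts from a list of scraped documents."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for cur, nxt in zip(words, words[1:]):
            follows[cur][nxt] += 1
    return follows

def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    return counts.most_common(1)[0][0] if counts else None

clean = ["the capital of france is paris"] * 3
poison = ["the capital of france is lyon"] * 5  # attacker-controlled pages

print(predict_next(train(clean), "is"))           # paris
print(predict_next(train(clean + poison), "is"))  # lyon: 5 poisoned votes beat 3 clean ones
```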

It should also be mentioned that, like every technology, LLMs and AI models are subject to the same security risks as any other connected service. This can be largely invisible to the average user typing into a GPT prompt window, but the risks intrinsic to these systems have been significant enough to warrant their own OWASP Top 10 list in recent years.

The Reality of AI

Although AI is being adopted as if it were a mature technology, it simply is not. That doesn’t mean you shouldn’t adopt AI. Far from it! AI is perhaps the most disruptive and promising technology of the last decade and can be used to develop and iterate on incredible products. What this does mean, however, is that you should be aware of these pitfalls and plan accordingly.

Simply put, AI is not as mature as it seems, and rapid adoption means that people are widely using a technology that they may have only a basic understanding of. Put another way, consider this: how many drivers can explain in basic terms what gasoline is? Now, how many users of ChatGPT do you think can explain in basic terms what zero-shot learning is?

A Case Study

This may sound theoretical, but we can look at some real-world risks this issue presents. Consider the current state of AI in software development. AI is widely used to generate basic and boilerplate code, and some business leaders suggest in private that AI could replace much of the software development landscape.

The reality of AI, however, does not currently support these opinions, even if the market is acting as if the product is much more mature. A great example of this reality can be found in the work of Bar Lanyado, a security researcher at Lasso Security. Using a novel method, Bar targeted the exact issues created by this tech compression and discovered a major vulnerability in current LLMs.

The idea was simple. By prompting LLMs with a large list of commonly asked coding questions, Bar’s team was able to find cases in which the LLM suggested packages that did not actually exist in the wild. With these package names in tow, Bar’s team could create any malicious package they desired under those names and upload it to public package repositories. From then on, when an LLM hallucinated one of these non-existent packages, users would find that the package did exist, but it was the malicious version deployed by Bar and the team.

These packages were created for research purposes, so they did not contain anything particularly bad. But what would be the case if a Fortune 500 company used LLMs that recommended hallucinated packages that were, in fact, malicious? Would a development team even detect these issues if they trusted the solution wholesale?
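One possible safeguard, sketched here under stated assumptions, is to treat every package name an LLM suggests as untrusted until it matches a list of dependencies the team has already vetted. The hypothetical `unvetted_packages` function below extracts names from `pip install` lines in generated text and reports any that are not on that allowlist; the allowlist, the package name `acme-hallucinated-pkg`, and the parsing rules are all illustrative. Simply checking whether a package exists on a public registry would not help here, because in the attack described above the malicious package does exist.

```python
import re
import shlex

# Hypothetical allowlist of dependencies the team has already reviewed.
APPROVED = {"requests", "numpy", "pandas"}

# Matches "pip install ...", "pip3 install ...", or "python -m pip install ..." lines.
PIP_LINE = re.compile(r"^\s*(?:python3?\s+-m\s+)?pip3?\s+install\s+(.+)$", re.MULTILINE)

def normalize(name: str) -> str:
    """Normalize a package name as PEP 503 does: lowercase, runs of -, _, . become -."""
    return re.sub(r"[-_.]+", "-", name).lower()

def unvetted_packages(generated_text: str, approved: set[str] = APPROVED) -> list[str]:
    """Return package names from pip install commands that are not on the allowlist."""
    allowed = {normalize(a) for a in approved}
    flagged: list[str] = []
    for match in PIP_LINE.finditer(generated_text):
        for token in shlex.split(match.group(1)):
            if token.startswith("-"):
                # Skip flags like --upgrade. Sketch limitation: flags that take a
                # value (e.g. -r requirements.txt) are not handled.
                continue
            # Drop extras and version specifiers: "pkg[extra]>=1.0" -> "pkg".
            name = normalize(re.split(r"[\[<>=!~;@ ]", token, maxsplit=1)[0])
            if name and name not in allowed and name not in flagged:
                flagged.append(name)
    return flagged

suggestion = """To parse the file, first run:
pip install requests acme-hallucinated-pkg --upgrade
"""
print(unvetted_packages(suggestion))  # ['acme-hallucinated-pkg']
```

Flagged names would then go to a human for review before anything is installed, rather than being trusted because a model recommended them.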

Final Thoughts: Be Cautious With AI

This piece was not written to suggest that nobody should use LLMs and AI. Quite the opposite, in fact. LLMs are incredible pieces of technology that can have drastic impacts on coding, iteration, and quality. What this piece does suggest, however, is that we be a bit cautious.

Simply put, AI and LLMs are not as mature as they seem right now, and adopting them as if they were just another proven technology can have substantial detrimental effects at both the macro and micro levels. AI and LLMs will continue to evolve over time, and in the future, these technologies will surely be as ubiquitous as Instagram or Spotify.

For now, however, caution is the name of the game. High attention to detail and a firmly raised eyebrow are essential to ensure that LLMs and AI are used in their appropriate use cases to prevent significant damage at a scale that is not easy to mitigate.

All Rights Reserved. Copyright , Central Coast Communications, Inc.