
Anchored Value Iteration and Its Impact on Bellman Consistency in Reinforcement Learning

:::info Authors:

(1) Jongmin Lee, Department of Mathematical Science, Seoul National University;

(2) Ernest K. Ryu, Department of Mathematical Science, Seoul National University and Interdisciplinary Program in Artificial Intelligence, Seoul National University.

:::

1.1 Notations and preliminaries

2.1 Accelerated rate for Bellman consistency operator

2.2 Accelerated rate for Bellman optimality operator

5 Approximate Anchored Value Iteration

6 Gauss–Seidel Anchored Value Iteration

7 Conclusion, Acknowledgments and Disclosure of Funding and References

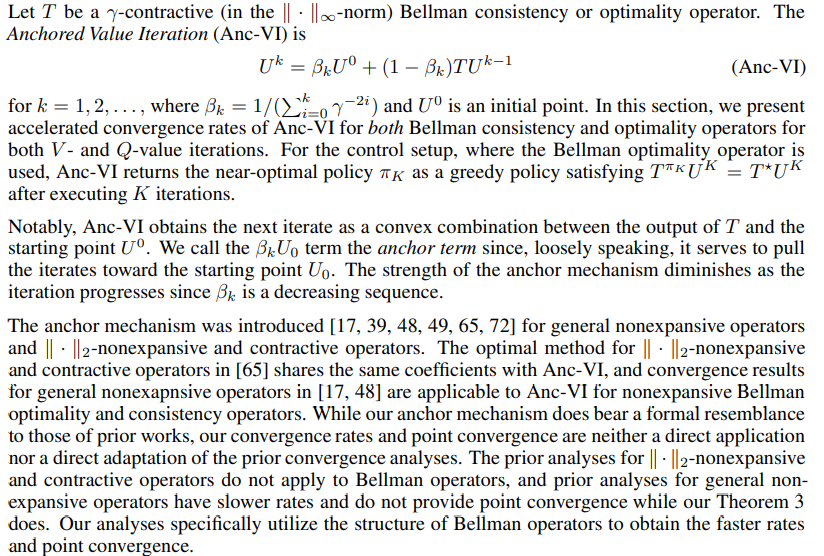

2 Anchored Value Iteration

The accelerated rate of Anc-VI for the Bellman optimality operator is more technically challenging to establish and is, in our view, the stronger contribution. However, we start by presenting the result for the Bellman consistency operator, for two reasons: it is the setting commonly studied in prior RL theory literature on accelerating value iteration [1, 31, 37, 38], and the analysis in the Bellman consistency setup serves as a conceptual stepping stone toward the analysis in the Bellman optimality setup.
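To make the two operators concrete, the following is a minimal Python sketch of anchored value iteration on a small tabular MDP. It assumes the Halpern-style anchored update U^k = β_k U^0 + (1 − β_k) T U^{k−1} with the coefficient schedule β_k = 1 / Σ_{i=0}^{k} γ^{−2i}; that schedule, the array layouts, and all function names (`bellman_consistency_operator`, `bellman_optimality_operator`, `anchored_value_iteration`, `bellman_error`) are assumptions for illustration, not the authors' code. The same iteration is applied to either operator, which mirrors the stepping-stone structure described above.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Operator = Callable[[Vector], Vector]


def bellman_consistency_operator(
    P_pi: Sequence[Sequence[float]], r_pi: Sequence[float], gamma: float
) -> Operator:
    """T^pi V = r^pi + gamma * P^pi V for a fixed policy pi (P_pi[s][s'], r_pi[s])."""
    def T(V: Vector) -> Vector:
        n = len(V)
        return [r_pi[s] + gamma * sum(P_pi[s][t] * V[t] for t in range(n)) for s in range(n)]
    return T


def bellman_optimality_operator(
    P: Sequence[Sequence[Sequence[float]]], r: Sequence[Sequence[float]], gamma: float
) -> Operator:
    """T* V(s) = max_a [r(s, a) + gamma * sum_s' P(s'|s, a) V(s')], with P[a][s][s'] and r[s][a]."""
    def T(V: Vector) -> Vector:
        n = len(V)
        return [
            max(r[s][a] + gamma * sum(P[a][s][t] * V[t] for t in range(n)) for a in range(len(P)))
            for s in range(n)
        ]
    return T


def anchored_value_iteration(T: Operator, U0: Vector, gamma: float, num_iters: int) -> Vector:
    """Anchored update U^k = beta_k * U^0 + (1 - beta_k) * T(U^{k-1}).

    ASSUMPTION (illustrative): beta_k = 1 / sum_{i=0}^{k} gamma^(-2i).
    """
    U = list(U0)
    denom = 1.0  # running value of sum_{i=0}^{k} gamma^(-2i); the i = 0 term is 1
    for k in range(1, num_iters + 1):
        denom += gamma ** (-2 * k)
        beta = 1.0 / denom
        TU = T(U)
        # Convex combination that pulls every iterate back toward the anchor U^0.
        U = [beta * u0 + (1.0 - beta) * tu for u0, tu in zip(U0, TU)]
    return U


def bellman_error(T: Operator, U: Vector) -> float:
    """Sup-norm Bellman error ||T U - U||_inf."""
    return max(abs(tu - u) for tu, u in zip(T(U), U))


if __name__ == "__main__":
    # Toy 2-state, 2-action MDP: action 0 stays put, action 1 switches state.
    P = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
    r = [[0.0, 1.0], [2.0, 0.0]]
    T_star = bellman_optimality_operator(P, r, 0.9)
    U = anchored_value_iteration(T_star, [0.0, 0.0], 0.9, 500)
    print(U, bellman_error(T_star, U))
```

On this toy MDP the optimal values are V* = (19, 20): in state 1 it is best to stay forever (2 / (1 − 0.9) = 20), and in state 0 it is best to switch once (1 + 0.9 · 20 = 19), so the printed iterate should be close to that vector with a near-zero Bellman error.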


:::info This paper is available on arxiv under CC BY 4.0 DEED license.

:::
