and the distribution of digital products.

What is embodied AI and why is Meta betting on it?

In what many are calling the “Year of Embodied AI,” Meta has taken a big step in advancing robotic capabilities through a suite of new technologies. Meta’s Fundamental AI Research (FAIR) division recently introduced three research artifacts—Meta Sparsh, Meta Digit 360, and Meta Digit Plexus—each bringing advancements in touch perception, dexterity, and human-robot collaboration.

What is embodied AI and why does it matter?

Embodied AI refers to artificial intelligence systems that are designed to exist and operate within the physical world, understanding and interacting with their surroundings in ways that mimic human perception and actions. Traditional AI systems excel at data analysis but fall short when applied to physical tasks, which require not only vision but also sensory feedback such as touch. By building embodied AI, researchers aim to create robots that can sense, respond, and even adapt to their environment, bridging the gap between digital intelligence and real-world functionality.

Meta’s innovations in embodied AI are aimed at achieving what its Chief AI Scientist Yann LeCun calls Advanced Machine Intelligence (AMI). This concept envisions machines that are capable of reasoning about cause and effect, planning actions, and adapting to changes in their environment, thereby moving from mere tools to collaborative assistants.

Meta’s breakthroughs in embodied AI: Sparsh, Digit 360, and Digit Plexus

Meta’s recent announcements underscore its commitment to tackling the limitations of current robotics technology. Let’s explore the capabilities of each new tool.

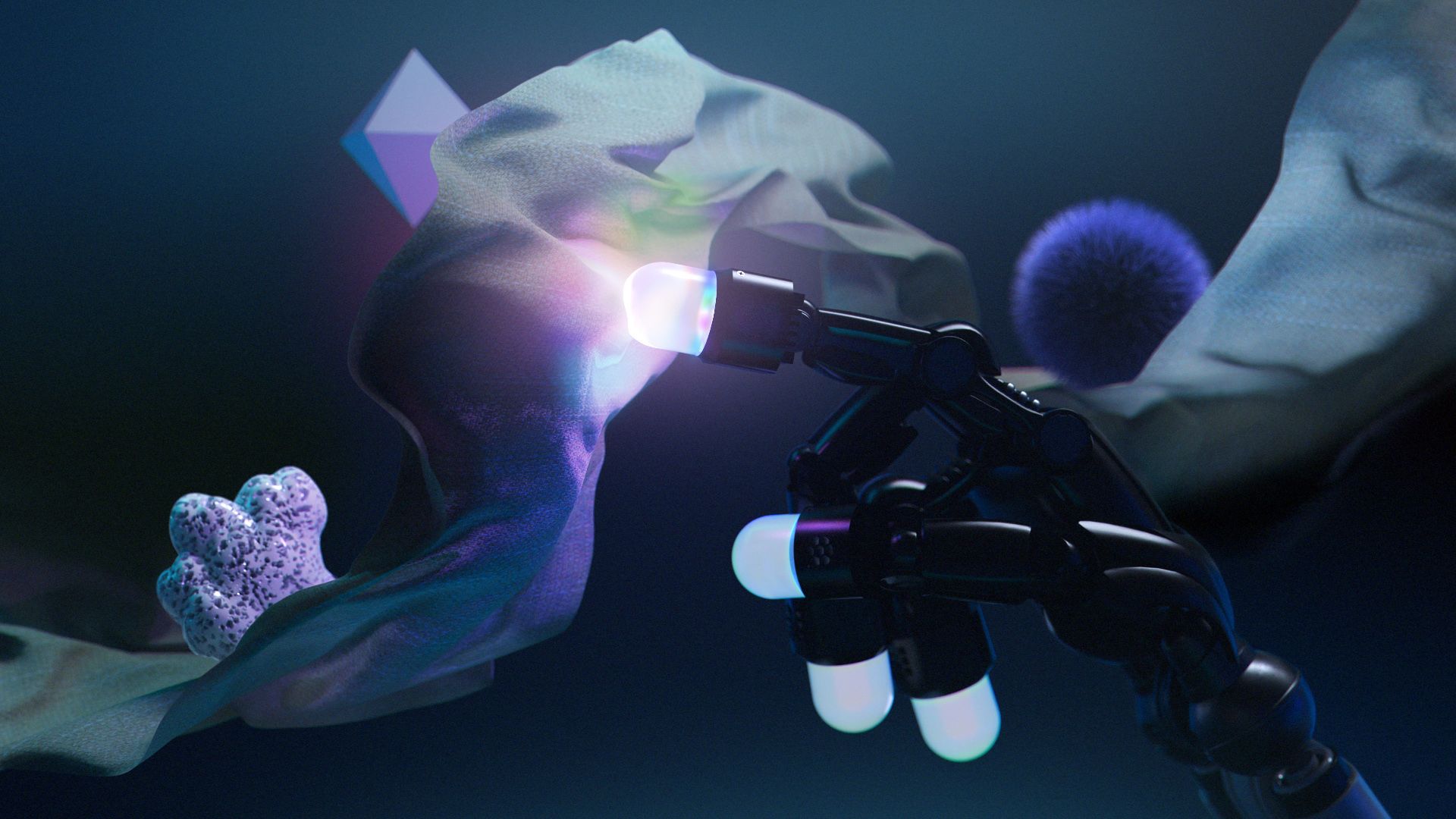

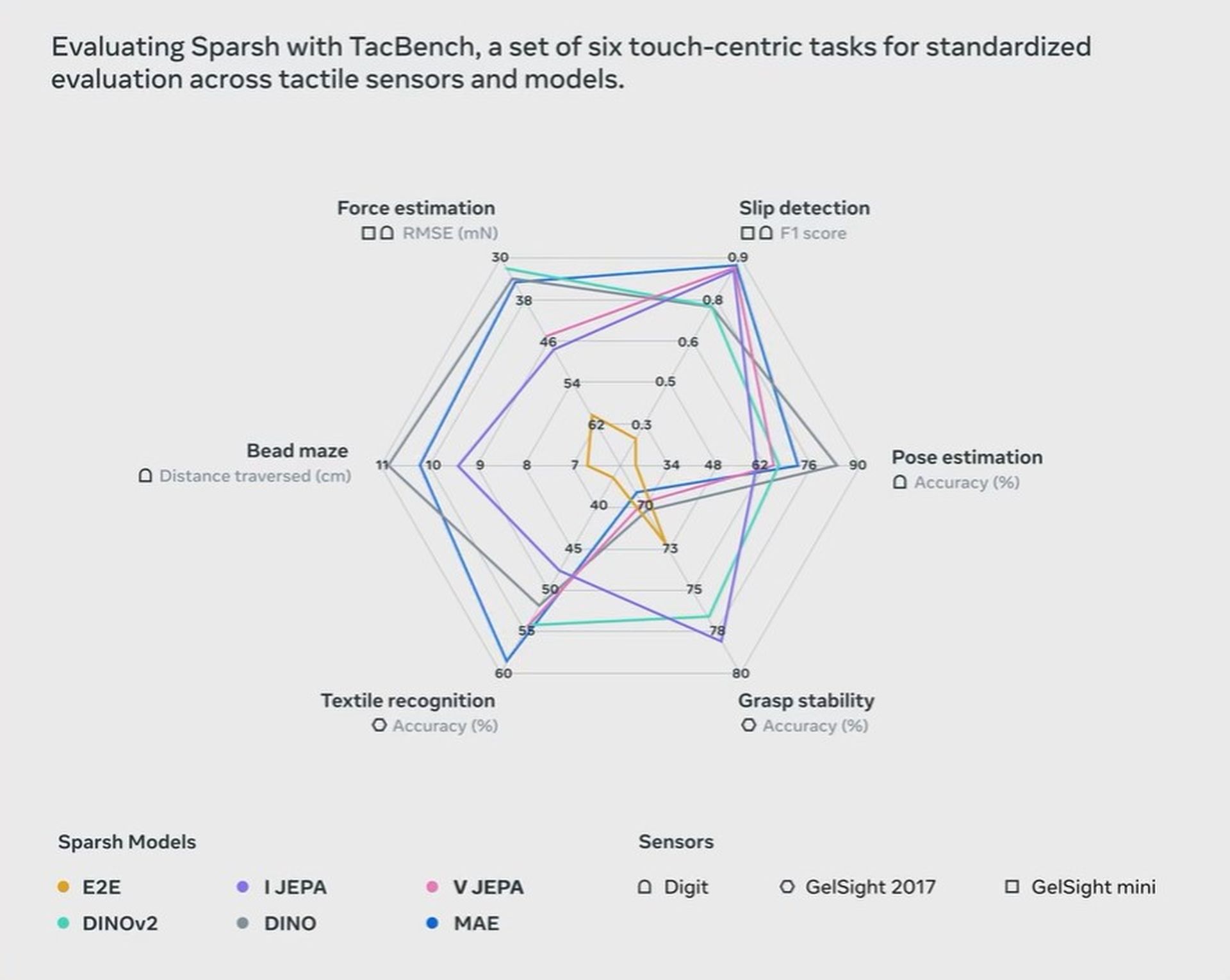

Meta Sparsh: The foundation of tactile sensing

Meta Sparsh, which means “touch” in Sanskrit, is a first-of-its-kind vision-based tactile sensing model that enables robots to “feel” surfaces and objects. Sparsh is a general-purpose encoder pretrained on a dataset of over 460,000 tactile images, which teaches it to recognize and interpret touch. Unlike traditional models that require task-specific training, Sparsh leverages self-supervised learning, allowing it to adapt to various tasks and sensors without needing extensive labeled data.

This ability to generalize is key for robots that need to perform a wide range of tasks. Sparsh works across diverse tactile sensors, integrating seamlessly into different robotic configurations. By enabling robots to perceive touch, Sparsh opens up opportunities in areas where dexterous manipulation and tactile feedback are critical, such as in medical applications, robotic surgery, and precision manufacturing.
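
The idea of one general-purpose encoder serving many tasks can be sketched in a few lines. This is only an illustration of the pattern, not Sparsh itself: `toy_encoder`, `linear_head`, the three hand-picked features, and every weight below are hypothetical stand-ins, whereas a real encoder would be a learned neural network.

```python
from typing import Callable, List

Image = List[List[float]]  # one tactile frame as rows of pixel intensities


def toy_encoder(image: Image) -> List[float]:
    """Hypothetical stand-in for a frozen, pretrained tactile encoder."""
    flat = [p for row in image for p in row]
    mean = sum(flat) / len(flat)  # overall contact intensity
    peak = max(flat)              # strongest local indentation
    # Sum of horizontal intensity changes, a crude texture cue.
    edges = sum(abs(row[i + 1] - row[i])
                for row in image for i in range(len(row) - 1))
    return [mean, peak, edges]


def linear_head(weights: List[float], bias: float) -> Callable[[List[float]], float]:
    """Small task-specific layer placed on top of the shared embedding."""
    def head(features: List[float]) -> float:
        return sum(w * f for w, f in zip(weights, features)) + bias
    return head


# Two downstream tasks reuse the same embedding; the weights are made up.
estimate_force = linear_head([2.0, 1.0, 0.0], 0.0)
score_texture = linear_head([0.0, 0.0, 0.5], 0.0)

frame = [[0.0, 0.5, 0.0],
         [0.5, 1.0, 0.5],
         [0.0, 0.5, 0.0]]
embedding = toy_encoder(frame)      # computed once per frame
force = estimate_force(embedding)   # task 1 reads the embedding
texture = score_texture(embedding)  # task 2 reads the same embedding
```

The design point is that only the small heads differ per task, so adding a new task does not require retraining the encoder.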

Meta Digit 360: Human-level tactile sensing in robotics

Digit 360 is Meta’s new tactile fingertip sensor designed to replicate human touch. Equipped with 18 distinct sensing features, Digit 360 provides highly detailed tactile data that can capture minute changes in an object’s surface, force, and texture. Built with over 8 million “taxels” (tactile pixels), Digit 360 allows robots to detect forces as subtle as 1 millinewton, enhancing their ability to perform complex, nuanced tasks.

This breakthrough in tactile sensing has practical applications across various fields. In healthcare, Digit 360 could be used in prosthetics to give patients a heightened sense of touch. In virtual reality, it could enhance immersive experiences by enabling users to “feel” objects in digital environments. Meta is partnering with GelSight Inc to commercialize Digit 360, aiming to make it accessible to the broader research community by next year.
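
To make the 1 millinewton figure concrete, here is a minimal sketch of how software might summarize a contact from per-taxel forces. The article does not describe Digit 360's actual data format, so the grid of forces in newtons, the `contact_summary` function, and the sample values are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

FORCE_THRESHOLD_N = 1e-3  # 1 millinewton, the sensitivity quoted in the article

Summary = Tuple[int, float, Tuple[float, float]]


def contact_summary(force_map: List[List[float]]) -> Optional[Summary]:
    """Return (active taxel count, total force in N, force-weighted centroid)."""
    active = [(r, c, f)
              for r, row in enumerate(force_map)
              for c, f in enumerate(row)
              if f >= FORCE_THRESHOLD_N]
    if not active:
        return None  # nothing above the detection threshold
    total = sum(f for _, _, f in active)
    centroid = (sum(r * f for r, _, f in active) / total,
                sum(c * f for _, c, f in active) / total)
    return len(active), total, centroid


# Hypothetical 3x3 patch of per-taxel forces in newtons.
patch = [[0.0, 0.0, 0.0],
         [0.0, 0.002, 0.002],
         [0.0, 0.0, 0.0005]]  # 0.5 mN falls below the threshold
summary = contact_summary(patch)
```

Here two taxels exceed the threshold, so the summary reports their combined force and a centroid lying between them, while the 0.5 mN reading is ignored.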

Meta Digit Plexus: A platform for touch-enabled robot hands

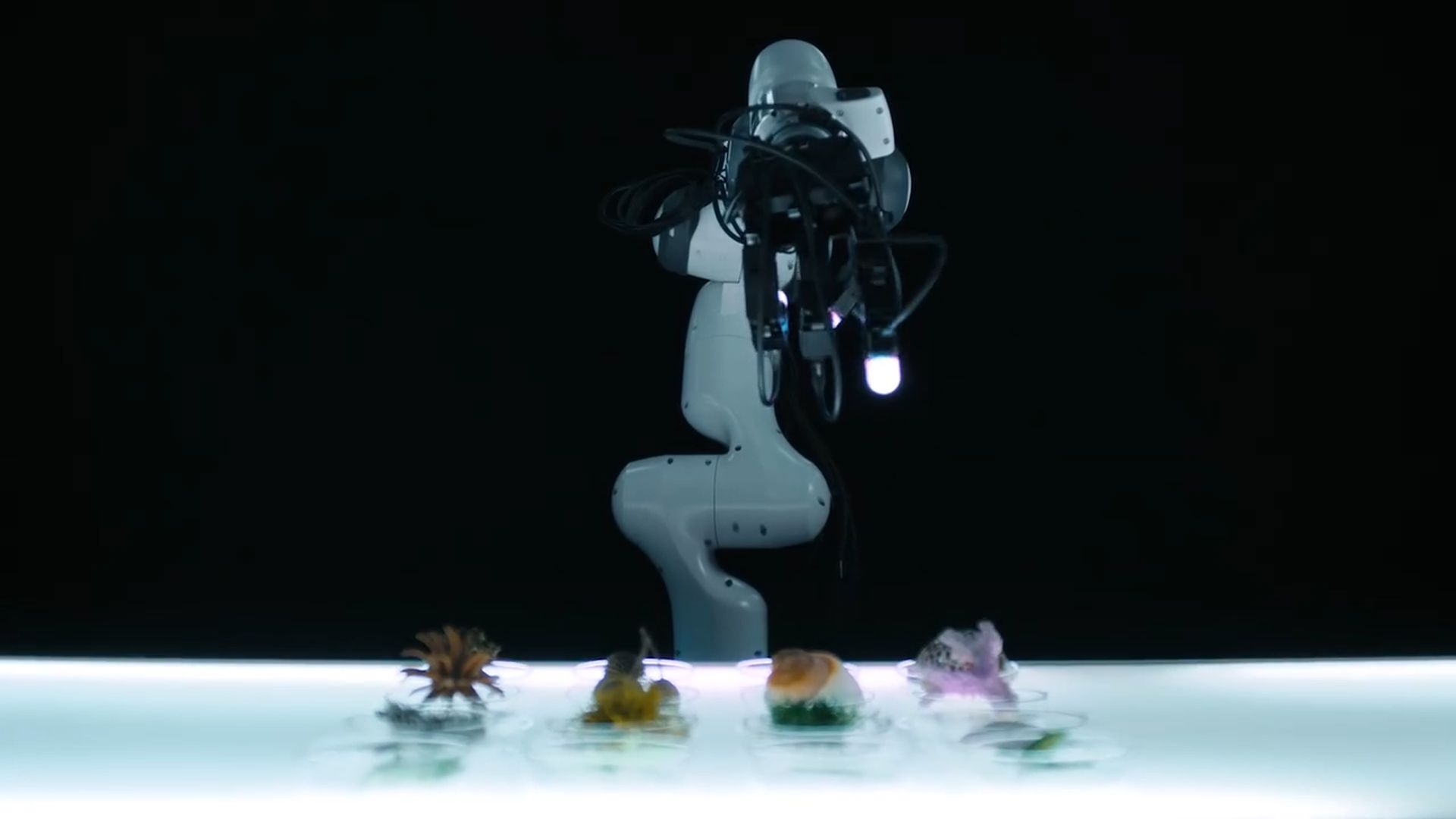

Meta’s third major release, Digit Plexus, is a standardized hardware-software platform designed to integrate various tactile sensors across a single robotic hand. Digit Plexus combines fingertip and palm sensors, giving robots a more coordinated, human-like touch response system. This integration allows robots to process sensory feedback and make real-time adjustments during tasks, similar to how human hands operate.

By standardizing touch feedback across the robotic hand, Digit Plexus enhances control and precision. Meta envisions applications for this platform in fields such as manufacturing and remote maintenance, where delicate handling of materials is essential. To help build an open-source robotics community, Meta is making the software and hardware designs for Digit Plexus freely available.
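
The real-time adjustment described above resembles a feedback loop: read the sensors, compare against a target, nudge the grip. The sketch below shows that loop with a simple proportional controller and a toy hand model. None of it comes from the Digit Plexus software; the function names, the gain, and the assumption that force scales linearly with the command are all hypothetical.

```python
from typing import Sequence


def total_grip_force(fingertips_n: Sequence[float], palm_n: float) -> float:
    """Fuse fingertip and palm readings into one grip-force estimate (N)."""
    return sum(fingertips_n) + palm_n


def next_command(command: float, measured_n: float, target_n: float,
                 gain: float = 0.2) -> float:
    """One proportional update of a normalized grip command in [0, 1]."""
    updated = command + gain * (target_n - measured_n)
    return min(1.0, max(0.0, updated))  # keep the command in range


def simulate(target_n: float, steps: int = 30) -> float:
    """Toy hand: 3 fingertips and a palm each report 0.5 N per unit command."""
    command = 0.0
    for _ in range(steps):
        fingertips = [0.5 * command] * 3
        palm = 0.5 * command
        measured = total_grip_force(fingertips, palm)
        command = next_command(command, measured, target_n)
    return command


final_command = simulate(target_n=1.0)
```

In this toy model the total force is twice the command, so a 1 N target settles near a command of 0.5. A real controller would also have to handle sensor noise, latency, and per-finger control.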

Meta’s partnerships with GelSight Inc and Wonik Robotics

In addition to these technological advancements, Meta has entered partnerships to accelerate the adoption of tactile sensing in robotics. Collaborating with GelSight Inc and Wonik Robotics, Meta aims to bring its innovations to researchers and developers worldwide. GelSight Inc will handle the distribution of Digit 360, while Wonik Robotics will manufacture the Allegro Hand—a robot hand integrated with Digit Plexus—expected to launch next year.

These partnerships are significant as they represent a shift towards democratizing robotic technology. By making these advanced tactile systems widely available, Meta is fostering a collaborative ecosystem that could yield new applications and improve the performance of robots across industries.

PARTNR: A new benchmark for human-robot collaboration

Meta is also introducing PARTNR (Planning And Reasoning Tasks in humaN-Robot collaboration), a benchmark designed to evaluate AI models on human-robot interactions in household settings. Built on the Habitat 3.0 simulator, PARTNR provides a realistic environment where robots can interact with humans through complex tasks, ranging from household chores to physical-world navigation.

With over 100,000 language-based tasks, PARTNR offers a standardized way to test the effectiveness of AI systems in collaborative scenarios. This benchmark aims to drive research into robots that act as “partners” rather than mere tools, equipping them with the capacity to make decisions, anticipate human needs, and provide assistance in everyday settings.
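
At its simplest, a benchmark of this kind yields a list of task outcomes that are then aggregated into scores. The sketch below computes a per-category success rate under that assumption. It does not use PARTNR's actual API or metrics; the `Episode` record and the category names `tidy` and `fetch` are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Episode = Tuple[str, bool]  # (task category, whether the task was completed)


def success_rates(episodes: Iterable[Episode]) -> Dict[str, float]:
    """Fraction of completed tasks per category."""
    counts = defaultdict(lambda: [0, 0])  # category -> [successes, attempts]
    for category, succeeded in episodes:
        counts[category][1] += 1
        if succeeded:
            counts[category][0] += 1
    return {cat: wins / total for cat, (wins, total) in counts.items()}


# Hypothetical results for one model; category names are placeholders.
results = [("tidy", True), ("tidy", False), ("fetch", True), ("fetch", True)]
rates = success_rates(results)
```

Reporting rates per category rather than one overall number makes it easier to see which kinds of collaborative tasks a model struggles with.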

Image credits: Meta
