A Semester-Long Experiment Shows Gamification Boosts Learning Outcomes in Software Testing Education

:::info Authors:

(1) Raquel Blanco, Software Engineering Research Group, University of Oviedo, Department of Computer Science, Gijón, Spain (rblanco@uniovi.es);

(2) Manuel Trinidad, Software Process Improvement and Formal Methods Research Group, University of Cadiz, Department of Computer Science and Engineering, Cádiz, Spain (manuel.trinidad@uca.es);

(3) María José Suárez-Cabal, Software Engineering Research Group, University of Oviedo, Department of Computer Science, Gijón, Spain (cabal@uniovi.es);

(4) Alejandro Calderón, Software Process Improvement and Formal Methods Research Group, University of Cadiz, Department of Computer Science and Engineering, Cádiz, Spain (alejandro.calderon@uca.es);

(5) Mercedes Ruiz, Software Process Improvement and Formal Methods Research Group, University of Cadiz, Department of Computer Science and Engineering, Cádiz, Spain (mercedes.ruiz@uca.es);

(6) Javier Tuya, Software Engineering Research Group, University of Oviedo, Department of Computer Science, Gijón, Spain (tuya@uniovi.es).

:::

:::tip Editor's note: This is part 3 of 7 of a study detailing attempts by researchers to create effective tests using gamification. Read the rest below.

:::

Table of Links

2.1 Software testing

2.2 Gamification

2.3 Gamification in software testing education

3.2 Participants

3.3 Materials

3.4 Procedure

3.5 Metrics

4.2 RQ2: Student performance

This section presents the design of the controlled experiment reported in this paper. Section 3.1 and Section 3.2 describe the course in which this experiment took place and the participating students, respectively. The materials used by the students in the experimental and control groups are described in Section 3.3 and the activities they carried out are explained in Section 3.4. Finally, the metrics defined to analyze the results of the experiment are presented in Section 3.5.

3.1 Course design

The experiment takes place in the course titled “Software Verification and Validation” in the 4th year of the Software Engineering degree at the University of Oviedo (Spain). This one-semester course (15 weeks) addresses the testing process and the techniques required to perform effective software tests. It consists of the following types of components:

Lectures, in which the professor presents the theoretical contents of the course and provides explanations, examples and practical recommendations. Lectures deal with the evaluation of software quality from the product point of view and focus mainly on software testing. They cover the software testing process, as well as the testing techniques and strategies used to create effective test suites. The theoretical contents of the course are assessed through an exam, which is worth 35% of the final grade.

Seminars, in which the students address the challenge of creating effective test suites following a continuous improvement approach. To this end, the students select and apply testing techniques previously introduced in the lectures. The students work on a sequence of four exercises of increasing difficulty, evenly distributed over the whole semester. For each exercise, the students work in teams of 3 or 4 members to create test suites for a program, provided by the professor, into which defects have been injected. After that, they work individually to continuously improve the effectiveness of their test suite, in order to find as many defects as possible. Table 2 briefly describes the program to be tested in each exercise, as well as the number of injected defects and some examples of these defects. The seminar contents and skills, which are worth 20% of the final grade, are assessed for each exercise on the basis of two elements: the quality of the test design report submitted by the students and the effectiveness of the submitted test suite, as determined by the SQLTest tool. The report is submitted before the effectiveness is measured and contains the test design, an explanation of the testing techniques used and the test cases that make up the test suite, along with the traceability between the test design and the test cases. The quality of the test design report is graded on the structure of the test design and the test cases, the correct use of the testing techniques and the degree of traceability established.

Laboratories, in which the students put into practice the contents and skills learned in the lectures and seminars by conducting a software testing project on a real-life application provided by the professors. This project is performed individually and involves the following test processes: test design and implementation, test environment set-up and maintenance, test execution, and test incident reporting. Laboratories are assessed through the continuous assessment of each student’s work and progress, which is worth 15% of the final grade, and the quality of the submitted assignments, which is worth 30% of the final grade. These assignments are the test design, the test suite created (along with the traceability between the test design and the test suite), the results of the test execution and the test incident reports.
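The four assessment weights given above add up to 100% of the final grade. As a minimal sketch, assuming that every component is graded on the same 0 to 10 scale used for the seminar exercises, the final grade is a weighted average of the components; the names `WEIGHTS` and `final_grade` are illustrative only.

```python
from typing import Dict

# Weights stated in Section 3.1 for each assessed component of the course.
WEIGHTS = {
    "exam": 0.35,             # lectures: theory exam
    "seminars": 0.20,         # test design reports + test suite effectiveness
    "lab_continuous": 0.15,   # continuous assessment of laboratory work
    "lab_assignments": 0.30,  # quality of the laboratory assignments
}


def final_grade(component_grades: Dict[str, float]) -> float:
    """Weighted average of the component grades.

    Assumption (not stated in the paper): every component is graded on a
    0-10 scale, so the final grade is also on a 0-10 scale.
    """
    # The weights must cover the whole final grade.
    assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9
    return sum(WEIGHTS[name] * component_grades[name] for name in WEIGHTS)


grades = {"exam": 6.0, "seminars": 8.0, "lab_continuous": 7.0, "lab_assignments": 9.0}
print(round(final_grade(grades), 2))  # prints 7.45
```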

Our experiment was conducted in the seminars, which consist of weekly one-academic-hour sessions.

Table 2. Programs to be tested in the seminar exercises: exercise number, program name, brief description of the program, number of injected defects and examples of some of the injected defects.

| Exercise | Program | Description | Number of injected defects | Examples of injected defects |
|----|----|----|----|----|
| 1 | Triangle classification | It finds the type of triangle based on the lengths of its sides. | 16 | - Length of side 2 + length of side 3 < length of side 1: the input is classified as a triangle. (For example, the input 4 2 1 is classified as a triangle.)<br><br>- Only two equal sides: the triangle is classified as equilateral. (For example, the input 2 2 3 is classified as equilateral.)<br><br>- Three equal letters introduced as the sides of the triangle: the input is classified as an equilateral triangle. (For example, the input a a a is classified as an equilateral triangle.) |
| 2 | On-line shop | It shows the products of a shop according to several search criteria that have to be fulfilled simultaneously. | 20 | - Products that do not fulfill the criterion “products whose description starts with ______” are shown when their description contains the searched value. (For example, when the criterion “products whose description starts with big” is established, a product whose description is “the book tells the story of Abigail” is shown.)<br><br>- Products whose price is equal to the value specified in the criterion “products whose minimum price is ______” are not shown. (For example, when the criterion “products whose minimum price is 10” is established, a product whose price is 10 is not shown.)<br><br>- The criterion “products whose ______” distinguishes upper-case text from lower-case text. (For example, when the criterion “products whose description contains iron” is established, a product whose description is “the superhero Iron Man fights against his enemies” is not shown.) |
| 3 | Spanish VAT return | It generates a new VAT return based on the information of previous VAT returns stored in a database and the taxable transactions of the corresponding period. | 15 | - Very small differences (cents of a euro) between output VAT and input VAT are not considered when calculating the VAT payable. (For example, when the difference between the output VAT and the input VAT is 0.01, the program uses the value 0 to calculate the VAT payable.)<br><br>- The reduction of the VAT payable stops when a previous period has a difference of 0 between output VAT and input VAT. (For example, the database stores the VAT returns of the first three quarters of 2022, and the differences between the output VAT and the input VAT are -100, 0 and -200, respectively. In the fourth quarter, the difference between the output VAT and the input VAT is 350; when the program calculates the VAT payable, it stops the reduction at the second quarter instead of using all three quarters.)<br><br>- Periods that are not allowed for VAT adjustments are used. (For example, the database stores the VAT returns of the four quarters of 2021 and 2022, all with a negative difference between the output VAT and the input VAT. For simplicity, we consider that only one year can be used to reduce the VAT payable. When the program calculates the VAT payable for the first quarter of 2023, it uses the four quarters of 2022 and the last quarter of 2021, instead of only the four quarters of 2022.) |
| 4 | Lab reservation clash | It checks whether the lab reservation a professor wants to make clashes with the existing reservations of other courses of the same university degree and academic year. | 11 | - When detecting a clash, the program does not check whether the course of the new reservation belongs to the same university degree as the existing reservations. (For example, a professor wants to make a lab reservation for the course “Software Verification and Validation” of the 4th year of the Software Engineering degree. On the same day and at the same time, there is a lab reservation for the course “Web Technologies” of the 4th year of the IT Engineering degree. The program does not check the university degree and determines that there is a clash, when in fact there is not.)<br><br>- Existing reservations for the same course as the new reservation produce a clash, although they should not. (For example, a professor wants to make a lab reservation for the course “Software Verification and Validation” of the 4th year of the Software Engineering degree. On the same day and at the same time, there is a lab reservation for the same course. The program does not exclude the existing lab reservations of the same course and determines that there is a clash, when in fact there is not. Note that a course can have several professors who conduct the lab classes of different groups simultaneously.)<br><br>- An existing reservation that finishes on the same day the new reservation starts does not produce a clash. (For example, a professor wants to make a lab reservation from 17:00 to 18:00, starting on January 16th, 2023. There is a reservation for another course of the same degree and year from 17:00 to 18:00 that finishes on January 16th, 2023. The program does not compare both dates correctly and determines that there is no clash, when in fact there is.) |
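The three defects listed for exercise 4 are easier to see against a correct clash check. The sketch below is one possible reading of the specification summarized in Table 2, not the professors' program: a clash requires a different course of the same degree and academic year whose date range overlaps the new one (compared inclusively) and whose time slot overlaps. The `Reservation` type, its fields, the assumption that a reservation applies to every day of its date range and the half-open treatment of time slots are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, time


@dataclass(frozen=True)
class Reservation:
    """Hypothetical lab reservation record; the field names are illustrative."""
    course: str
    degree: str
    year: int          # academic year of the course (4 = 4th year)
    first_day: date    # first day on which the reservation applies
    last_day: date     # last day on which the reservation applies
    start: time        # daily start time
    end: time          # daily end time


def clashes(new: Reservation, existing: Reservation) -> bool:
    """Assumed reading of the exercise 4 specification (not the original program)."""
    # Reservations of the same course never clash: several professors may
    # conduct the lab classes of different groups of a course simultaneously.
    if new.course == existing.course:
        return False
    # Only courses of the same degree and academic year can clash.
    if new.degree != existing.degree or new.year != existing.year:
        return False
    # Dates are compared inclusively, so a reservation that finishes on the day
    # the new one starts still overlaps with it. Assumption: a reservation
    # applies to every day of its date range.
    dates_overlap = new.first_day <= existing.last_day and existing.first_day <= new.last_day
    # Assumption: time slots are half-open, so 17:00-18:00 and 18:00-19:00 do not clash.
    times_overlap = new.start < existing.end and existing.start < new.end
    return dates_overlap and times_overlap


# Situations from Table 2; "Another SE course" is a hypothetical course name.
new = Reservation("Software Verification and Validation", "Software Engineering", 4,
                  date(2023, 1, 16), date(2023, 3, 31), time(17), time(18))
ending_same_day = Reservation("Another SE course", "Software Engineering", 4,
                              date(2022, 12, 1), date(2023, 1, 16), time(17), time(18))
other_degree = Reservation("Web Technologies", "IT Engineering", 4,
                           date(2023, 1, 16), date(2023, 3, 31), time(17), time(18))
same_course = Reservation("Software Verification and Validation", "Software Engineering", 4,
                          date(2023, 1, 16), date(2023, 3, 31), time(17), time(18))

print(clashes(new, ending_same_day))  # True
print(clashes(new, other_degree))     # False
print(clashes(new, same_course))      # False
```

Each of the three defects in Table 2 corresponds to weakening one of the three checks in `clashes`.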

3.2 Participants

The controlled experiment involved one experimental group and one control group. The experimental group consists of the students who voluntarily took part in the gamification experience carried out during the seminars in the academic year 2020-2021. There were 135 participating students (98% in the 18-28 age range and 2% in the 28-38 age range), which corresponds to 94% of the students enrolled in the course.

This study was approved by the Responsible Research and Innovation Subcommittee of the Research Ethics Committee of the University of Oviedo and complies with ethical principles and Spanish legislation. The main ethical aspects of this study were the participants’ informed consent and the protection of their personal data. All participants were informed about the experiment, the data to be collected and how these data would be treated. They gave their consent before entering the study and answering a demographic questionnaire. The participants were informed that their participation in the experiment was voluntary and that the decision to participate, not to participate or to discontinue participation would not have any consequences, academic or otherwise.

The control group consists of the 100 students who took the same course in the academic year 2019-2020, when the seminar activities were not gamified. Their age range is similar to that of the experimental group, but precise age data are not available because the control group did not answer a demographic questionnaire.

All seminar sessions, in both the experimental and control groups, were conducted by the same professor, who has experience in software testing teaching and research.

3.3 Materials

The materials used in this experiment are the programs to be tested, provided to the students as learning materials, and the software applications SQLTest and GoRace, which were integrated to support the gamification experience and are used by the students to carry out the learning activities. The following subsections describe them in more detail.

3.3.1 Programs to be tested

As mentioned before, the experiment was conducted in the seminar classes, where the students work on a sequence of four exercises (see Table 2) to apply their knowledge and develop their skills as software testers. A critical resource for these exercises is, therefore, the program that each testing exercise focuses on.

For each program, the following materials are created and provided by the professors:

Program specification. It describes the program functionality. The students are provided with the program specification, as prepared by the professors, and use it as the body of knowledge from which the test suites are created. Table 2 of Section 3.1 provides a brief functional description of each of these programs.

Defects. For each program, the professors manually create a set of significant defects that are tailored to the particular program functionality and that commonly appear during software development. Each defect produces a different failure. Initially, the students do not know these defects. Table 2 of Section 3.1 indicates the number of defects created for each program, as well as some examples of these defects.

Program implementations. For each program, the professors create several independent implementations of the program specification, called versions. One of these versions implements the program specification correctly and is called the original version. The remaining versions are faulty and are called mutants. Each mutant is a correct implementation modified to contain exactly one of the defects injected manually by the professors. Therefore, each program has as many mutants as defects were created for it. The source code of the implementations is not available to the students.
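To make the relationship between the original version and its mutants concrete, the following sketch uses the triangle classification program of exercise 1. The classification rules, the output strings and the function names are assumptions, since the program specification is not reproduced in the paper; each hypothetical mutant injects exactly one of the defects listed in Table 2 for that exercise.

```python
from typing import List, Optional


def parse_sides(a: str, b: str, c: str) -> Optional[List[int]]:
    """Side lengths as positive integers, or None if any input is not one."""
    try:
        sides = [int(a), int(b), int(c)]
    except ValueError:
        return None
    return sides if min(sides) > 0 else None


def classify_shape(sides: List[int]) -> str:
    """Type of a valid triangle according to its number of equal sides."""
    x, y, z = sorted(sides)
    if x == y == z:
        return "equilateral"
    if x == y or y == z:           # after sorting, equal sides are adjacent
        return "isosceles"
    return "scalene"


def original(a: str, b: str, c: str) -> str:
    """Assumed correct version of the triangle classification program."""
    sides = parse_sides(a, b, c)
    if sides is None:
        return "invalid input"
    x, y, z = sorted(sides)
    if x + y <= z:                 # triangle inequality on the longest side
        return "not a triangle"
    return classify_shape(sides)


def mutant_1(a: str, b: str, c: str) -> str:
    """Defect: side 2 + side 3 < side 1 is still classified as a triangle."""
    sides = parse_sides(a, b, c)
    if sides is None:
        return "invalid input"
    s1, s2, s3 = sides
    if s1 + s2 <= s3 or s1 + s3 <= s2:   # the check s2 + s3 <= s1 is missing
        return "not a triangle"
    return classify_shape(sides)


def mutant_2(a: str, b: str, c: str) -> str:
    """Defect: a triangle with only two equal sides is classified as equilateral."""
    sides = parse_sides(a, b, c)
    if sides is None:
        return "invalid input"
    x, y, z = sorted(sides)
    if x + y <= z:
        return "not a triangle"
    if x == y or y == z:           # should require x == y == z
        return "equilateral"
    return "scalene"


def mutant_3(a: str, b: str, c: str) -> str:
    """Defect: three equal inputs are accepted as equilateral before validation."""
    if a == b == c:                # letters such as "a a a" are never rejected
        return "equilateral"
    return original(a, b, c)


for args in [("4", "2", "1"), ("2", "2", "3"), ("a", "a", "a")]:
    print(args, original(*args), mutant_1(*args), mutant_2(*args), mutant_3(*args))
```

Each of the three example inputs from Table 2 makes exactly one mutant disagree with the original version, which is what a test suite must achieve to detect that defect.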

3.3.2 SQLTest

SQLTest is a software tool developed by the Software Engineering Research Group of the University of Oviedo. SQLTest allows the students to execute their test suites and evaluate their effectiveness against the program implementations previously loaded by the professors. SQLTest was initially created to test SQL queries, hence its name. It was later extended to test programs in general, but its original name was kept.

For each exercise, SQLTest embeds all the implementations of the program to be tested, that is, the original version and the mutants. When a student submits a test suite for a particular exercise, SQLTest internally executes this test suite against the original version and all the mutants and compares the outputs obtained in each execution. If the output of the original version differs from the output of a mutant, the defect injected in that mutant has been detected by the student’s test suite.

SQLTest determines the test suite effectiveness as the percentage of detected defects over the total number of defects to be detected, that is, the number of mutants. SQLTest then gives feedback to the student, indicating the test suite effectiveness together with a description of any defects that have not yet been detected.
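The comparison performed by SQLTest can be sketched as a small mutation-testing harness: run every test input against the original version and each mutant, mark a defect as detected when any output differs, and report the percentage of detected defects together with the descriptions of those still undetected. This is an illustrative sketch, not SQLTest's code; the function `evaluate_suite`, the representation of programs as Python callables and of defect descriptions as dictionary keys, and the second example defect are assumptions.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Program = Callable[..., object]


def evaluate_suite(test_inputs: Sequence[tuple], original: Program,
                   mutants: Dict[str, Program]) -> Tuple[float, List[str]]:
    """Return (effectiveness in %, descriptions of the defects not yet detected).

    Each key of `mutants` describes the single defect injected in that mutant.
    """
    if not mutants:
        raise ValueError("at least one mutant is required")
    undetected = []
    for description, mutant in mutants.items():
        # A defect is detected when at least one test input makes the mutant's
        # output differ from the output of the original version.
        detected = any(mutant(*inputs) != original(*inputs) for inputs in test_inputs)
        if not detected:
            undetected.append(description)
    effectiveness = 100.0 * (len(mutants) - len(undetected)) / len(mutants)
    return effectiveness, undetected


# Hypothetical example based on the "minimum price" criterion of exercise 2.
def original_filter(price: float, min_price: float) -> bool:
    return price >= min_price  # products at exactly the minimum price are shown


mutants = {
    "Products whose price equals the minimum price are not shown":
        lambda price, min_price: price > min_price,
    "The minimum price criterion is ignored (hypothetical defect)":
        lambda price, min_price: True,
}

print(evaluate_suite([(15, 10)], original_filter, mutants))
print(evaluate_suite([(15, 10), (10, 10), (5, 10)], original_filter, mutants))
```

The single input (15, 10) detects neither defect (0% effectiveness), whereas adding the boundary value (10, 10) and a price below the minimum, (5, 10), detects both (100%).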

3.3.3 GoRace

GoRace is a multi-context, narrative-based gamification tool developed by the Software Process Improvement and Formal Methods Research Group of the University of Cadiz. GoRace allows the user to automatically create a tailored web solution that gamifies the use of any third-party tool that can be easily integrated with it through a REST API.

In a GoRace experience, the participants are immersed in a virtual world based on Greek mythology, where they take part in an Olympic race decreed by Zeus to commemorate his victory over his father Cronus. The prize for those who reach the finish line of this legendary race is immortality. To provide a meaningful gamified experience for the different types of players, GoRace implements not only well-known and widely used game elements, such as points and leaderboards, but also a comprehensive range of other game elements: a) game dynamics, such as sense of progress, sense of competence, interaction, narrative and socialization; b) game mechanics, such as challenges, effort, participation, strategies and encouragement; and c) game components, such as results, evolution, classification, points and gifts. In total, GoRace implements 131 of the 229 game elements identified by Peixoto and Silva in their systematic literature review (Peixoto & Silva, 2017). More information about the game elements implemented in GoRace can be found in (Trinidad et al., 2021).

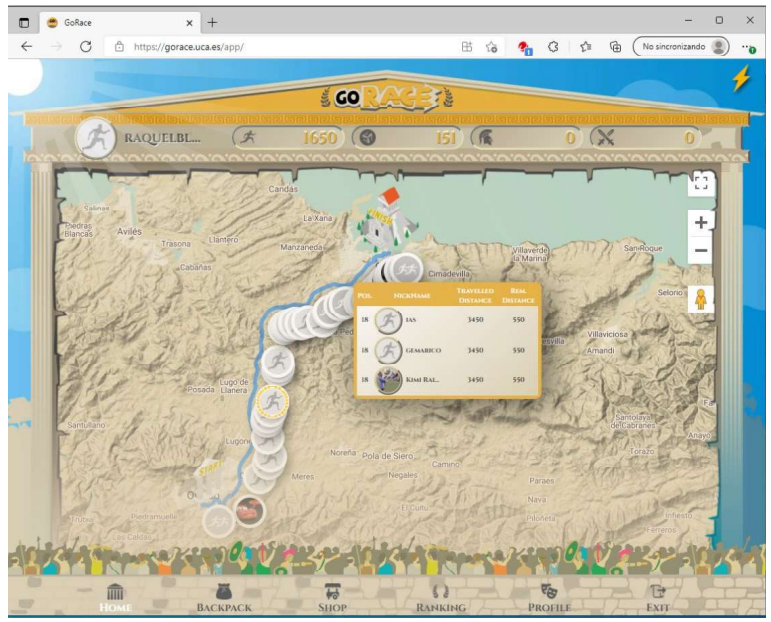

An Olympic race in GoRace takes place between two locations chosen when the gamified experience is created. In the experiment described in this paper, the School of Computer Engineering in Oviedo, where the students attend their classes, was selected as the starting point, and the beach of Gijón, where the students enjoy their leisure time, was selected as the finish line. The participants in the race need to cover the distance between these two places, which is measured in distance units. Figure 1 shows a screenshot of GoRace displaying the map of the Olympic race. As can be seen, a blue line shows the route of the race, and the participants’ avatars are placed along it according to their position in the race.

During the race, the participants can obtain divine points, a type of virtual currency that they can use to purchase powerful goods called Relics. Once a Relic has been purchased, the participant needs to decide the most suitable moment to use its powers. Relics have powers with positive or negative consequences, such as distance boosts that advance the player a specific number of distance units in the race, attacks that decrease the distance units achieved by other players, or protections that neutralize an attack or reflect it back onto the attacker.

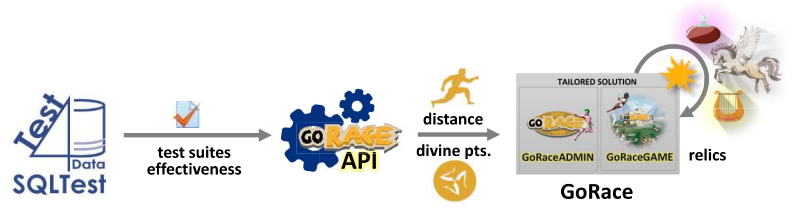

3.3.4 Integration of SQLTest and GoRace

In the gamification strategy implemented in GoRace for this experiment, both the distance units traveled in the race and the divine points obtained by the students are based on the effectiveness of their test suites as determined by SQLTest: the higher the effectiveness, the more distance units and divine points the student is rewarded with. Both distance units and divine points are positive rewards intended to motivate the students to work on the seminars; negative rewards were not used in the experiment.

SQLTest and GoRace were integrated by means of the API provided by GoRace. Through this API, SQLTest sends GoRace the identifiers of the exercise and the student, along with the effectiveness of the executed test suite and the timestamp of the execution. GoRace then calculates the distance units and divine points to be awarded to the student as a function of the effectiveness reported by SQLTest, and updates the information shown on the application screens, such as the map in Figure 1. The interaction between SQLTest and GoRace is depicted in Figure 2.
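The data exchanged after each execution can be pictured as a small JSON document carrying the four items listed above. The sketch below only builds and serializes such a message; the field names, the ISO 8601 timestamp format, the `ExecutionReport` and `build_report` names and the idea of sending it as a JSON body are assumptions, because the paper does not document GoRace's REST API.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ExecutionReport:
    """Hypothetical payload SQLTest could send to GoRace after each execution."""
    exercise_id: int
    student_id: str
    effectiveness: float   # percentage of detected defects, 0-100
    executed_at: str       # timestamp of the execution (ISO 8601, assumed)


def build_report(exercise_id: int, student_id: str, effectiveness: float,
                 executed_at: datetime) -> str:
    """Validate the effectiveness and serialize the report as JSON."""
    if not 0.0 <= effectiveness <= 100.0:
        raise ValueError("effectiveness must be a percentage between 0 and 100")
    report = ExecutionReport(exercise_id, student_id, effectiveness,
                             executed_at.isoformat())
    return json.dumps(asdict(report))


payload = build_report(2, "student-042", 75.0,
                       datetime(2021, 3, 1, 18, 30, tzinfo=timezone.utc))
print(payload)
```

Keeping the reward calculation on the GoRace side, as the paper describes, means SQLTest only has to report raw effectiveness and never needs to know the game rules.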

3.4 Procedure

In our experiment, the Olympic race consists of the four exercises presented in Section 3.1 (see Table 2), carried out in sequence. In the first seminar session, the gamification experience, the Olympic race and the tools SQLTest and GoRace were introduced to the students, and the instructions for carrying out the exercises were explained. The students were informed about the experiment, the treatment of the data to be collected and the voluntary nature of their participation. After that, they answered the demographic questionnaire.

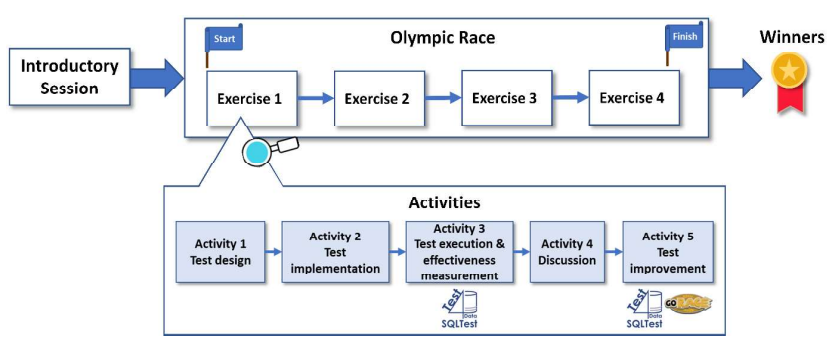

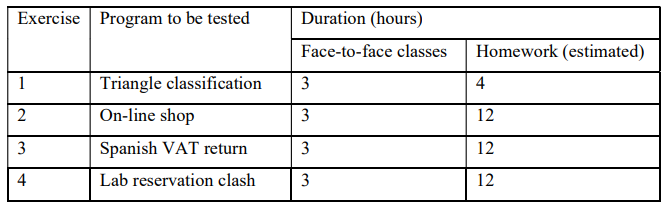

The remaining seminar sessions and the homework made up the Olympic race, which covered 4000 distance units over 14 weeks (Table 3 shows the duration of each exercise in hours). The race started with the first exercise, and the subsequent exercises allowed the students to progress further. In each exercise, the test suite effectiveness achieved could yield at most 1000 distance units and at most 90 divine points. The race finished together with the fourth exercise. Figure 3 depicts the procedure followed in the seminars and the activities involved in each exercise.
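The paper states only the per-exercise caps (1000 distance units and 90 divine points) and that rewards grow with effectiveness; GoRace's actual formula is not given. Assuming a linear mapping from effectiveness to both rewards and ignoring the effect of Relics, the sketch below shows that four exercises at full effectiveness add up exactly to the 4000 distance units of the race. The function `rewards` and the rounding rule are hypothetical.

```python
from typing import Tuple

MAX_DISTANCE_PER_EXERCISE = 1000   # stated in Section 3.4
MAX_POINTS_PER_EXERCISE = 90       # stated in Section 3.4
NUM_EXERCISES = 4


def rewards(effectiveness: float) -> Tuple[int, int]:
    """Distance units and divine points earned in one exercise.

    Assumption: both rewards grow linearly with effectiveness (0-100 %)
    and are rounded to whole units.
    """
    share = max(0.0, min(effectiveness, 100.0)) / 100.0
    return (round(MAX_DISTANCE_PER_EXERCISE * share),
            round(MAX_POINTS_PER_EXERCISE * share))


# A student who reaches 100 % in every exercise covers the whole race
# (ignoring Relics, which can add or remove distance units).
total_distance = sum(rewards(100.0)[0] for _ in range(NUM_EXERCISES))
print(total_distance)   # 4000
print(rewards(75.0))    # (750, 68)
```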

Each exercise is composed of the following activities:

Test design: (one class session) the professor introduces the specification of the program to be tested, and after that the students design the tests, applying the testing techniques explained in the lecture classes. The students work in teams in this activity.

Test implementation: (one class session) the teams create the test cases that constitute their test suites, applying the strategies explained in the lecture classes to determine the test input of each test case.

Test execution and effectiveness measurement: (two days, homework) each student individually provides SQLTest with the test suite and executes it to measure the effectiveness achieved. SQLTest shows the student the test suite effectiveness, along with a description of the defects that have not yet been detected. This information constitutes valuable feedback that can be used to improve the test suite.

Discussion: (one class session) the professor and the students present several alternatives for the test design and implementation of the exercise and discuss the effectiveness of these alternatives.

Test improvement: (two weeks, homework) the students individually use the feedback given in the discussion activity and the feedback provided by SQLTest to improve their test suites (they can add, remove and modify test cases of their test suites in SQLTest). Whenever a test suite has been modified, the student can execute it in SQLTest to measure the new effectiveness achieved and receive new feedback. After each execution, SQLTest automatically sends the effectiveness to GoRace in order to update the distance units traveled in the Olympic race and the divine points. The students use GoRace to check the distance units and divine points they have reached, to check the race ranking, to buy Relics that give them some benefits and to interact with other players (attacking other players or protecting themselves).

The ability to apply testing techniques to design tests that give rise to effective test suites, as well as the ability to determine the test inputs, are crucial for obtaining as many distance units and divine points as possible at the beginning of the test improvement activity of each exercise. During the test improvement activity, the abilities to understand the defects and to determine the test inputs yield the additional distance units and divine points that the students accumulate in each exercise.

The exercises were evaluated by the professor, and the students received a grade on a scale from 0 to 10. In addition, the 30% of the students who achieved the highest positions in the race ranking were rewarded with additional points, ranging from 0.5 to 1.5 points.

The procedure followed by the students in the control group was similar to the procedure described above. The first seminar session addressed the same issues, except those related to the experiment and the demographic questionnaire. During the remaining 14 weeks, they worked on the same sequence of four exercises, carried out the same activities in each exercise and used the same materials, except GoRace.

3.5 Metrics

The main objective of our gamification experience is to increase the students’ engagement in the test improvement activity, so that they become more motivated to use SQLTest to improve their test suites. A related objective is to increase the students’ performance in creating test suites.

To measure the engagement of the students, we considered the proposal of Fredricks et al. (2004), which divides the engagement concept into three facets: behavioral, emotional and cognitive. In particular, we focused on the behavioral engagement facet, which is based on the idea of the student’s participation and involvement in academic and extracurricular activities. For the purpose of this study, we defined four metrics that measure the students’ academic participation in terms of their interaction with SQLTest (the first two metrics) and with the exercises (the last two metrics):

- Number of Test Suite Executions: the number of times a student executes a test suite in the test improvement activity of each exercise in order to determine its effectiveness.

- Active Time: the number of days on which a student interacts with SQLTest by executing at least one test suite in the test improvement activity of each exercise. This metric complements the previous one: together, they measure the degree of interaction achieved by the students during the days they were actively working on the test improvement activity.

- Participation Rate: the proportion of students who work on all activities of each exercise, including the test improvement activity.

- Dropout Rate: for each exercise, the proportion of students who do not work on that exercise and abandon the remaining seminar exercises at that point, over the number of students who worked on the previous exercise.
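Under a few assumptions, the four behavioral engagement metrics can be computed from a log of SQLTest executions and a record of which students worked on each exercise. In the sketch below, the log format (student, exercise, timestamp), the use of calendar days for Active Time, the use of the whole group as the denominator of the Participation Rate, the student names and the function names are all hypothetical.

```python
from collections import defaultdict
from datetime import date, datetime
from typing import Dict, List, Set, Tuple

Execution = Tuple[str, int, datetime]   # (student, exercise, timestamp)


def executions_and_active_time(log: List[Execution], exercise: int) -> Dict[str, Tuple[int, int]]:
    """Per student: (Number of Test Suite Executions, Active Time in days)."""
    runs: Dict[str, int] = defaultdict(int)
    days: Dict[str, Set[date]] = defaultdict(set)
    for student, ex, timestamp in log:
        if ex == exercise:
            runs[student] += 1
            days[student].add(timestamp.date())  # several runs on one day count once
    return {student: (runs[student], len(days[student])) for student in runs}


def participation_rate(worked: Dict[int, Set[str]], exercise: int, group_size: int) -> float:
    """Share of the group that worked on all activities of the exercise.

    Assumption: the denominator is the number of students in the group.
    """
    return len(worked[exercise]) / group_size


def dropout_rate(worked: Dict[int, Set[str]], exercise: int) -> float:
    """Students of the previous exercise who work on neither this one nor any later one."""
    if exercise - 1 not in worked:
        raise ValueError("dropout is only defined from the second exercise on")
    previous = worked[exercise - 1]
    from_now_on = set().union(*(students for ex, students in worked.items() if ex >= exercise))
    return len(previous - from_now_on) / len(previous)


log = [
    ("ana", 1, datetime(2021, 2, 10, 9, 0)),
    ("ana", 1, datetime(2021, 2, 10, 18, 0)),
    ("ana", 1, datetime(2021, 2, 12, 11, 0)),
    ("ben", 1, datetime(2021, 2, 11, 20, 0)),
]
worked = {1: {"ana", "ben", "eva"}, 2: {"ana", "eva"}, 3: {"ana"}, 4: {"ana"}}

print(executions_and_active_time(log, 1))           # {'ana': (3, 2), 'ben': (1, 1)}
print(participation_rate(worked, 2, group_size=4))  # 0.5
print(dropout_rate(worked, 3))                      # 0.5
```

In this toy data, "ben" drops out at exercise 2 and "eva" at exercise 3, so each of them is counted only once, at the exercise where they abandon the seminars.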

To measure the performance of students, we used two metrics related to the defect-finding ability of the test suite, which indicate its quality:

- Effectiveness: It is the percentage of defects detected by the test suite of a student over the total number of defects to be detected (that is, the mutation score).

- Effectiveness Increase: It is the relative effectiveness increment of the test suite of a student in the test improvement activity of each exercise over their particular margin of improvement.
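Reading the "margin of improvement" as the effectiveness still missing at the start of the test improvement activity (100% minus the initial effectiveness), the two performance metrics reduce to the short functions below. This interpretation, the use of the first and last executions as the initial and final suites, and the choice to report an increase of 0 when a student already starts at 100% are assumptions rather than definitions taken from the paper.

```python
def effectiveness(detected: int, total_defects: int) -> float:
    """Mutation score: percentage of injected defects detected by the test suite."""
    return 100.0 * detected / total_defects


def effectiveness_increase(initial: float, final: float) -> float:
    """Relative increase over the student's margin of improvement, as a percentage.

    Assumption: `initial` is the effectiveness of the first suite executed in
    the test improvement activity and `final` that of the last one.
    """
    margin = 100.0 - initial
    if margin == 0.0:
        return 0.0  # assumption: no margin left, so no increase is possible
    return 100.0 * (final - initial) / margin


# Exercise 1 has 16 injected defects: a suite that first detects 12 and,
# after the test improvement activity, detects 14 closes half of its margin.
start, end = effectiveness(12, 16), effectiveness(14, 16)
print(start, end, effectiveness_increase(start, end))  # 75.0 87.5 50.0
```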

For each metric, we collected data for each individual exercise in the experimental and control groups, in order to analyze each exercise separately. We also accumulated the data of the four exercises to analyze the experience as a whole.

:::info This paper is available on arXiv under CC BY 4.0 DEED license.

:::

All Rights Reserved. Copyright 2025, Central Coast Communications, Inc.