
DM Television

Rollups – Layer 2 Scaling | Is it the Next Big Thing?

In the 2017 crypto bull market, the debate over Ethereum’s network congestion heated up. The infamous CryptoKitties game, together with the ICO boom, clogged the entire Ethereum network and caused a significant spike in gas fees. In 2020, the rise of DeFi and yield farming brought even more severe congestion: gas prices of 500+ Gwei were at times not enough to get transactions included in a block. This is where the scalability of Ethereum comes into question.

What is scalability?

Scalability refers to a blockchain network’s ability to support high transaction throughput and future growth. More technically, a system is scalable if its throughput (the number of transactions it can process per second) and latency (the time taken for a transaction to be processed) can be improved superlinearly relative to the cost of running the system.

Approaches to scaling Ethereum

When it comes to scaling Ethereum, or any other blockchain in general, there are two major approaches:

- By scaling the base layer (Layer 1) itself, or

- By offloading some of the work to another layer (Layer 2).

Layer 1 is the base consensus layer where practically all transactions are currently settled. Ethereum, Bitcoin, and Litecoin, for example, are Layer 1 blockchains.

Layer 1 can be made more scalable by:

- Increasing the amount of data contained in each block, or

- By increasing the rate at which blocks are confirmed.
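A rough way to see why these two levers matter is the basic throughput relation below, where N_tx is the average number of transactions per block and T_block is the average time between blocks in seconds. It is an idealized model that ignores block propagation delays and other real-world limits, and the example numbers are hypothetical:

```latex
\text{TPS} = \frac{N_{\text{tx}}}{T_{\text{block}}}
\qquad\text{e.g.}\qquad
\frac{150}{10\,\text{s}} = 15,\quad
\frac{300}{10\,\text{s}} = 30,\quad
\frac{150}{5\,\text{s}} = 30
```

In this model, doubling the data per block or halving the block interval each doubles throughput.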

One way to make Layer 1 more scalable is to replace Proof of Work (PoW) with Proof of Stake (PoS). PoW requires miners to solve computationally expensive cryptographic puzzles; although PoW is secure, it is comparatively slow. PoS systems, by contrast, produce and validate new blocks based on the collateral that network participants have staked. Under PoW, the puzzle difficulty is tuned to limit how often new blocks can be produced: for example, a Bitcoin (BTC) block is mined on average every 10 minutes. PoS removes this computational bottleneck, although PoS chains still set a target block time.
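To make “solving a cryptographic puzzle” concrete, here is a toy proof-of-work sketch. It is not Bitcoin’s actual scheme (Bitcoin applies double SHA-256 to an 80-byte block header and compares the result against a numeric target); the functions `mine` and `verify` and the “leading hex zeros” difficulty rule are simplifications chosen for illustration.

```python
import hashlib


def _digest(block_data: bytes, nonce: int) -> str:
    # Hash the block contents together with a candidate nonce.
    return hashlib.sha256(block_data + nonce.to_bytes(8, "big")).hexdigest()


def mine(block_data: bytes, difficulty: int) -> tuple[int, str]:
    """Toy PoW: brute-force a nonce whose SHA-256 digest starts with
    `difficulty` hex zeros. Expected work grows 16x per extra zero."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = _digest(block_data, nonce)
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1


def verify(block_data: bytes, nonce: int, difficulty: int) -> bool:
    # Checking a solution costs a single hash, however long mining took.
    return _digest(block_data, nonce).startswith("0" * difficulty)


if __name__ == "__main__":
    nonce, digest = mine(b"example block", difficulty=4)
    print(nonce, digest, verify(b"example block", nonce, 4))
```

The asymmetry is the point: finding a valid nonce takes many hash attempts, while anyone can verify it with one hash. Raising the difficulty is how a PoW chain throttles its block rate.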

Another approach is sharding, in which the state of the entire blockchain network is split into distinct datasets called “shards”. Each shard can be processed by a subset of nodes in parallel, which makes maintaining the network a more manageable task. It is crucial that shards have a way to communicate with each other, because a user of the network should be able to access all the information stored in the blockchain.
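As a minimal illustration of splitting state into shards, the sketch below assigns each account to a shard by hashing its address. This hash-modulo scheme, the shard count of 64, and the helper names `shard_for`, `partition`, and `is_cross_shard` are hypothetical; they are not Ethereum’s actual sharding design.

```python
import hashlib

NUM_SHARDS = 64  # hypothetical shard count, chosen for illustration


def shard_for(address: str, num_shards: int = NUM_SHARDS) -> int:
    """Deterministically map an account address to a shard index."""
    digest = hashlib.sha256(address.lower().encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(addresses: list[str], num_shards: int = NUM_SHARDS) -> dict[int, list[str]]:
    """Group accounts by shard; each group could be maintained by different nodes."""
    shards: dict[int, list[str]] = {}
    for addr in addresses:
        shards.setdefault(shard_for(addr, num_shards), []).append(addr)
    return shards


def is_cross_shard(sender: str, recipient: str, num_shards: int = NUM_SHARDS) -> bool:
    """A transfer between accounts on different shards needs cross-shard messaging."""
    return shard_for(sender, num_shards) != shard_for(recipient, num_shards)
```

Because the mapping is deterministic, every node agrees on which shard holds an account without any coordination. `is_cross_shard` flags exactly the transfers that rely on the inter-shard communication described above.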

What is Layer 2?

Layer 2 is built on top of Layer 1. “Layer 2” is a collective term for solutions that help scale the Ethereum network by handling transactions off the Ethereum mainnet (Layer 1). A few key points to note:

- Layer 2 doesn’t require any changes to Layer 1. It builds on top of Layer 1 using existing elements, such as smart contracts.

- Layer 2 also inherits the security of Layer 1 by anchoring its state to Layer 1.

- Ethereum can process about 15 transactions per second on its base layer, whereas Layer 2 scaling solutions can raise this to roughly 2,000–4,000 transactions per second.

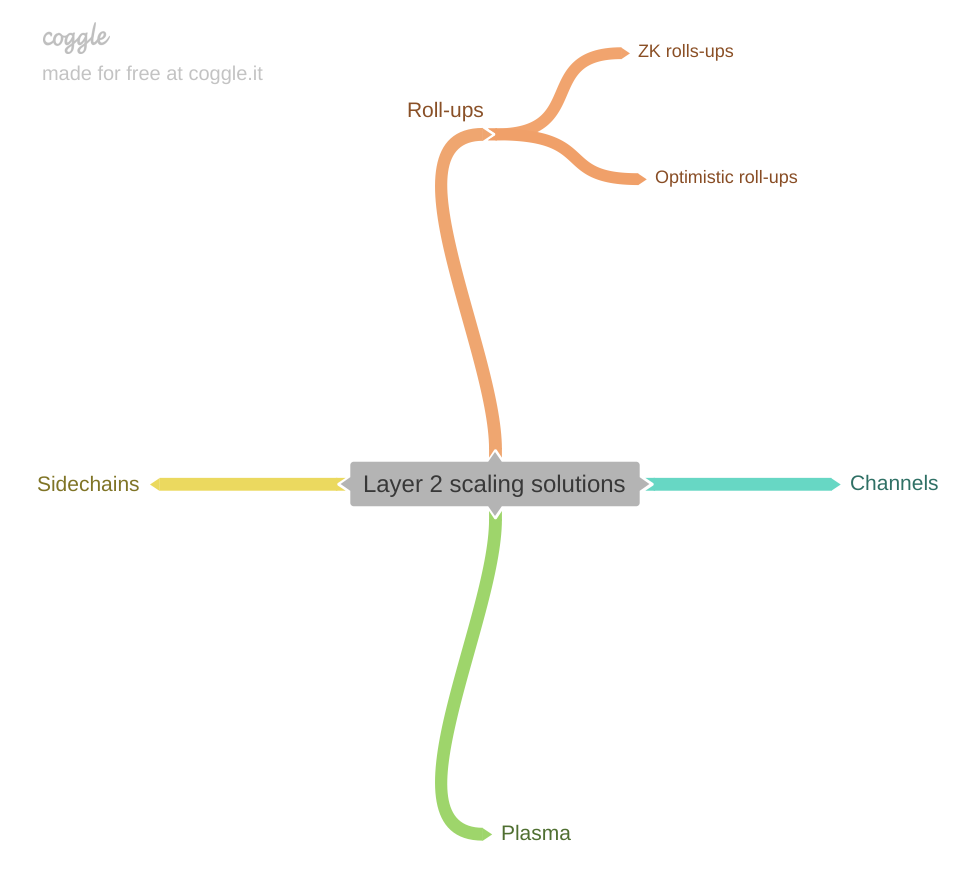

Several scaling solutions exist, as shown in the mind map below. However, the Ethereum community is converging mainly on scaling through rollups and Ethereum 2.0 phase 1 data sharding.

Various scaling solutions for Layer 2


The main focus of this article is rollups.

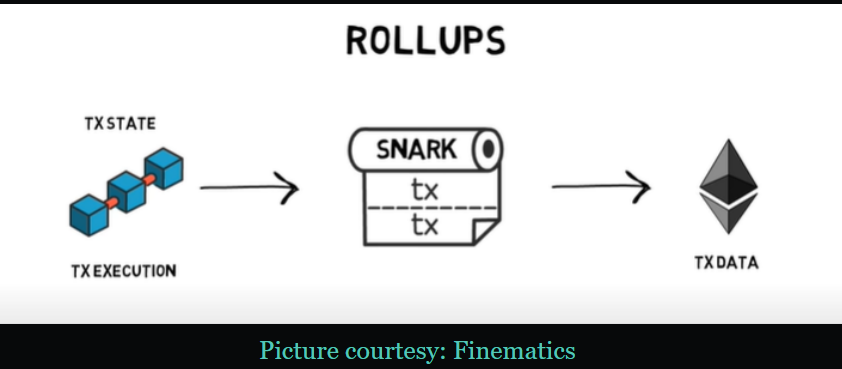

A rollup is a scaling solution in which transactions are executed outside Layer 1, but the transaction data is posted to Layer 1.

The load on Layer 1 is reduced because the computation is done off-chain. This allows many more transactions to be processed, since only their compressed data, rather than their execution, has to fit into Ethereum blocks. In effect, a separate chain is formed on which transactions are processed by a rollup-specific EVM; these transactions are then bundled up and posted to the Ethereum chain.

The process can be summarized in the following steps:

- Execute transactions off-chain.

- Take the transaction data.

- Compress the transaction data.

- Roll the transactions into a single batch.

- Post them to the Ethereum main chain.
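The five steps above can be sketched as a toy pipeline. Everything here is a hypothetical simplification: balances live in a plain dict, JSON plus `zlib` stands in for a rollup’s real encoding and compression (production rollups typically use tighter, purpose-built formats), a SHA-256 hash of the state stands in for a Merkle root, and a Python list stands in for Ethereum calldata.

```python
import hashlib
import json
import zlib
from dataclasses import dataclass


@dataclass
class Tx:
    sender: str
    recipient: str
    amount: int


def state_root(balances: dict[str, int]) -> str:
    # Commitment to the whole state; real rollups use a Merkle root instead.
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()


def execute_off_chain(balances: dict[str, int], txs: list[Tx]) -> list[Tx]:
    """Step 1: apply transactions to the rollup state (mutated in place),
    dropping any that are non-positive or overdraw the sender."""
    applied = []
    for tx in txs:
        if tx.amount > 0 and balances.get(tx.sender, 0) >= tx.amount:
            balances[tx.sender] -= tx.amount
            balances[tx.recipient] = balances.get(tx.recipient, 0) + tx.amount
            applied.append(tx)
    return applied


def build_batch(applied: list[Tx], balances: dict[str, int]) -> dict:
    """Steps 2-4: take the transaction data, compress it, roll it into one batch."""
    raw = json.dumps([[t.sender, t.recipient, t.amount] for t in applied]).encode()
    return {
        "tx_data": zlib.compress(raw),
        "post_state_root": state_root(balances),
        "tx_count": len(applied),
    }


# Step 5: a list stands in for the Ethereum main chain (hypothetical).
L1_CHAIN: list[dict] = []


def post_to_l1(batch: dict) -> int:
    L1_CHAIN.append(batch)
    return len(L1_CHAIN) - 1  # position of the batch on the simulated chain


if __name__ == "__main__":
    balances = {"alice": 100, "bob": 20}
    txs = [Tx("alice", "bob", 30), Tx("bob", "carol", 500), Tx("bob", "carol", 10)]
    applied = execute_off_chain(balances, txs)  # the 500-unit transfer is dropped
    index = post_to_l1(build_batch(applied, balances))
    print(index, balances)
```

Only the compressed transaction data and the new state root reach the simulated Layer 1; the execution itself never does, which is where the savings come from.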

A question arises here: “How can one verify that the data has not been tampered with, or that it was not submitted by a malicious user?” We will look at the answer by exploring the two types of rollups: Optimistic Rollups and ZK Rollups.

For optimistic rollups, fraud proofs ensure validity, while for ZK rollups, validity is confirmed by zero-knowledge proofs.

Optimistic Rollups

Optimistic rollups were one of the most anticipated scaling solutions of the 2019–2021 era, and for good reason: they are the outcome of a multi-year research effort in the Ethereum community. The goal of optimistic rollups is to decrease latency and increase transaction throughput, thereby reducing gas fees.

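If the transactions posted by the aggregators are valid, no proof is required; a proof is needed only in case of fraud. Hence, an optimistic rollup does no additional work when the data is honest. This is where the name comes from: batches are optimistically assumed to be valid unless someone challenges them within a dispute period.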

There may be situations where invalid transactions are submitted; in that case, the system must identify them, recover the correct state, and penalize the party that submitted them. To achieve this, optimistic rollups implement a dispute resolution system that verifies fraud proofs, detects fraudulent transactions, and disincentivizes bad actors from submitting further invalid transactions.
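Here is a minimal sketch of such a dispute system, assuming the simplest possible fraud proof: a challenger re-executes the whole batch and compares state roots. Real systems differ (some narrow a dispute down to a single disputed step, for example). The names `Assertion`, `challenge`, `finalize`, the 100-block `CHALLENGE_WINDOW`, and the rule that the entire bond goes to the challenger are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

CHALLENGE_WINDOW = 100  # hypothetical dispute period, in L1 blocks


def root(state: dict[str, int]) -> str:
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def apply_batch(state: dict[str, int], txs: list[tuple[str, str, int]]) -> dict[str, int]:
    """Deterministic state transition that any verifier can re-run."""
    new_state = dict(state)
    for sender, recipient, amount in txs:
        if amount > 0 and new_state.get(sender, 0) >= amount:
            new_state[sender] -= amount
            new_state[recipient] = new_state.get(recipient, 0) + amount
    return new_state


@dataclass
class Assertion:
    aggregator: str
    pre_state: dict[str, int]
    txs: list[tuple[str, str, int]]
    claimed_root: str  # post-state root the aggregator claims, unproven
    posted_at: int     # L1 block number at which the batch was posted
    bond: int          # collateral forfeited if fraud is proven
    status: str = "pending"


def challenge(a: Assertion, now: int, bonds: dict[str, int], challenger: str) -> bool:
    """Check a fraud proof by re-execution. Returns True if fraud is proven."""
    if a.status != "pending" or now > a.posted_at + CHALLENGE_WINDOW:
        return False  # too late, or already resolved
    if root(apply_batch(a.pre_state, a.txs)) == a.claimed_root:
        return False  # aggregator was honest; the challenge fails
    a.status = "rejected"          # discard the batch, keep the last correct state
    bonds[a.aggregator] -= a.bond  # penalize the dishonest aggregator
    # Hypothetical reward rule: the whole bond goes to the challenger.
    bonds[challenger] = bonds.get(challenger, 0) + a.bond
    return True


def finalize(a: Assertion, now: int) -> bool:
    """An unchallenged batch becomes final once the window has passed."""
    if a.status == "pending" and now > a.posted_at + CHALLENGE_WINDOW:
        a.status = "final"
    return a.status == "final"
```

Honest batches cost nothing beyond posting them; verification work happens only when someone disputes a batch, which mirrors the “proof only in case of fraud” property described above.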

One of the main projects implementing optimistic Rollup is “Optimism”.

This is a basic overview of optimistic rollups. I will go into more depth in upcoming articles; for this article, I am keeping it short and precise.

ZK Rollups

ZK rollups aim to reduce computation and storage costs. In a ZK rollup, a succinct zero-knowledge proof (a zk-SNARK) is generated for every state transition and verified by the rollup contract on the main chain. This proof shows that a batch of transactions was correctly signed by their owners and updates account balances correctly. As a result, operators cannot commit an invalid or manipulated state.
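A real zk-SNARK cannot be reproduced in a few lines, but the statement it attests to can. The sketch below writes that statement as an ordinary Python check; a ZK rollup would instead encode it as a circuit, and the contract would verify a short proof that the check passed, without re-running it. The function `statement_holds`, the JSON-based state commitment, and the `verify_signature` callback (passed in because Python’s standard library has no public-key signature scheme) are hypothetical.

```python
import hashlib
import json
from typing import Callable

# (sender, recipient, amount, nonce, signature)
SignedTx = tuple[str, str, int, int, bytes]


def state_root(balances: dict[str, int], nonces: dict[str, int]) -> str:
    data = json.dumps({"b": balances, "n": nonces}, sort_keys=True).encode()
    return hashlib.sha256(data).hexdigest()


def statement_holds(
    old_root: str,
    new_root: str,
    balances: dict[str, int],
    nonces: dict[str, int],
    txs: list[SignedTx],
    verify_signature: Callable[[str, bytes, bytes], bool],
) -> bool:
    """True iff applying every tx (each correctly signed, with the expected
    nonce and enough balance) to the state behind old_root yields new_root."""
    if state_root(balances, nonces) != old_root:
        return False  # witness does not match the committed pre-state
    b, n = dict(balances), dict(nonces)
    for sender, recipient, amount, nonce, sig in txs:
        message = json.dumps([sender, recipient, amount, nonce]).encode()
        if not verify_signature(sender, message, sig):
            return False  # not authorized by the account owner
        if nonce != n.get(sender, 0) or amount <= 0 or b.get(sender, 0) < amount:
            return False  # replayed or overdrawn transaction
        b[sender] -= amount
        b[recipient] = b.get(recipient, 0) + amount
        n[sender] = nonce + 1
    return state_root(b, n) == new_root
```

Unlike the optimistic sketch, a single bad transaction makes the whole statement false, so no valid proof exists for a manipulated batch. That is why the contract can accept a proven batch immediately instead of waiting out a dispute period.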

ZK rollups can finalize faster than optimistic rollups, since there is no dispute period to wait out, but they don’t provide an easy way for smart contracts to migrate to Layer 2.

Optimistic rollups, by contrast, run an EVM-compatible virtual machine called the OVM (Optimistic Virtual Machine), which can execute the same smart contracts that run on the main chain.

Decentralized exchanges using ZK rollups include Loopring and DeversiFi.

More articles on getting started with Optimism are coming soon. Tune in!



All Rights Reserved. Copyright , Central Coast Communications, Inc.