and the distribution of digital products.

Request Throttling: A Cybersecurity Shield Against Overload and Abuse

In today’s digital ecosystem, request throttling acts as a key gatekeeper, keeping systems stable by regulating the flow of incoming traffic. Without it, systems are far more exposed to Distributed Denial of Service (DDoS) attacks, sudden access surges, and brute-force login attempts. It is akin to a bouncer at a club who admits only as many guests as the venue can handle at once, so that things stay orderly inside. Throttling supports both security and fair usage, balancing service availability against system performance.

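Types of Request Throttling: Choosing the Right Strategy

Rate Limiting: Controls how many requests a user or client can send within a specified timeframe.

Example: A provisioned-capacity DynamoDB table with 60 write capacity units supports 60 writes per second for items up to 1 KB, or 3,600 writes per minute. The limit is enforced per second, though, so writes that arrive in a sudden burst can exceed the provisioned rate (beyond what DynamoDB's short-term burst capacity can absorb). DynamoDB then throttles the excess requests to protect the table's resources.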
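The following minimal token-bucket sketch in Python makes the rate-limiting idea concrete. Token buckets are one common way to implement rate limiting. This is not DynamoDB's internal algorithm. The class name `TokenBucket` and its `rate`/`capacity` parameters are illustrative assumptions. With `rate=60` and `capacity=60` it admits roughly 60 requests per second, echoing the 60-write-capacity-unit example above, and absorbs at most one second's worth of burst.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter: permits bursts of up to `capacity` requests
    and refills at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum tokens the bucket can hold
        self.clock = clock        # injectable time source (handy for tests)
        self.tokens = capacity    # start with a full bucket
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens and return True, or return False to throttle."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Usage with a fake clock: 60 requests pass instantly, the 61st is throttled.
fake_now = [0.0]
bucket = TokenBucket(rate=60, capacity=60, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(61)].count(True))  # 60
```

The clock is injectable so the behavior can be checked deterministically. In a real service, the bucket state would typically be kept per client (for example, keyed by API key). Across multiple servers, it would live in shared storage.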


Concurrent Request Limiting: Caps the number of requests the system processes simultaneously.

Example: AWS Lambda enforces a per-Region concurrency limit for each account. If a traffic spike uses up the available concurrency, further synchronous invocations are throttled (rejected with an HTTP 429 error) until running executions finish and free up capacity.
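A non-blocking semaphore is one way to sketch a concurrency limit. This minimal Python example assumes a hypothetical `ConcurrencyLimiter` wrapped around request handling. As with a throttled synchronous Lambda invocation, a caller that finds no free slot is rejected immediately rather than queued.

```python
import threading
from contextlib import contextmanager
from typing import Iterator


class ConcurrencyLimiter:
    """Caps in-flight requests; callers over the cap are rejected immediately."""

    def __init__(self, max_in_flight: int) -> None:
        self._slots = threading.BoundedSemaphore(max_in_flight)

    @contextmanager
    def slot(self) -> Iterator[bool]:
        """Yield True if a slot was acquired, False if the caller is throttled."""
        acquired = self._slots.acquire(blocking=False)
        try:
            yield acquired
        finally:
            # Release only what was actually acquired.
            if acquired:
                self._slots.release()


limiter = ConcurrencyLimiter(max_in_flight=2)
with limiter.slot() as ok:
    if ok:
        print("handling request")
    else:
        print("429: too many concurrent requests")
```

Passing `blocking=True` with a `timeout` to `acquire` would instead make callers wait briefly for a slot. That trades added latency for fewer rejections.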


Resource-based Throttling: Limits traffic according to the availability of underlying resources such as CPU, memory, or database partitions.

Example: A hot partition in DynamoDB occurs when a disproportionate share of requests targets the same partition key. The load concentrates on a single partition, causing delays or throttled requests even when the table as a whole still has spare capacity.
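A deliberately simplified model illustrates the hot-partition effect. It splits a table's per-second capacity evenly across partitions and throttles whatever exceeds each partition's share. The functions `partition_for` and `simulate_second` are hypothetical. Real DynamoDB behavior differs: it hashes keys internally and offers adaptive and burst capacity. Still, the sketch shows why one busy key can be throttled while the table as a whole is under its limit.

```python
import hashlib
from collections import Counter
from typing import List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a partition key to a partition with a stable hash (simplified model)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def simulate_second(keys: List[str], table_capacity: int, num_partitions: int) -> int:
    """Count how many of one second's requests are throttled when each
    partition receives an equal share of the table's per-second capacity."""
    per_partition = table_capacity // num_partitions
    load = Counter(partition_for(k, num_partitions) for k in keys)
    # Each partition throttles whatever exceeds its own share,
    # regardless of how much capacity other partitions have left.
    return sum(max(0, n - per_partition) for n in load.values())


# 60 writes all on one key: that partition's share is 20, so 40 are throttled
# even though the table-wide capacity of 60 was not exceeded.
print(simulate_second(["user#42"] * 60, table_capacity=60, num_partitions=3))  # 40
```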


User/IP-based Throttling: Applies different limits based on user identity or IP address.

Example: An AWS WAF rate-based rule can block an IP address that exceeds a configured threshold, such as 2,000 requests in five minutes, so that abusive traffic from a single source is contained.
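A fixed-window counter keyed by IP address can approximate the kind of per-IP throttling described above. This minimal sketch uses hypothetical names and the 2,000-requests-per-five-minutes threshold from the example. It is not how AWS WAF counts requests internally.

```python
from typing import Dict, Tuple


class FixedWindowIpLimiter:
    """Counts requests per IP in fixed time windows and rejects an IP's
    requests once it exceeds `limit` within the current window."""

    def __init__(self, limit: int = 2000, window_seconds: int = 300) -> None:
        self.limit = limit
        self.window = window_seconds
        # ip -> (window number, requests seen in that window)
        self.state: Dict[str, Tuple[int, int]] = {}

    def allow(self, ip: str, now: float) -> bool:
        window_id = int(now // self.window)  # which window `now` falls in
        seen_window, count = self.state.get(ip, (window_id, 0))
        if seen_window != window_id:
            count = 0  # a new window has started: reset this IP's counter
        count += 1
        self.state[ip] = (window_id, count)
        return count <= self.limit
```

Fixed windows are simple, but a client can send up to twice the limit across a window boundary. Sliding-window or token-bucket counters smooth this out at the cost of keeping more state.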


Endpoint-specific Throttling: Assigns separate limits to individual API endpoints.

Example: Twitter's API applies different rate limits to different endpoints. Heavy use of one endpoint therefore does not exhaust capacity needed by the others, and critical endpoints remain available during periods of high traffic.
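Endpoint-specific throttling mostly comes down to two steps. First, choose the limit by endpoint. Second, keep a separate counter for each (client, endpoint) pair. In this sketch, the endpoint paths and their budgets in `ENDPOINT_LIMITS` are hypothetical values for illustration, not Twitter's actual limits. The class name is also an assumption.

```python
from typing import Dict, Tuple

# Hypothetical per-endpoint budgets per 15-minute window (illustrative only).
ENDPOINT_LIMITS: Dict[str, int] = {
    "/timeline": 500,
    "/search": 100,
    "/post": 10,
}


class EndpointLimiter:
    """Fixed-window limiter with a separate budget per (client, endpoint)."""

    def __init__(self, limits: Dict[str, int], window_seconds: int = 900,
                 default_limit: int = 60) -> None:
        self.limits = limits
        self.window = window_seconds
        self.default_limit = default_limit  # for endpoints not listed
        # (client, endpoint) -> (window number, requests in that window)
        self.state: Dict[Tuple[str, str], Tuple[int, int]] = {}

    def allow(self, client_id: str, endpoint: str, now: float) -> bool:
        limit = self.limits.get(endpoint, self.default_limit)
        window_id = int(now // self.window)
        key = (client_id, endpoint)
        seen_window, count = self.state.get(key, (window_id, 0))
        if seen_window != window_id:
            count = 0  # new window: reset the counter for this pair
        count += 1
        self.state[key] = (window_id, count)
        return count <= limit
```

A client that exhausts its `/post` budget can still read `/timeline`, because each endpoint draws from its own counter.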


In cloud environments, autoscaling mechanisms are designed to respond to increased traffic. However, scaling isn’t instantaneous—there’s always a time lag. Throttling fills this gap by temporarily slowing down or rejecting incoming requests until additional capacity is available, preventing system crashes or degraded performance.

Throttling Responses: System Feedback Mechanisms

\

1. HTTP 429: Too Many Requests

The client has sent too many requests in a given amount of time.

Response: The server temporarily rejects further requests with status 429. It may include a Retry-After header indicating how long the client should wait before trying again.

Use Case: Common in APIs to limit excessive calls from clients.
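On the client side, honoring a 429 usually means reading `Retry-After` before retrying. Per the HTTP specification, the header carries either a number of seconds or an HTTP-date. The helper below handles both using only the Python standard library. The function name `retry_delay_seconds` and its 1-second fallback are assumptions.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_delay_seconds(retry_after: Optional[str], default: float = 1.0,
                        now: Optional[datetime] = None) -> float:
    """Seconds to wait before retrying, based on a Retry-After header value,
    which is either delay-seconds ("120") or an HTTP-date."""
    if not retry_after:
        return default  # header absent: use a fallback delay
    value = retry_after.strip()
    if value.isascii() and value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return default  # unparseable header: use the fallback delay
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)  # HTTP-dates are in GMT
    current = now if now is not None else datetime.now(timezone.utc)
    return max(0.0, (when - current).total_seconds())


print(retry_delay_seconds("120"))  # 120.0
```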

\

2. CAPTCHA or Login Challenge

When a system detects suspicious or excessive requests, it may redirect the user to a CAPTCHA page or login challenge to confirm that the user is human.

Response Example: A web application redirects the user to a CAPTCHA page where they must complete a puzzle before continuing.

Use Case: Useful for mitigating bot attacks or brute force login attempts while allowing legitimate users to proceed.
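Deciding when to show a challenge is a policy choice. One simple approach counts recent failed logins per client in a sliding window. Once the count reaches a limit, the system requires a CAPTCHA rather than blocking the client outright. The names and thresholds in this sketch are hypothetical.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class LoginChallengePolicy:
    """Requires a CAPTCHA (or another challenge) once a client has too many
    recent failed logins, instead of blocking it outright."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 600.0) -> None:
        self.max_failures = max_failures
        self.window = window_seconds
        # client id (e.g. IP or username) -> timestamps of recent failures
        self.failures: Dict[str, Deque[float]] = defaultdict(deque)

    def _prune(self, client: str, now: float) -> Deque[float]:
        q = self.failures[client]
        while q and now - q[0] >= self.window:
            q.popleft()  # forget failures older than the window
        return q

    def record_failure(self, client: str, now: float) -> None:
        self._prune(client, now).append(now)

    def record_success(self, client: str) -> None:
        self.failures.pop(client, None)  # a successful login clears history

    def needs_challenge(self, client: str, now: float) -> bool:
        return len(self._prune(client, now)) >= self.max_failures
```

Legitimate users who mistype a password once or twice never see the challenge. A script hammering the login form hits it quickly.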


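3. Rate Limit Headers: Know Your Quota

Some APIs send back headers such as X-RateLimit-Limit, X-RateLimit-Remaining, and X-RateLimit-Reset to inform clients about their quota usage. These names are a widespread convention rather than a formal standard, so their exact meaning varies between APIs.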

Response: The server attaches these headers to its responses so clients can see their limit, how many requests remain, and when the quota resets.

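Example:

- `X-RateLimit-Limit: 1000` (requests allowed per window)
- `X-RateLimit-Remaining: 0` (requests left in the current window)
- `X-RateLimit-Reset: 60` (here, seconds until the window resets; some APIs send a Unix timestamp instead)

Use Case: Helps clients manage their usage and avoid getting throttled.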

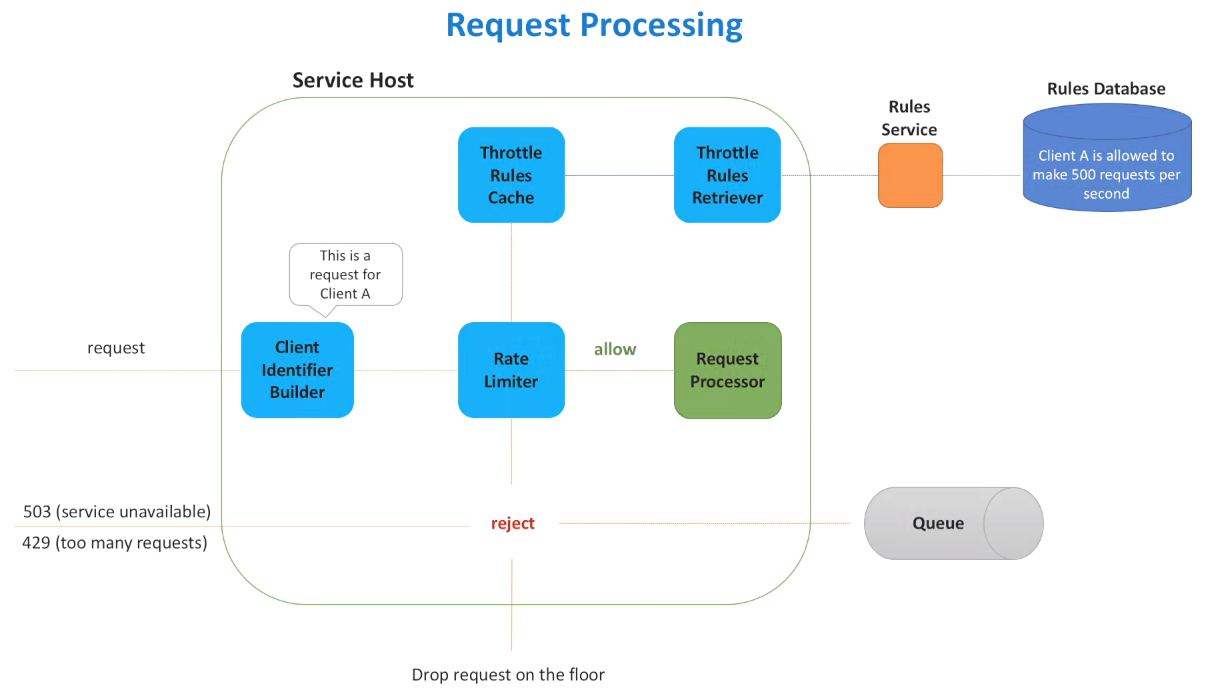
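A client can use these headers to slow down before the server starts returning 429s. This sketch assumes that `X-RateLimit-Reset` holds the number of seconds until the quota resets, which fits the value of 60 in the example above. For APIs that send a Unix timestamp, the client would need to subtract the current time first. The function name is hypothetical.

```python
from typing import Mapping


def seconds_until_allowed(headers: Mapping[str, str]) -> float:
    """How long to pause before the next request, based on X-RateLimit-*
    headers. Assumes X-RateLimit-Reset holds seconds until the quota resets."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    h = {name.lower(): value for name, value in headers.items()}
    remaining = h.get("x-ratelimit-remaining")
    reset = h.get("x-ratelimit-reset")
    if remaining is None or reset is None:
        return 0.0  # no quota information: nothing to wait for
    if int(remaining) > 0:
        return 0.0  # quota left: send immediately
    return max(0.0, float(reset))  # quota exhausted: wait for the reset


print(seconds_until_allowed({
    "X-RateLimit-Limit": "1000",
    "X-RateLimit-Remaining": "0",
    "X-RateLimit-Reset": "60",
}))  # 60.0
```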

4. Throttling with a Queue or Delay

Instead of rejecting requests, some systems place them in a queue and process them as resources become available, or introduce artificial delays.

Response: A service may send a response like, “Your request is queued; estimated wait time: 10 seconds.”

Use Case: Used in situations where deferred execution is acceptable, like bulk uploads.
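A queue-based throttle can be sketched as a bounded FIFO queue drained at a fixed rate, which returns an estimated wait like the message above. The class `ThrottledQueue` and its parameters are hypothetical. At a drain rate of 2 requests per second, the 20th request in line would get an estimate of 10 seconds. When the queue is full, the sketch returns `None`, and a service would typically fall back to rejecting the request with a 429.

```python
from collections import deque
from typing import Any, Deque, List, Optional


class ThrottledQueue:
    """Defers work instead of rejecting it: requests wait in a bounded FIFO
    queue that is drained at a fixed rate."""

    def __init__(self, drain_rate_per_sec: float, max_size: int) -> None:
        self.rate = drain_rate_per_sec
        self.max_size = max_size
        self.items: Deque[Any] = deque()

    def submit(self, item: Any) -> Optional[float]:
        """Enqueue `item` and return its estimated wait in seconds,
        or None if the queue is full (caller should fall back to a 429)."""
        if len(self.items) >= self.max_size:
            return None
        self.items.append(item)
        # Position in line divided by the drain rate gives a rough estimate.
        return len(self.items) / self.rate

    def drain(self, seconds: float) -> List[Any]:
        """Process as many queued items as the rate allows in `seconds`."""
        n = min(len(self.items), int(seconds * self.rate))
        return [self.items.popleft() for _ in range(n)]
```

The bound on queue size matters. An unbounded queue merely moves the overload from the service into memory and into ever-growing wait times.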

Balancing User Experience and Stability

Effective throttling is a delicate balance. If it is too strict, users face unnecessary rejections that harm the user experience. If it is too lenient, the system can collapse under excessive traffic. Well-designed systems use throttling as a safety net, degrading gracefully under heavy load with friendly error messages or subtle delays rather than abrupt failures.

Ultimately, request throttling keeps systems reliable and responsive, quietly managing surges behind the scenes, whether they come from enthusiastic users or malicious actors. It safeguards uptime, stabilizes performance, and keeps digital systems running smoothly in the face of growing demand.


:::info Stay tuned for Part 2: Designing and Implementing Request Throttling!

:::


All Rights Reserved. Copyright , Central Coast Communications, Inc.