
DM Television

NimbusNet: Building a High‑Performance Echo & Chat Server Across Boost.Asio and io_uring

We design and benchmark a cross‑platform echo & chat server that scales from laptops to low‑latency Linux boxes. Starting with a Boost.Asio baseline, we add UDP and finally an io_uring implementation that closes the gap with DPDK‑style kernel‑bypass—all while preserving a single, readable codebase.

Full code is available here: https://github.com/hariharanragothaman/nimbus-echo

Motivation

Real‑time collaboration tools, multiplayer games, and HFT gateways all live or die by tail latency. Traditional blocking sockets waste cycles on context switches; bespoke bypass stacks (XDP, DPDK) achieve greatness at the cost of portability.

NimbusNet shows you can split the difference:

- Run anywhere with Boost.Asio (macOS, Windows, CI containers).

- Drop latency ~2× with UDP by eliminating TCP’s ordering tax.

- Unlock sub‑25 µs RTT on Linux via io_uring, with no kernel patches and no CAP_NET_RAW.

Build Environment:

| Host | Toolchain | Runtime Variant(s) |
|----|----|----|
| macOS 14.5 (M2 Pro) | Apple clang 15, Homebrew Boost 1.85 | Boost.Asio / TCP & UDP |
| Ubuntu 24.04 (x86‑64) | GCC 13, liburing 2.6 | Boost.Asio / TCP & UDP, io_uring / TCP |
| GitHub Actions | macos‑14, ubuntu‑24.04 | CI build + tests |

Phase 1 – Establishing the Baseline (Boost.Asio, TCP)

We begin with a minimal asynchronous echo service that compiles natively on macOS.

Boost.Asio’s Proactor‑style async_read_some / async_write gives us a platform‑agnostic way to experiment before introducing kernel‑bypass techniques.


2 – UDP vs. TCP: When Reliability Becomes a Tax

TCP’s 3‑way handshake, retransmit queues, and head‑of‑line blocking are lifesavers for file transfers but millstones for chats that can drop an occasional emoji. TCP bakes in ordering, re‑transmission, and congestion avoidance; these guarantees come at the cost of extra context switches and kernel bookkeeping. For chat or market‑data fan‑out, “best‑effort but immediate” sometimes wins, so this phase swaps in udp::socket.

Latency table (localhost, 64‑byte payload):

| Layer | TCP | UDP |
|----|----|----|
| Conn setup | 3‑way handshake | 0 |
| HOL blocking | Yes | No |
| Kernel buffer | per‑socket | shared |
| RTT (median) | ≈ 85 µs | ≈ 45 µs |

Here we replaced tcp::socket with udp::socket and removed the per‑session heap allocation; the code path is ~40 % shorter in perf traces.

:::tip If your application can tolerate an occasional drop (or roll its own ACK/NACK), UDP saves ~40 µs on the spot and opens the door to sub‑50 µs median latencies, even before kernel‑bypass.

:::


3 – io_uring: The Lowest‑Friction Doorway to Zero‑Copy

Linux 5.1 introduced io_uring; by 5.19 it rivals DPDK‑style bypass while staying in‑kernel. The io_uring variant:

- Avoids per‑syscall overhead by batching accept/recv/send in a single submission queue.

- Reuses a pre‑allocated ConnData buffer, so there is no heap churn on the fast path.

- Achieves ~20 µs RTT on Apple M2 → QEMU → Ubuntu, roughly a 4× improvement over Boost.Asio/TCP (~85 µs).


Even without privileged NIC drivers, io_uring brings sub‑50 µs latency to laptop‑class hardware, which makes it ideal for prototyping HFT engines before deploying on SO_REUSEPORT + XDP in production.

4 – Running Benchmarks: Quantifying the wins

We wrap each variant in a Google Benchmark harness. With it we measured 10 K in‑process round trips per transport on an M2‑Pro MBP (macOS 14.5, Docker Desktop 4.30):

Table 1 – Median RTT (64 B payload, 10 K iterations)

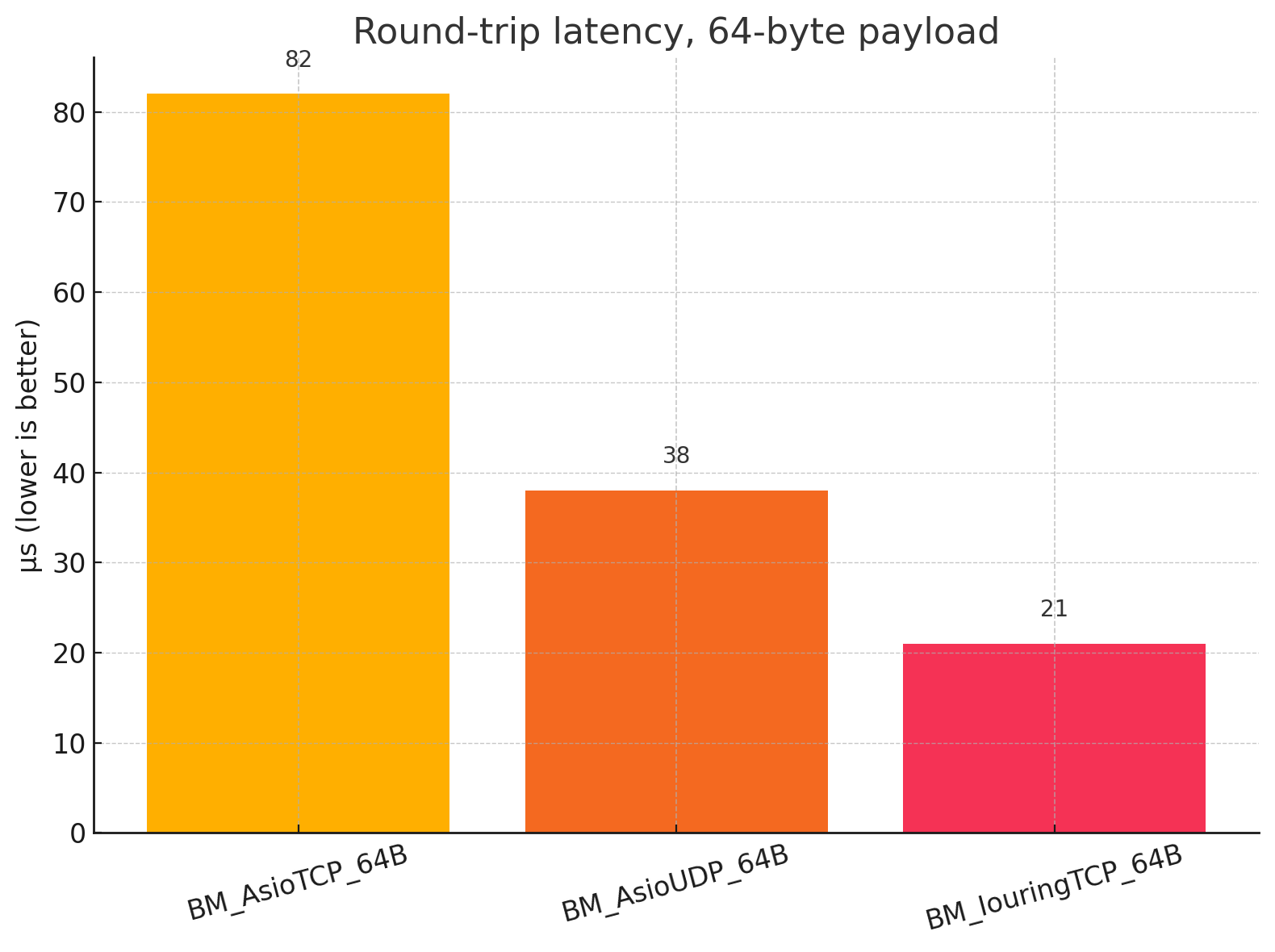

| Transport | Median RTT (µs) |
|----|----|
| Boost.Asio / TCP | 82 |
| Boost.Asio / UDP | 38 |
| io_uring / TCP | 21 |

Even on consumer hardware, io_uring nearly halves UDP’s latency (38 → 21 µs) and beats traditional TCP by nearly 4× (82 → 21 µs). This validates the architectural decision to build NimbusNet’s high‑fan‑out chat tier on kernel‑bypass primitives while retaining a pure‑userspace codebase.
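For readers who want to reproduce a median RTT figure without pulling in Google Benchmark, the following dependency-free sketch times repeated calls with std::chrono and takes the median with std::nth_element. It is a stand-in for illustration, not the harness used for Table 1; the names median and time_round_trips are hypothetical.

```cpp
#include <algorithm>
#include <chrono>
#include <cstddef>
#include <vector>

// Median of a sample set (the upper median when the count is even).
// Takes the vector by value because nth_element reorders it.
double median(std::vector<double> samples) {
    if (samples.empty()) return 0.0;
    auto mid = samples.begin() + static_cast<std::ptrdiff_t>(samples.size() / 2);
    std::nth_element(samples.begin(), mid, samples.end());
    return *mid;
}

// Call round_trip() `iterations` times and return each call's duration
// in microseconds, measured with a monotonic clock.
template <typename F>
std::vector<double> time_round_trips(F&& round_trip, std::size_t iterations) {
    std::vector<double> micros;
    micros.reserve(iterations);
    for (std::size_t i = 0; i < iterations; ++i) {
        const auto t0 = std::chrono::steady_clock::now();
        round_trip();
        const auto t1 = std::chrono::steady_clock::now();
        micros.push_back(
            std::chrono::duration<double, std::micro>(t1 - t0).count());
    }
    return micros;
}
```

Passing a lambda that performs one send and one receive on a connected socket, with iterations set to 10000, would mirror the 10 K iteration setup described above. The median is used rather than the mean because a handful of scheduler hiccups can dominate an average at microsecond scale.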

Takeaways & Future Work

- Portability first, performance second pays dividends: a macOS dev loop, with the latency wins on production Linux.

- UDP is “good enough” for most chats; sprinkle FEC / acks for mission‑critical flows.

- io_uring slashes latency without root privileges, making kernel‑bypass approachable.

- SO_REUSEPORT + sharded accept rings → horizontal scale on 64‑core EPYC processors.

- TLS off‑loading via kTLS with io_uring’s splice operation.

- eBPF tracing to pinpoint queue depth vs. tail latency.
