and the distribution of digital products.

Multi-Aspect Training: Adapting SDXL for Real-World Image Diversity

:::info Authors:

(1) Dustin Podell, Stability AI, Applied Research;

(2) Zion English, Stability AI, Applied Research;

(3) Kyle Lacey, Stability AI, Applied Research;

(4) Andreas Blattmann, Stability AI, Applied Research;

(5) Tim Dockhorn, Stability AI, Applied Research;

(6) Jonas Müller, Stability AI, Applied Research;

(7) Joe Penna, Stability AI, Applied Research;

(8) Robin Rombach, Stability AI, Applied Research.

:::

Table of Links

2.4 Improved Autoencoder and 2.5 Putting Everything Together

Appendix

D Comparison to the State of the Art

E Comparison to Midjourney v5.1

F On FID Assessment of Generative Text-Image Foundation Models

G Additional Comparison between Single- and Two-Stage SDXL pipeline

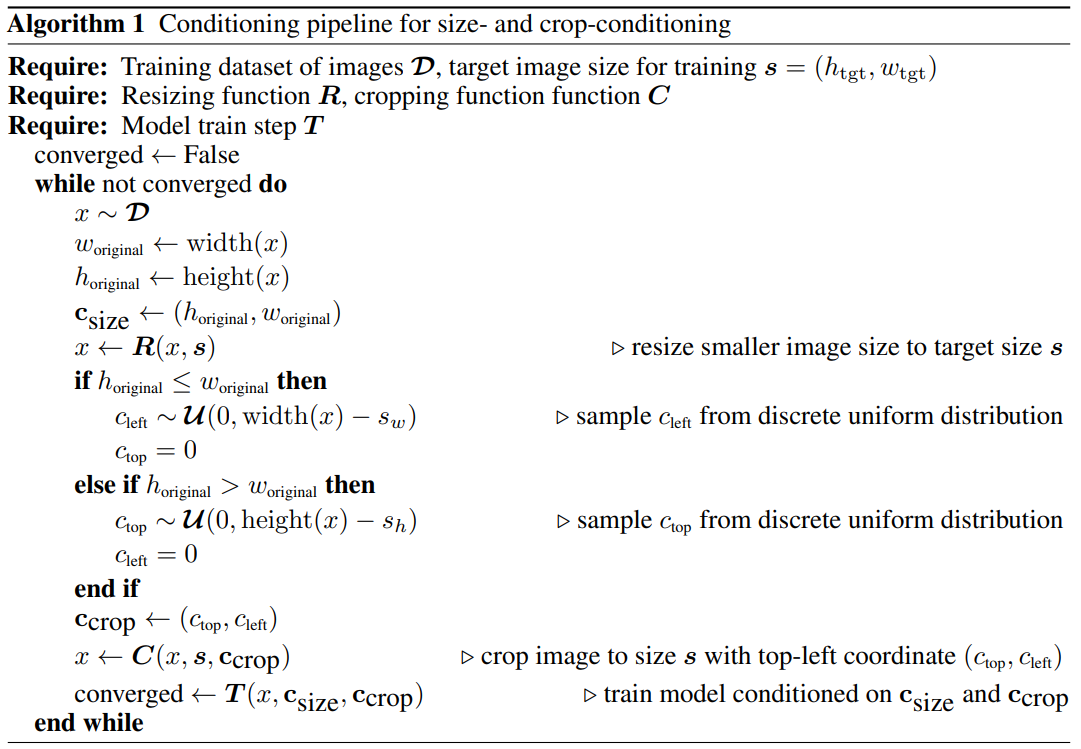

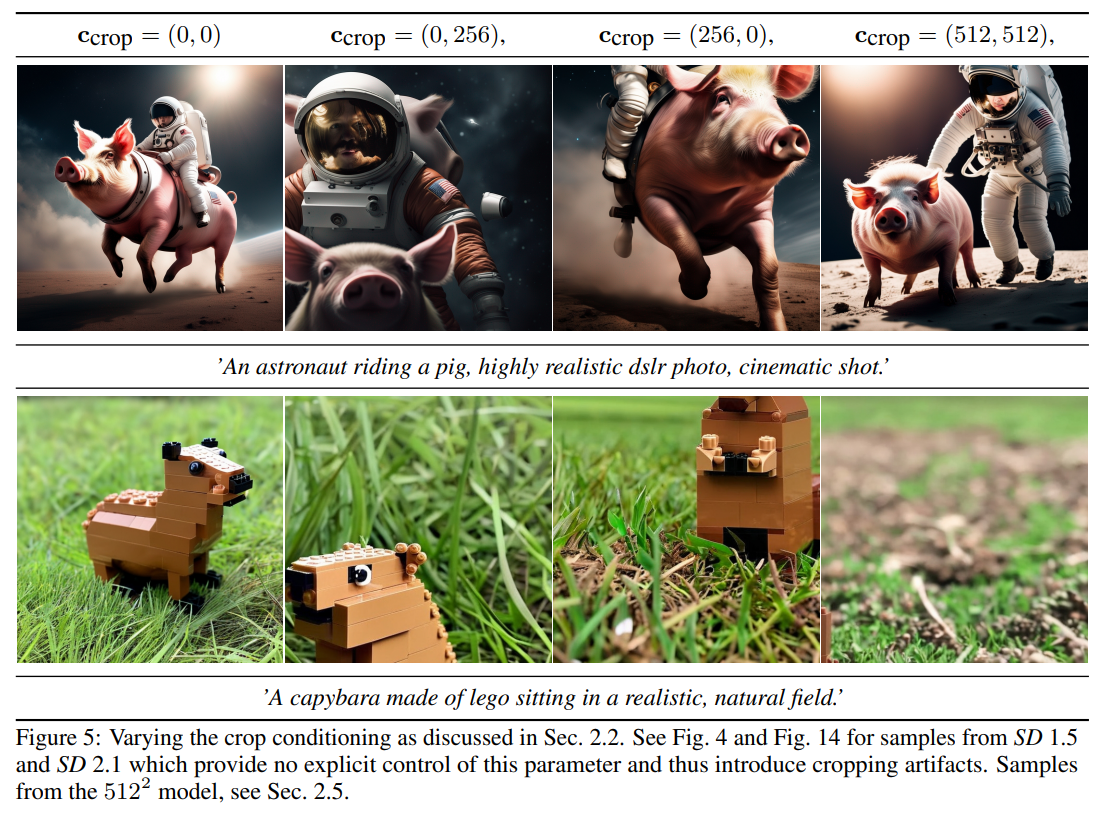

2.3 Multi-Aspect Training

Real-world datasets include images of widely varying sizes and aspect ratios (cf. Fig. 2). While the common output resolutions for text-to-image models are square images of 512 × 512 or 1024 × 1024 pixels, we argue that this is a rather unnatural choice, given the widespread distribution and use of landscape (e.g., 16:9) or portrait format screens.


In practice, we apply multi-aspect training as a finetuning stage after pretraining the model at a fixed aspect ratio and resolution, and we combine it with the conditioning techniques introduced in Sec. 2.2 via concatenation along the channel axis. Fig. 16 in App. J provides Python code for this operation. Note that crop-conditioning and multi-aspect training are complementary operations, and crop-conditioning then only works within the bucket boundaries (usually 64 pixels). For ease of implementation, however, we opt to keep this control parameter for multi-aspect models.
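The combination described above can be illustrated with a minimal sketch. It is not the code from Fig. 16 in App. J. It assumes that each conditioning integer (original size, crop offset, target bucket size) is embedded separately with a sinusoidal (Fourier) embedding and that the embeddings are then concatenated along the feature (channel) axis of a per-sample vector. The function names, the embedding width `dim=8`, and the short `BUCKETS` list are all hypothetical and chosen only for illustration.

```python
import math

def fourier_embed(value, dim=8, max_period=10000.0):
    """Sinusoidal embedding of one scalar into `dim` features (dim must be even)."""
    half = dim // 2
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    return [math.cos(value * f) for f in freqs] + [math.sin(value * f) for f in freqs]

def embed_conditioning(original_size, crop_top_left, target_size, dim=8):
    """Embed every integer separately, then concatenate into one flat vector."""
    features = []
    for pair in (original_size, crop_top_left, target_size):
        for value in pair:
            features.extend(fourier_embed(float(value), dim))
    return features

def nearest_bucket(height, width, buckets):
    """Return the (height, width) bucket whose log aspect ratio is closest."""
    log_ratio = math.log(height / width)
    return min(buckets, key=lambda hw: abs(math.log(hw[0] / hw[1]) - log_ratio))

# Hypothetical bucket list (assumption): every side is a multiple of 64 and
# the pixel count stays close to 1024 * 1024.
BUCKETS = [(1024, 1024), (1152, 896), (896, 1152), (1344, 768), (768, 1344)]

# A 1080 x 1920 (height x width) landscape image, uncropped.
target = nearest_bucket(1080, 1920, BUCKETS)
vec = embed_conditioning((1080, 1920), (0, 0), target)

print(target)    # (768, 1344)
print(len(vec))  # 48 = 3 pairs * 2 integers * 8 features
```

In this sketch the target size enters the vector in the same way as the size and crop conditionings, which is why the crop offset can be kept as a control parameter even when the image has already been assigned to a bucket.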


:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::
