and the distribution of digital products.

Meta has officially released Llama 3.2

Meta has announced the production release of Llama 3.2, an unprecedented collection of free and open-source artificial intelligence models aimed at shaping the future of machine intelligence with flexibility and efficiency.

Businesses are looking for AI solutions that run on the most common hardware and that suit both large enterprises and independent developers. Llama 3.2 adds new models aimed at that need.

Llama 3.2’s focus on edge and mobile devices

Meta’s emphasis on edge and mobile deployment is evident throughout this release.

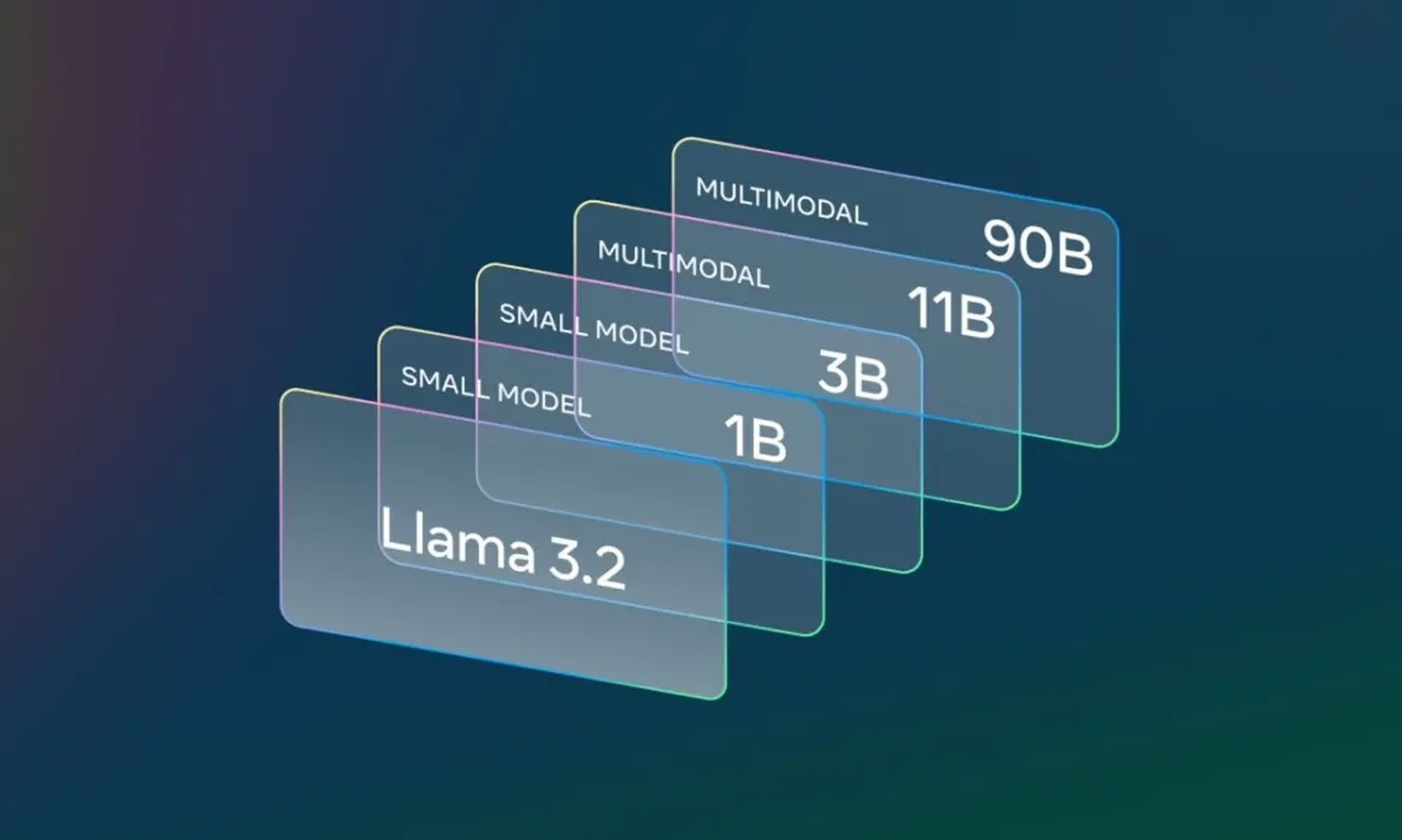

This release adds two small and medium-sized vision LLMs, at 11B and 90B parameters, along with lightweight text-only models at 1B and 3B.

In particular, the new models are optimized to run on edge devices, which makes the technology available to more users. The lightweight text-only models, which take no visual input, are designed for simpler tasks such as summarization and instruction following, so they suit devices with limited computing power.

Llama 3.2’s sub-models consist of two on-device and two multimodal models

Because these models run locally on mobile devices, none of the data is uploaded to the cloud. As Meta states:

“Running locally on mobile devices ensures that the data remains on the device, enhancing user privacy by avoiding cloud-based processing,”

This capability is especially useful for applications that process sensitive data, because the application can do its work while keeping that data confidential. For example, users can summarize and reply to personal messages, or extract to-do items from meetings, without sending that content to external servers.

Advancements in model architecture

The most significant changes in Llama 3.2 are architectural. The new vision models use an adapter-based architecture that connects an image encoder to a pre-trained text model without modifying the text model itself. This integration improves reasoning across both text and images and greatly expands the range of applications for these models.

The resulting pre-trained models then went through rigorous fine-tuning on large volumes of noisy image-text pair data.
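The adapter idea can be illustrated with a small sketch. The article does not give the layer design, so everything here is an assumption for illustration: the adapter is modeled as a gated cross-attention step with no learned projections, and the names `cross_attention`, `adapter_layer`, and `gate` are hypothetical. The point it shows is that image information is added on top of the text model's states through a residual path, so the text model itself does not have to change.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_states, image_features):
    """Let each text vector attend over the image feature vectors.

    Plain scaled dot-product attention without learned projections,
    which is a simplification made for this sketch.
    """
    dim = len(text_states[0])
    attended = []
    for query in text_states:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in image_features
        ]
        weights = softmax(scores)
        attended.append([
            sum(w * feat[i] for w, feat in zip(weights, image_features))
            for i in range(dim)
        ])
    return attended


def adapter_layer(text_states, image_features, gate):
    """Add gated image information to the text states (hypothetical adapter).

    The text states pass through a residual connection, so a gate of 0.0
    returns them exactly as the text-only model produced them.
    """
    attended = cross_attention(text_states, image_features)
    scale = math.tanh(gate)
    return [
        [t + scale * a for t, a in zip(t_vec, a_vec)]
        for t_vec, a_vec in zip(text_states, attended)
    ]
```

With `gate` set to `0.0` the function returns the text states unchanged, which mirrors the idea of leaving the pre-trained text model intact. A non-zero gate mixes in the attended image features.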

Llama 3.2 11B & 90B include support for a range of multimodal vision tasks. These capabilities enable scenarios like captioning images for accessibility, providing natural language insights based on data visualizations and more. pic.twitter.com/8kwTopytaf

— AI at Meta (@AIatMeta) September 25, 2024

The lightweight 1B and 3B models also support a context length of 128K tokens. The larger window lets them take in far more input at once, which is particularly valuable for long documents and multi-step reasoning.
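To make the 128K figure concrete, here is a minimal sketch of a pre-flight check that estimates whether a document fits in the context window. It rests on stated assumptions: "128K" is taken conservatively as 128,000 tokens, the four-characters-per-token ratio is a rough heuristic for English text rather than a property of the Llama tokenizer, and the function names and the `reserved_for_output` parameter are hypothetical.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # "128K" from the article, read conservatively
CHARS_PER_TOKEN = 4              # rough assumption; a real tokenizer gives exact counts


def estimate_tokens(text):
    """Estimate the token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text, reserved_for_output=1_000):
    """Check whether the text plus an output allowance fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


def split_into_chunks(text, reserved_for_output=1_000):
    """Split text into pieces that each fit the estimated budget."""
    budget_chars = (CONTEXT_WINDOW_TOKENS - reserved_for_output) * CHARS_PER_TOKEN
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]
```

In practice the estimate should be replaced with counts from the model's own tokenizer. The sketch only shows how a fixed token budget turns into a fit-or-split decision for long inputs.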

This capability to accommodate such large input sizes places Llama 3.2 at an advantage with respect to competitors in the dynamic AI market dominated by OpenAI’s GPT models.

How about the performance metrics?

Llama 3.2’s models have demonstrated exceptional performance metrics, further solidifying their competitive edge in the market. The 1B model achieved a score of 49.3 on the MMLU benchmark, while the 3B model scored 63.4. On the vision side, the 11B and 90B models showcased their capabilities with scores of 50.7 and 60.3, respectively, in visual reasoning tasks.

Evaluating performance on extensive human evaluations and benchmarks, results suggest that Llama 3.2 vision models are competitive with leading closed models on image recognition + a range of visual understanding tasks. pic.twitter.com/QtOzExBcrd

— AI at Meta (@AIatMeta) September 25, 2024

These metrics indicate that the Llama 3.2 models are competitive with, and on some benchmarks ahead of, similar offerings from other companies, such as Claude 3 Haiku and GPT-4o mini.

The integration of UnslothAI technology also adds to the efficiency of these models, enabling fine-tuning and inference that run twice as fast while cutting VRAM usage by 70%. This matters for developers who want to build real-time AI solutions without running into hardware limits.

Ecosystem collaboration and support

One of the key factors in Llama 3.2’s market readiness is its well-developed ecosystem. Partnerships with industry leaders such as Qualcomm, MediaTek, and AWS let developers deploy these models across a range of settings, from cloud environments to local devices.

Llama Stack distributions, such as those for on-device and single-node installations, give developers a ready-made way to build these models into their projects without added complications.

The lightweight Llama 3.2 models shipping today include support for @Arm, @MediaTek & @Qualcomm to enable the developer community to start building impactful mobile applications from day one. pic.twitter.com/DhhNcUviW7

— AI at Meta (@AIatMeta) September 25, 2024

How to use Meta Llama 3.2?

Llama 3.2, the latest version of Meta’s open-source AI model, is now available on the Meta Llama website, offering enhanced capabilities for customization, fine-tuning, and deployment across various platforms.

Developers can choose from four model sizes: 1B, 3B, 11B, and 90B, or continue utilizing the earlier Llama 3.1.
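As a rough illustration of choosing among the four sizes, the sketch below picks the largest model that matches a task and a size budget. It is not an official selection tool. The `ModelOption` type, the `pick_model` function, and the `max_parameters_b` budget are hypothetical, the names are plain labels rather than official model identifiers, and routing text-only tasks to the 1B and 3B models is a simplification based on how the article describes them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str          # illustrative label, not an official model identifier
    parameters_b: int  # parameter count in billions
    vision: bool       # True for the multimodal (image + text) models


LLAMA_3_2_MODELS = [
    ModelOption("Llama 3.2 1B", 1, vision=False),
    ModelOption("Llama 3.2 3B", 3, vision=False),
    ModelOption("Llama 3.2 11B", 11, vision=True),
    ModelOption("Llama 3.2 90B", 90, vision=True),
]


def pick_model(needs_images, max_parameters_b):
    """Return the largest matching model within the budget, or None."""
    candidates = [
        m for m in LLAMA_3_2_MODELS
        if m.vision == needs_images and m.parameters_b <= max_parameters_b
    ]
    return max(candidates, key=lambda m: m.parameters_b, default=None)
```

For example, `pick_model(needs_images=False, max_parameters_b=4)` selects the 3B entry, while a vision task with a budget below 11B returns `None` because no vision model is that small.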

Meta is not just releasing these models into the wild; they are keen on ensuring developers have everything they need to leverage Llama 3.2 effectively. This commitment includes sharing valuable tools and resources to help developers build responsibly. By continuously updating their best practices and engaging with the open-source community, Meta hopes to inspire innovation while promoting ethical AI usage.

“We’re excited to continue the conversations we’re having with our partners and the open-source community, and as always, we can’t wait to see what the community builds using Llama 3.2 and Llama Stack,”

Meta stated.

This collaborative approach not only enhances the capabilities of Llama 3.2 but also encourages a vibrant ecosystem. Whether for lightweight edge solutions or more complex multimodal tasks, Meta hopes that the new models will provide the flexibility needed to meet diverse user demands.

Image credits: Meta

All Rights Reserved. Copyright , Central Coast Communications, Inc.