
Meet Maia 100, Microsoft’s latest weapon in the AI hardware battle

Microsoft has found its way to compete with NVIDIA: the Maia 100 AI accelerator. The new chip is a strategic move to challenge NVIDIA’s dominance in AI hardware, offering capabilities designed for high-performance cloud computing. With the Maia 100, Microsoft aims to provide a more cost-effective and efficient way to run large-scale AI workloads.

Everything we know about Microsoft’s Maia 100 so far

Microsoft’s Maia 100 is an AI accelerator designed to handle large-scale AI workloads in the cloud. Unveiled at Hot Chips 2024, the chip is a significant step toward more cost-effective AI infrastructure. Here is how:

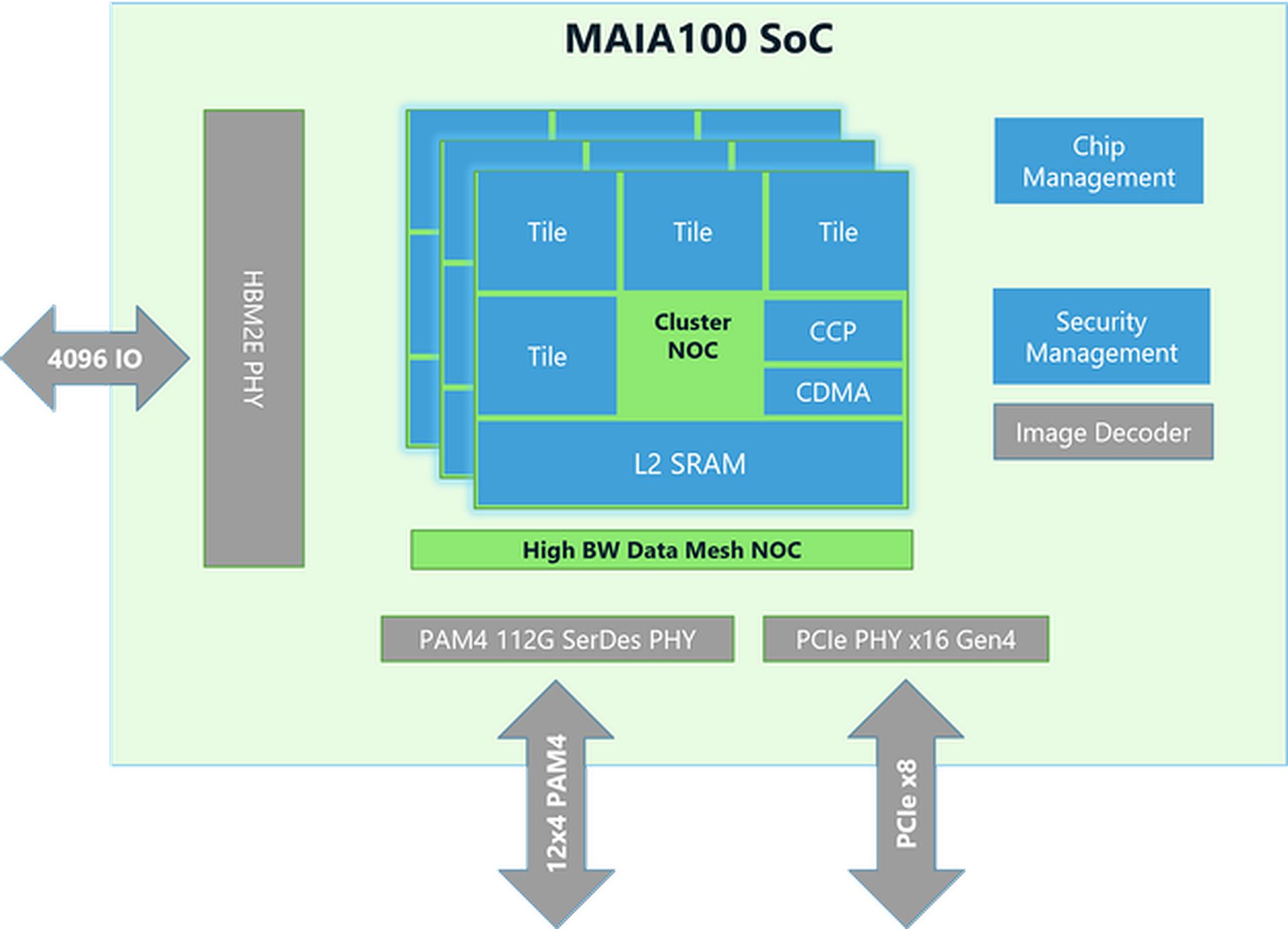

Chip design and technology

- Size and manufacturing: The Maia 100 chip measures about 820 mm² and is built on TSMC’s N5 process. It uses TSMC’s CoWoS-S packaging, which places the die and its HBM memory on a shared silicon interposer for tight integration.

- Memory and bandwidth: The chip pairs large on-die SRAM (a type of fast memory) with four HBM2E memory stacks. The HBM stacks provide 64 gigabytes of capacity and 1.8 terabytes per second of bandwidth, which is crucial for feeding large AI datasets to the compute units quickly.

- Power usage: Maia 100 is designed to support up to 700 watts (its thermal design power) but is provisioned at 500 watts, balancing performance against power consumption.

- Tensor unit: A high-speed tensor unit handles the heavy math behind training and inference. It supports several data types, including the MX (microscaling) format that Microsoft introduced in 2023, and is built to perform many calculations in parallel.

- Vector processor: A custom superscalar vector engine supports data types such as FP32 (32-bit floating point) and BF16 (bfloat16, a 16-bit floating-point format), covering a wide range of machine learning operations.

- DMA engine: The Direct Memory Access (DMA) engine moves data quickly and supports different tensor sharding schemes (ways of splitting tensors into chunks), which improves efficiency.
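To put the memory figures in perspective, the back-of-the-envelope calculation below divides the quoted 1.8 TB/s evenly across the four HBM2E stacks and estimates how long one full sweep of the 64 GB capacity would take. It assumes decimal units (1 TB = 10^12 bytes), an even split across stacks, and that peak bandwidth is sustained for the whole transfer, which real workloads rarely achieve.

```python
# Back-of-the-envelope HBM math for Maia 100, using the published figures.
# Assumptions: decimal units, bandwidth split evenly across stacks, and
# peak bandwidth sustained for the entire transfer.

HBM_STACKS = 4
TOTAL_BANDWIDTH_BYTES_PER_S = 1.8e12  # 1.8 TB/s
HBM_CAPACITY_BYTES = 64e9             # 64 GB


def per_stack_bandwidth(total_bw: float, stacks: int) -> float:
    """Bandwidth each stack contributes if the total is split evenly."""
    return total_bw / stacks


def full_sweep_seconds(capacity: float, total_bw: float) -> float:
    """Time to read every byte of HBM once at peak bandwidth."""
    return capacity / total_bw


if __name__ == "__main__":
    stack_bw = per_stack_bandwidth(TOTAL_BANDWIDTH_BYTES_PER_S, HBM_STACKS)
    sweep = full_sweep_seconds(HBM_CAPACITY_BYTES, TOTAL_BANDWIDTH_BYTES_PER_S)
    print(f"per-stack bandwidth: {stack_bw / 1e9:.0f} GB/s")  # 450 GB/s
    print(f"full 64 GB sweep:    {sweep * 1e3:.1f} ms")        # 35.6 ms
```

At these idealized rates, a workload that had to stream all 64 GB on every step would be capped at roughly 28 steps per second, which is one reason on-die SRAM and data reuse matter.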
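The MX formats are block-scaled ("microscaling") number formats: a block of values shares one power-of-two scale, and each element is stored in a narrow type. The sketch below is a simplified illustration of that idea, assuming blocks of 32 elements (the block size used in the OCP MX specification) and signed 8-bit integer elements. It does not reproduce the exact OCP MX bit layout and makes no claim about how Maia’s tensor unit implements the format.

```python
import math
from typing import List, Tuple

BLOCK_SIZE = 32  # elements sharing one scale (the OCP MX spec uses 32)
ELEM_BITS = 8    # simplification: signed 8-bit integer elements


def quantize_block(values: List[float]) -> Tuple[int, List[int]]:
    """Return (shared exponent, integer elements) for one block.

    The scale is the smallest power of two that keeps every element
    within the signed ELEM_BITS range.
    """
    qmax = 2 ** (ELEM_BITS - 1) - 1  # 127 for 8-bit elements
    amax = max(abs(v) for v in values)
    if amax == 0:
        return 0, [0] * len(values)
    exp = math.ceil(math.log2(amax / qmax))
    scale = 2.0 ** exp
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return exp, q


def dequantize_block(exp: int, q: List[int]) -> List[float]:
    """Multiply each integer element by the block's shared scale."""
    scale = 2.0 ** exp
    return [x * scale for x in q]


def quantize(values: List[float]) -> List[Tuple[int, List[int]]]:
    """Split a flat tensor into blocks and quantize each one independently."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(blocks: List[Tuple[int, List[int]]]) -> List[float]:
    """Reassemble a flat tensor from quantized blocks."""
    out: List[float] = []
    for exp, q in blocks:
        out.extend(dequantize_block(exp, q))
    return out


if __name__ == "__main__":
    data = [0.5, -1.0, 0.25, 3.0]
    blocks = quantize(data)
    print(blocks)              # [(-5, [16, -32, 8, 96])]
    print(dequantize(blocks))  # [0.5, -1.0, 0.25, 3.0]
```

Because each block carries its own scale, a large outlier only coarsens the precision of its own block rather than of the whole tensor, which is the main appeal of block scaling over a single per-tensor scale.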
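BF16 keeps FP32’s sign bit and 8-bit exponent but only the top 7 mantissa bits, so converting FP32 to BF16 amounts to rounding away the low 16 bits. The sketch below shows that relationship in plain Python using round-to-nearest-even. It is a generic illustration of the format, not Maia’s vector-processor implementation, and it does not special-case NaN inputs.

```python
import math
import struct


def fp32_to_bf16_bits(x: float) -> int:
    """Round an FP32 value to BF16 and return its 16-bit pattern.

    Uses round-to-nearest-even on the 16 discarded mantissa bits.
    NaN inputs are not special-cased in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    lsb = (bits >> 16) & 1          # lowest bit that survives truncation
    rounding_bias = 0x7FFF + lsb    # exact ties round toward an even result
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_fp32(b: int) -> float:
    """Widen a BF16 bit pattern back to FP32 by zero-filling the low bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


if __name__ == "__main__":
    for value in (1.0, math.pi, 65504.0):
        b = fp32_to_bf16_bits(value)
        print(f"{value!r:>20} -> 0x{b:04X} -> {bf16_bits_to_fp32(b)!r}")
```

Because the exponent field is unchanged, BF16 covers the same dynamic range as FP32 and gives up precision instead (about 2 to 3 significant decimal digits), which is why it is popular for training.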


Data handling and efficiency


- Data storage and compression: Maia 100 stores data in lower-precision formats and includes a compression engine, reducing the volume of data that has to move between memory and compute. This helps it handle large AI workloads more efficiently.

- Scratch pads: Large L1 and L2 scratch pads (on-chip temporary storage) are managed by software rather than by hardware caching logic, so data placement can be planned to maximize reuse and save power.

- Networking: The chip supports high-speed Ethernet with up to 4800 Gbps of bandwidth for all-gather traffic and 1200 Gbps for all-to-all traffic. A custom RoCE-like protocol provides reliable, fast data transfer, secured with AES-GCM encryption.
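The payoff of lower-precision storage is easy to quantify: fewer bits per element means fewer bytes to move. The sketch below compares the storage footprint of a hypothetical 7-billion-parameter model in FP32, BF16, and an 8-bit MX-style format, assuming one shared 8-bit scale per 32-element block (8 + 8/32 = 8.25 bits per element). The model size is an illustrative assumption, and the numbers ignore any further savings from Maia’s compression engine, whose scheme the article does not describe.

```python
# Storage footprint of a tensor under different element formats.
# Assumption: the MX-style format stores 8-bit elements plus one
# 8-bit shared scale per 32-element block.

BITS_PER_ELEMENT = {
    "fp32": 32.0,
    "bf16": 16.0,
    "mx8": 8.0 + 8.0 / 32,  # element bits + amortized shared scale
}


def footprint_gb(num_elements: int, fmt: str) -> float:
    """Decimal gigabytes needed to store num_elements in the given format."""
    return num_elements * BITS_PER_ELEMENT[fmt] / 8 / 1e9


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical model size, for illustration only
    for fmt in BITS_PER_ELEMENT:
        print(f"{fmt:>5}: {footprint_gb(params, fmt):6.2f} GB")
```

Moving from FP32 to the 8-bit format cuts bytes per element by roughly 3.9 times, which translates directly into less memory traffic on every pass over the weights.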
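Software-managed scratch pads leave the decision of what fits on-chip to the compiler or kernel author. A common approach is tiling: choose the largest tile of a matrix multiplication whose working set fits in the scratch pad. The sketch below does this for square BF16 tiles of A, B, and C. The 2 MiB capacity and the 16-element alignment are hypothetical placeholders, since the article does not give Maia’s L1 or L2 sizes or tiling constraints.

```python
# Pick the largest square tile size T such that one T x T tile each of
# A, B, and C fits in a software-managed scratch pad.
# Capacity and alignment are hypothetical; Maia's actual L1/L2 sizes
# are not given in the source.

BYTES_PER_ELEMENT = 2               # BF16
SCRATCHPAD_BYTES = 2 * 1024 * 1024  # hypothetical 2 MiB scratch pad
TILE_ALIGNMENT = 16                 # hypothetical: tiles are multiples of 16


def largest_tile(capacity: int, bytes_per_elem: int,
                 tiles_resident: int = 3, align: int = TILE_ALIGNMENT) -> int:
    """Largest aligned T with tiles_resident * T * T * bytes_per_elem <= capacity.

    Returns 0 if not even one aligned tile fits.
    """
    t = align
    # Grow T while the next aligned size still fits.
    while tiles_resident * (t + align) ** 2 * bytes_per_elem <= capacity:
        t += align
    if tiles_resident * t * t * bytes_per_elem > capacity:
        return 0
    return t


if __name__ == "__main__":
    tile = largest_tile(SCRATCHPAD_BYTES, BYTES_PER_ELEMENT)
    print(f"tile size: {tile} x {tile}")
```

Keeping a second set of tiles in flight for double buffering would double `tiles_resident`, and the same function then shows how far the tiles must shrink.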
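The networking figures can be turned into a rough time estimate. In an idealized all-gather across N accelerators, each one must receive the N - 1 shards it does not already hold. The sketch below applies that to the quoted 4800 Gbps all-gather figure. It assumes that number is usable per-accelerator bandwidth and ignores latency, protocol overhead, and encryption cost, so real transfers will take longer; the 8-device, 1 GB example is hypothetical.

```python
# Idealized all-gather time: each of N devices holds one shard of
# shard_bytes and must receive the other N - 1 shards.
# Assumes the full link bandwidth is usable and ignores latency and
# protocol/encryption overhead, so this is a lower bound.

ALL_GATHER_GBPS = 4800  # published all-gather bandwidth


def all_gather_seconds(shard_bytes: float, num_devices: int,
                       link_gbps: float = ALL_GATHER_GBPS) -> float:
    """Lower-bound time for a bandwidth-bound all-gather."""
    bytes_received = (num_devices - 1) * shard_bytes
    return bytes_received * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    # Hypothetical example: 8 accelerators, 1 GB shard each.
    t = all_gather_seconds(shard_bytes=1e9, num_devices=8)
    print(f"{t * 1e3:.2f} ms")
```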


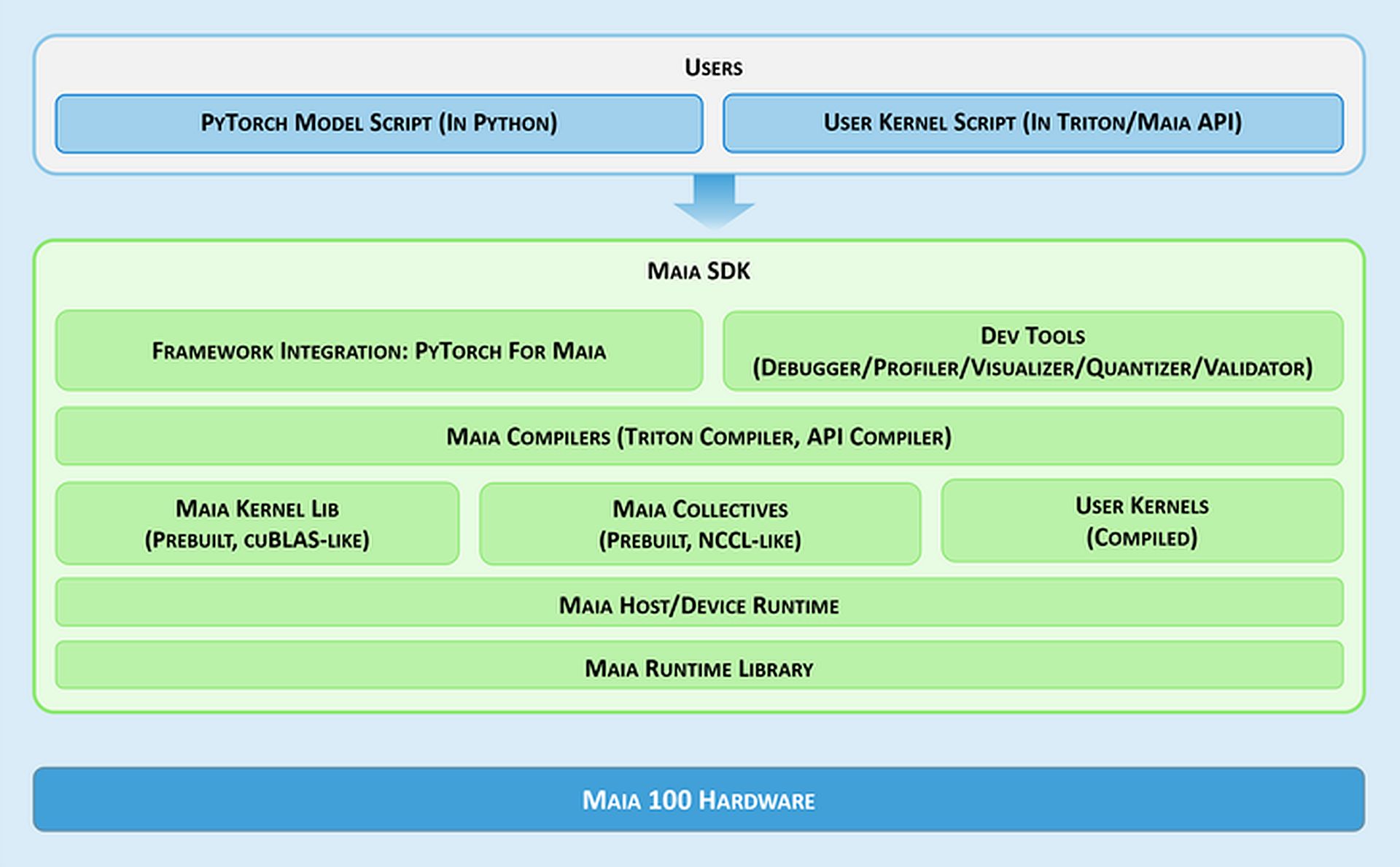

Software tools and integration

Microsoft provides a set of tools, the Maia SDK, that makes the Maia 100 easier to use. It includes:

- Framework integration: A PyTorch backend that supports both eager mode (for quick development) and graph mode (for better performance).

- Developer tools: Tools for debugging, performance tuning, and validating models, which help in improving the efficiency of AI tasks.

- Compilers: Maia supports two programming models: Triton, an easy-to-use domain-specific language for deep learning, and the Maia API, a custom model for maximum performance.

- Kernel and collective library: Provides optimized compute and communication (collective) kernels for machine learning, with the option to write custom kernels.

- Host/Device runtime: Manages memory allocation, running programs, scheduling tasks, and device management.

(Credit: Microsoft)

Programming models and data handling


- Asynchronous programming: Maia supports asynchronous programming with semaphores, which helps overlap computations with data transfers to improve efficiency.

- Programming models: Developers can choose between:

  - Triton: A simple domain-specific language for deep learning that works on both GPUs and Maia. It handles memory and synchronization automatically.

  - Maia API: A lower-level programming model for developers who need fine-grained control over performance, at the cost of more code and manual management.

- Data flow optimization: For distributed matrix multiplications (GEMMs), Maia uses a gather-based approach instead of the traditional all-reduce approach. Results are combined directly in SRAM (fast memory), which reduces latency and improves performance.

- SRAM usage: The chip uses SRAM to temporarily store data and results, which reduces the need for slower memory accesses and boosts overall performance.
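The semaphore pattern behind overlapping computation with data transfers is the classic double buffer: a loader fills one buffer while the compute side works on the other, and two semaphores track which buffers are free and which are ready. The sketch below models that with Python threads, where the loader stands in for a DMA engine and the compute thread for the tensor or vector unit. It illustrates the synchronization pattern only; the function name is hypothetical and the code does not use, or claim to mirror, the Maia API.

```python
import threading
from typing import List

NUM_BUFFERS = 2  # double buffering: load tile i+1 while computing on tile i


def pipelined_sum_of_squares(tiles: List[List[float]]) -> List[float]:
    """Compute sum(v*v) per tile, overlapping 'loads' with 'compute'."""
    buffers: List[List[float]] = [[] for _ in range(NUM_BUFFERS)]
    empty = threading.Semaphore(NUM_BUFFERS)  # counts free buffers
    full = threading.Semaphore(0)             # counts loaded buffers
    results: List[float] = []

    def loader() -> None:
        # Stands in for a DMA engine copying tiles into on-chip buffers.
        for i, tile in enumerate(tiles):
            empty.acquire()                    # wait for a free buffer
            buffers[i % NUM_BUFFERS] = list(tile)
            full.release()                     # signal: buffer is ready

    def compute() -> None:
        for i in range(len(tiles)):
            full.acquire()                     # wait until tile i is loaded
            tile = buffers[i % NUM_BUFFERS]
            results.append(sum(v * v for v in tile))
            empty.release()                    # hand the buffer back

    load_thread = threading.Thread(target=loader)
    compute_thread = threading.Thread(target=compute)
    load_thread.start()
    compute_thread.start()
    load_thread.join()
    compute_thread.join()
    return results


if __name__ == "__main__":
    print(pipelined_sum_of_squares([[1, 2], [3], [4, 5, 6]]))  # [5, 9, 77]
```

With more than two buffers the loader can run further ahead, which hides more transfer latency at the cost of more on-chip memory.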
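To see why the choice between all-reduce and gather matters, consider splitting C = A × B across devices in two common ways. Sharding the inner (K) dimension leaves every device with a partial C that must be summed across devices (an all-reduce); sharding the output columns (N) gives each device a finished slice of C that only needs to be concatenated (a gather). The sketch below simulates both on one machine with plain lists and checks that they agree. It is a generic tensor-parallel illustration that assumes dimensions divide evenly across devices; the article does not detail Maia’s exact partitioning.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive dense matrix multiply: (m x k) @ (k x n) -> (m x n)."""
    k, n = len(b), len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)]
            for row in a]


def allreduce_style(a: Matrix, b: Matrix, devices: int) -> Matrix:
    """Shard K: each device computes a partial C; partials are summed."""
    step = len(b) // devices  # assumes K divides evenly
    partials = []
    for d in range(devices):
        ks = range(d * step, (d + 1) * step)
        a_d = [[row[p] for p in ks] for row in a]  # slice of A's columns
        b_d = [b[p] for p in ks]                   # matching rows of B
        partials.append(matmul(a_d, b_d))
    # All-reduce step: element-wise sum of every device's partial result.
    return [[sum(part[i][j] for part in partials) for j in range(len(b[0]))]
            for i in range(len(a))]


def gather_style(a: Matrix, b: Matrix, devices: int) -> Matrix:
    """Shard N: each device computes complete columns of C; slices are joined."""
    step = len(b[0]) // devices  # assumes N divides evenly
    slices = []
    for d in range(devices):
        cols = range(d * step, (d + 1) * step)
        b_d = [[row[c] for c in cols] for row in b]
        slices.append(matmul(a, b_d))
    # Gather step: concatenate column slices; no arithmetic needed.
    return [[x for s in slices for x in s[i]] for i in range(len(a))]


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    print(allreduce_style(A, B, devices=2))  # [[19, 22], [43, 50]]
    print(gather_style(A, B, devices=2))     # [[19, 22], [43, 50]]
```

Both versions produce the same C, but the all-reduce version must exchange and add full-size partial results, while the gather version only moves finished slices. That is one intuition for why a gather-based dataflow can reduce latency; the trade-off is that every device in the gather version needs all of A.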

Maia 100 can run PyTorch models with minimal changes. The PyTorch backend supports both eager mode for development and graph mode for high performance, making it easy to move models across different hardware setups.
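The difference between the two modes can be shown with a toy model in plain Python. In eager mode each operation runs immediately and materializes its full result; in graph mode the whole sequence of operations is captured first, so a compiler can, for example, fuse them into a single pass over the data. The sketch below is a conceptual illustration only: the function names are hypothetical, and it uses neither PyTorch nor the Maia backend.

```python
from typing import Callable, List

Op = Callable[[float], float]


def run_eager(x: List[float], ops: List[Op]) -> List[float]:
    """Eager: apply each op as soon as it is issued.

    Every op makes a full pass over the data and materializes an
    intermediate list, much like launching one kernel per op.
    """
    for op in ops:
        x = [op(v) for v in x]
    return x


def compile_graph(ops: List[Op]) -> Callable[[List[float]], List[float]]:
    """Graph: capture the op sequence first, then build a fused runner.

    Because the whole chain is known up front, it is applied to each
    element in one pass with no intermediate lists.
    """
    captured = list(ops)  # snapshot of the captured graph

    def fused(v: float) -> float:
        for op in captured:
            v = op(v)
        return v

    def run(x: List[float]) -> List[float]:
        return [fused(v) for v in x]

    return run


if __name__ == "__main__":
    ops: List[Op] = [lambda v: v * 2.0, lambda v: v + 1.0, lambda v: max(v, 0.0)]
    data = [-3.0, 0.5, 2.0]
    print(run_eager(data, ops))      # [0.0, 2.0, 5.0]
    print(compile_graph(ops)(data))  # [0.0, 2.0, 5.0]
```

Both paths give identical results; graph mode simply trades an up-front capture step for fewer passes over memory at run time, which is the kind of optimization a graph compiler can apply.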

Need a recap of Maia 100’s specs?

| Specification | Details |
|---|---|
| Chip size | ~820 mm² |
| Manufacturing technology | TSMC N5 process with CoWoS-S interposer |
| On-die SRAM | Large capacity for fast data access |
| Memory | 64 GB HBM2E (High Bandwidth Memory) |
| Total bandwidth | 1.8 terabytes per second |
| Thermal design power (TDP) | Supports up to 700 W, provisioned at 500 W |
| Tensor unit | High-speed, supports MX format, 16xRx16 |
| Vector processor | Custom superscalar engine, supports FP32 and BF16 |
| DMA engine | Supports various tensor sharding schemes |
| Data compression | Includes compression engine for efficiency |
| Ethernet bandwidth | Up to 4800 Gbps all-gather, 1200 Gbps all-to-all |
| Network protocol | Custom RoCE-like, AES-GCM encryption |
| Programming models | Triton (domain-specific language), Maia API (custom model) |
| Scratch pads | Large L1 and L2, software-managed |
| SRAM usage | For buffering activations and results |
| Software SDK | Includes PyTorch backend, debugging tools, compilers, and runtime management |
| Data flow optimization | Gather-based matrix multiplication (GEMMs) |

That’s all! In summary, Microsoft’s Maia 100 AI accelerator positions itself as a direct competitor to NVIDIA’s offerings in the AI hardware market. With its advanced architecture and performance features, the Maia 100 seeks to provide a viable alternative for handling large-scale AI tasks.

Featured image credit: Microsoft


All Rights Reserved. Copyright , Central Coast Communications, Inc.