and the distribution of digital products.

DM Television

LLM’s Diverse Capabilities in Video Generation and Limitations

:::info Authors:

(1) Dan Kondratyuk, Google Research and with Equal contribution;

(2) Lijun Yu, Google Research, Carnegie Mellon University and with Equal contribution;

(3) Xiuye Gu, Google Research and with Equal contribution;

(4) Jose Lezama, Google Research and with Equal contribution;

(5) Jonathan Huang, Google Research and with Equal contribution;

(6) Grant Schindler, Google Research;

(7) Rachel Hornung, Google Research;

(8) Vighnesh Birodkar, Google Research;

(9) Jimmy Yan, Google Research;

(10) Krishna Somandepalli, Google Research;

(11) Hassan Akbari, Google Research;

(12) Yair Alon, Google Research;

(13) Yong Cheng, Google DeepMind;

(14) Josh Dillon, Google Research;

(15) Agrim Gupta, Google Research;

(16) Meera Hahn, Google Research;

(17) Anja Hauth, Google Research;

(18) David Hendon, Google Research;

(19) Alonso Martinez, Google Research;

(20) David Minnen, Google Research;

(21) Mikhail Sirotenko, Google Research;

(22) Kihyuk Sohn, Google Research;

(23) Xuan Yang, Google Research;

(24) Hartwig Adam, Google Research;

(25) Ming-Hsuan Yang, Google Research;

(26) Irfan Essa, Google Research;

(27) Huisheng Wang, Google Research;

(28) David A. Ross, Google Research;

(29) Bryan Seybold, Google Research and with Equal contribution;

(30) Lu Jiang, Google Research and with Equal contribution.

:::

Table of Links3. Model Overview and 3.1. Tokenization

3.2. Language Model Backbone and 3.3. Super-Resolution

4. LLM Pretraining for Generation

5. Experiments

5.2. Pretraining Task Analysis

5.3. Comparison with the State-of-the-Art

5.4. LLM’s Diverse Capabilities in Video Generation and 5.5. Limitations

6. Conclusion, Acknowledgements, and References

5.4. LLM’s Diverse Capabilities in Video GenerationThis subsection presents several capabilities we discover from the pretrained VideoPoet, shedding light on the LLM’s promising potential in video generation. By combining the

\

\

\ flexibility of our autoregressive language model to perform diverse tasks such as extending video in time, inpainting, outpainting, and stylization, VideoPoet accomplishes multiple tasks in a unified model.

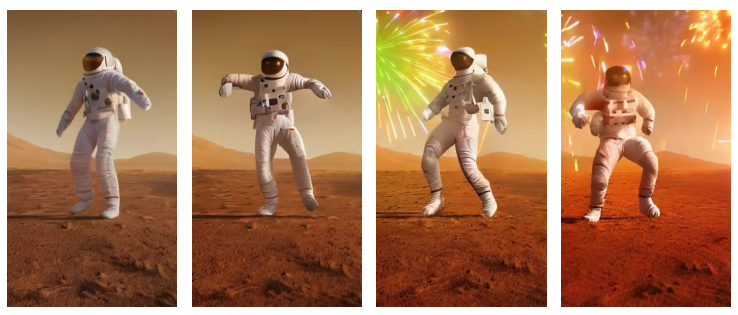

\ Coherent long video generation and image-to-video. A benefit of an decoder-based language model is that it pairs well with autoregressively extending generation in time. We present two different variants: Generating longer videos and converting images to videos. Encoding the first frame independently allows us to convert any image into the initial frame of a video without padding. Subsequent frames are generated by predicting remaining tokens, transforming the image into a video as shown in Fig. 6 [1].

\

\ based on previously generated video, and produces temporally consistent videos without significant distortion. Such capabilities are rarely observed in contemporary diffusion models.

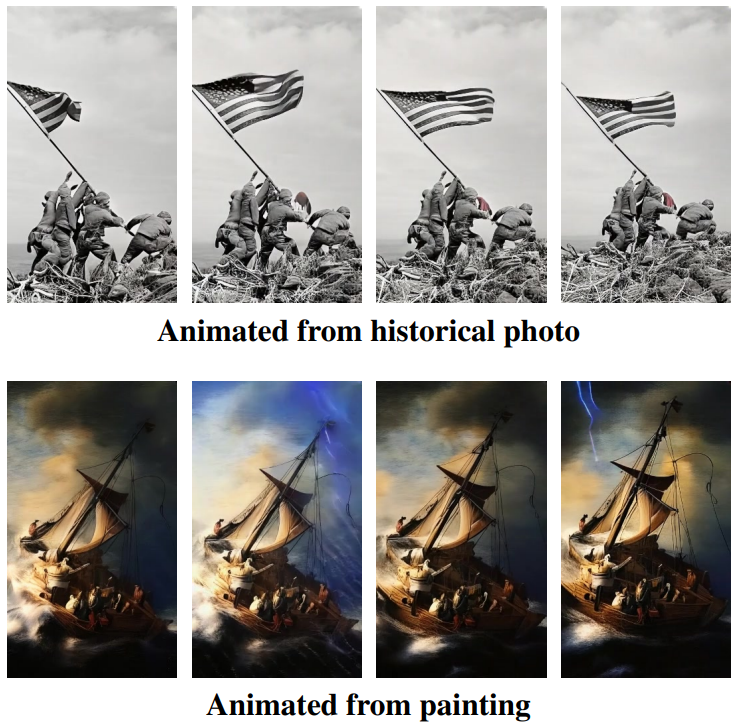

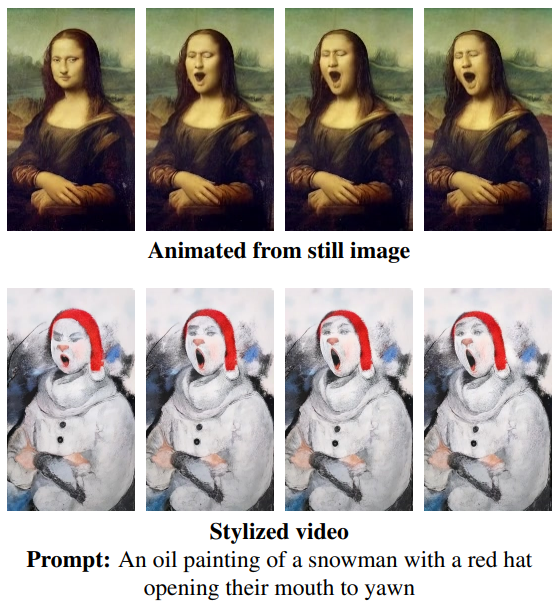

\ Zero-shot video editing and task chaining. With the multi-task pretraining, VideoPoet exhibits task generalization that can be chained together to perform novel tasks. We show the model can apply image-to-video animation followed by video-to-video stylization in Fig. 7. In the Appendix, Fig. 10 shows another example applying video-tovideo outpainting, followed by editing them with additional video-to-video effects. At each stage, the quality of the output is sufficient to remain in-distribution (i.e. teacher forcing) for the next stage without noticeable artifacts. These capabilities can be attributed to our multimodal task design within an LLM transformer framework that allows for modeling multimodal content using a single transformer architecture over a unified vocabulary.

\ Zero-shot video stylization. Stylization results are presented in Appendix A.4 where the structure and text are used as prefixes to guide the language model. Unlike other stylization methods that employ adapter modules such as cross-attention networks (Zhang et al., 2023b) or latent blending (Meng et al., 2021), our approach stylizes videos within an LLM as one of several generative tasks.

\ 3D structure, camera motion, visual styles. Even though we do not add specific training data or losses to encourage 3D consistency, our model can rotate around objects and predict reasonable visualizations of the backside of objects.

\ Additionally, with only a small proportion of input videos and texts describing camera motion, our model can use short text prompts to apply a range of camera motions to image-to-video and text-to-video generations (see Fig. 11).

5.5. LimitationsDespite VideoPoet demonstrating highly competitive performance of LLMs relative to state-of-the-art models, certain limitations are still observed. For example, the RGB frame reconstruction from compressed and quantized tokens place an upper bound on the generative model’s visual fidelity. Second, the per-frame aesthetic biases in static scenes does not match the best baseline. This difference is largely due to a choice of training data, where we focus our training on more natural aesthetics and excluded some sources containing copyrighted images, such as LAION (Schuhmann et al., 2022), which is commonly used in other work. Finally, small objects and fine-grained details, especially when coupled with significant motions, remains difficult within the token-based modeling.

\

:::info This paper is available on arxiv under CC BY 4.0 DEED license.

:::

[1] For image-to-video examples we source images from Wikimedia Commons: https://commons.wikimedia.org/wiki/Main Page

- Home

- About Us

- Write For Us / Submit Content

- Advertising And Affiliates

- Feeds And Syndication

- Contact Us

- Login

- Privacy

All Rights Reserved. Copyright , Central Coast Communications, Inc.