DM Television

LightRAG - Is It a Simple and Efficient Rival to GraphRAG?

Traditional RAG systems work by indexing raw data: the data is simply chunked and stored in a vector DB. Whenever a user submits a query, the system searches the stored chunks and retrieves the relevant ones. If you wish to learn the fundamentals of RAG, I have written a comprehensive intro about it here.
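To make the chunk, store, and retrieve loop concrete, here is a minimal sketch. It assumes a toy bag-of-words "embedding" and an in-memory list in place of a real embedding model and vector DB; the names `chunk_text`, `embed`, and `retrieve` are made up for illustration.

```python
import math
from collections import Counter


def chunk_text(text: str, chunk_size: int = 7) -> list[str]:
    """Split text into chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (an assumption, standing in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, store: list[tuple[str, Counter]], top_k: int = 1) -> list[str]:
    """Score every stored chunk against the query and return the best ones."""
    q = embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


document = (
    "Beekeepers observe bees to monitor hive health. "
    "Honey is harvested by beekeepers in summer."
)
# "Indexing": chunk the raw text and store each chunk with its vector.
store = [(c, embed(c)) for c in chunk_text(document)]
top = retrieve("when is honey harvested", store)
print(top)
```

Note that `retrieve` scores every stored chunk on every query, which is exactly the per-query cost the rest of this article is concerned with.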

As the retrieval step happens for every single query from the user, it is the main bottleneck in naive RAG systems. Would it not be logical to make the retrieval process super efficient? This is the promise of LightRAG.

Why Not GraphRAG?

Before we look at LightRAG, you may ask, “Wait. Do we not have GraphRAG from Microsoft?” Yes, but GraphRAG seems to have a couple of drawbacks.

- Incremental knowledge update. (sec 3.1) GraphRAG first creates a reference to entities and relationships in the entire private dataset. It then does bottom-up clustering that organizes the data hierarchically into semantic clusters. An update to the dataset with new knowledge means that we have to go through the entire process of building the graph! LightRAG on the other hand addresses this by simply appending new knowledge to the existing one. More specifically, it combines new graph nodes and edges with existing ones through a simple union operation.

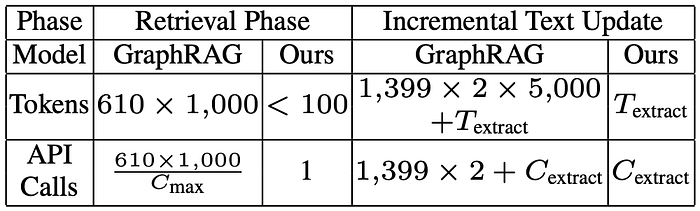

- Computational intensity. As seen from their study, LightRAG significantly reduces the cost of the retrieval phase. What takes 610,000 tokens for GraphRAG takes less than 100 tokens for LightRAG.
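The "simple union operation" from the first point above can be sketched in a few lines. This is only an illustration under my own assumptions: the `KnowledgeGraph` class and its `merge` method are hypothetical names, and the graph is reduced to a set of node names plus a set of (source, relation, target) triples.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeGraph:
    """Hypothetical minimal graph: node names and (source, relation, target) edges."""
    nodes: set[str] = field(default_factory=set)
    edges: set[tuple[str, str, str]] = field(default_factory=set)

    def merge(self, other: "KnowledgeGraph") -> None:
        """Append new knowledge via set union; nothing already indexed is rebuilt."""
        self.nodes |= other.nodes
        self.edges |= other.edges


existing = KnowledgeGraph(
    nodes={"beekeeper", "bees"},
    edges={("beekeeper", "observe", "bees")},
)
# Graph built from a newly arrived document only.
update = KnowledgeGraph(
    nodes={"bees", "hive"},
    edges={("bees", "live_in", "hive")},
)
existing.merge(update)
print(sorted(existing.nodes))
```

Because sets ignore duplicates, the shared node "bees" is not added twice, and only the new document has to be processed.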

So, without further ado, let's dive into LightRAG.

Visual Learning

If you are a visual learner like me, why not check out this YouTube video explaining the idea of LightRAG:

https://youtu.be/5EmRZcJIfnw?si=UPhwRtxUUcZbTfRU&embedable=true

LightRAG

The two main selling points of LightRAG are graph-based indexing and a dual-level retrieval framework. So, let's look into each of them.

Graph-based Indexing

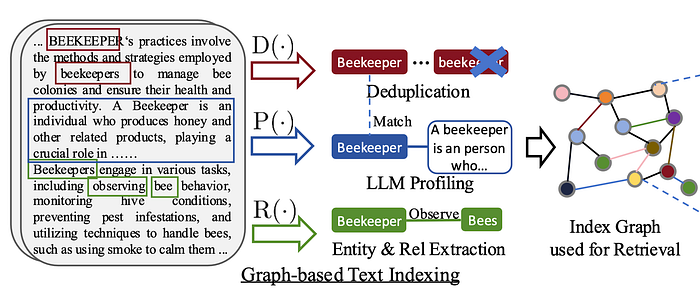

Below are the steps LightRAG follows to incorporate graph-based indexing.

- Entity and Relationship (ER) extraction. ER extraction is shown by R(.) in the above figure. In this step, simple entities are first extracted from a given document. In the above example, “bees” and “beekeeper” are two entities, and they are related by the “observe” relation. As in, a beekeeper observes bees.

- Key-value (KV) pair generation using an LLM. KV pairs are then generated using a simple LLM. This LLM profiling step gives a small note or explanation of what the entity or relation is all about. For example, the LLM explains who a “beekeeper” is in our chosen example. This step is denoted by P(.) in the above figure. Note that this LLM is different from the general-purpose LLM used in the main RAG pipeline.

- Deduplication. Given that the documents have to do with bees, it is quite possible that the entity “beekeeper” was extracted from several documents or chunks. So, we need a deduplication step that keeps just one copy and discards the rest with the same meaning. This is shown by D(.) in the above figure.
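The three steps above, R(.), P(.), and D(.), can be chained as in the rough sketch below. To keep it runnable without a model, I assume a canned lookup table in place of the LLM; `CANNED_EXTRACTIONS`, `extract`, `profile`, `deduplicate`, and `build_index` are all hypothetical names, and matching duplicates by lowercased key is a simplification of "same meaning".

```python
# Hypothetical stand-in for the LLM's answers; a real system would prompt a model.
CANNED_EXTRACTIONS = {
    "A beekeeper observes the bees daily.": [("beekeeper", "observe", "bees")],
    "The beekeeper harvests honey.": [("beekeeper", "harvest", "honey")],
}


def extract(chunk: str) -> list[tuple[str, str, str]]:
    """R(.): return (source, relation, target) triples found in a chunk."""
    return CANNED_EXTRACTIONS.get(chunk, [])


def profile(name: str, chunk: str) -> tuple[str, str]:
    """P(.): build a key-value pair; the value is a short note about the key."""
    return name, f"Mentioned in: {chunk}"


def deduplicate(pairs: list[tuple[str, str]]) -> dict[str, str]:
    """D(.): keep the first pair seen for each key and discard the rest."""
    merged: dict[str, str] = {}
    for key, value in pairs:
        merged.setdefault(key.lower(), value)
    return merged


def build_index(chunks: list[str]) -> dict[str, str]:
    """Run R, then P, then D over all chunks."""
    pairs = []
    for chunk in chunks:
        for source, relation, target in extract(chunk):
            pairs.append(profile(source, chunk))
            pairs.append(profile(target, chunk))
            pairs.append(profile(f"{source}-{relation}-{target}", chunk))
    return deduplicate(pairs)


index = build_index(list(CANNED_EXTRACTIONS))
for key, note in index.items():
    print(key, "->", note)
```

Here “beekeeper” is extracted from both chunks but ends up as a single key in the index, which is the effect the deduplication step is after.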

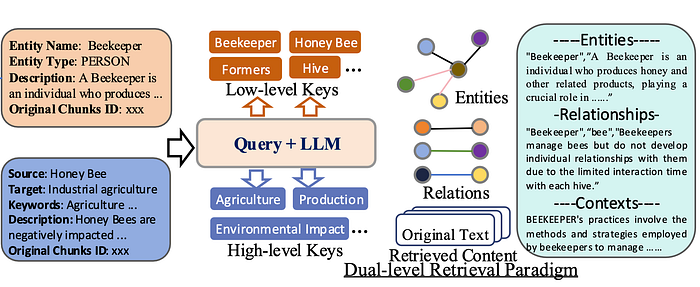

Dual-level Retrieval

A query to a RAG system can be one of two types: specific or abstract. In the same bee example, a specific query could be “How many queen bees can be there in the hive?” An abstract query could be “What are the implications of climate change on honey bees?” To address this diversity, LightRAG employs two retrieval types:

- Low-level retrieval. It simply extracts precise entities and their relationships like bees, observe, and beekeepers.

- High-level retrieval. Employing an LLM, LightRAG aggregates information and summarizes multiple sources of information.
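To get a feel for the difference between the two retrieval types, here is a toy sketch. Everything in it is an assumption for illustration: the `EDGES` list with hand-written theme labels, the substring and word-overlap matching, and the string join that stands in for an LLM-written summary. It is not how LightRAG itself matches queries.

```python
EDGES = [
    # (source, relation, target, themes); the themes are hypothetical topic labels
    ("beekeeper", "observe", "bees", {"beekeeping"}),
    ("queen bee", "lives in", "hive", {"colony"}),
    ("climate change", "threatens", "honey bees", {"environment", "climate"}),
    ("drought", "reduces", "nectar", {"environment", "climate"}),
]


def low_level(query: str) -> list[tuple[str, str, str]]:
    """Return edges whose source or target entity is named in the query."""
    q = query.lower()
    return [(s, r, t) for s, r, t, _ in EDGES if s in q or t in q]


def high_level(query: str) -> str:
    """Gather edges that share a theme with the query, then 'summarize' them."""
    words = set(query.lower().replace("?", "").split())
    hits = [f"{s} {r} {t}" for s, r, t, themes in EDGES if themes & words]
    return "; ".join(hits)  # stand-in for an LLM-written summary


specific = low_level("How many queen bees can be there in the hive?")
abstract = high_level("What are the implications of climate change on honey bees?")
print(specific)
print(abstract)
```

The abstract query also pulls in the drought edge, even though the query never names drought, because the match happens on the shared theme rather than on an entity name.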

Switching to LightRAG does indeed improve execution time. During indexing, the LLM needs to be called just once per chunk to extract entities and their relationships.

Likewise, at query time, we only retrieve entities and relationships, using the same LLM we used for indexing. This is a huge saving on the retrieval overhead and hence on computation. So, we have a “light” RAG at last!

Integrating new knowledge into existing graphs seems to be a seamless exercise. Instead of re-indexing the whole data whenever we have new information, we can simply append new knowledge to the existing graph.

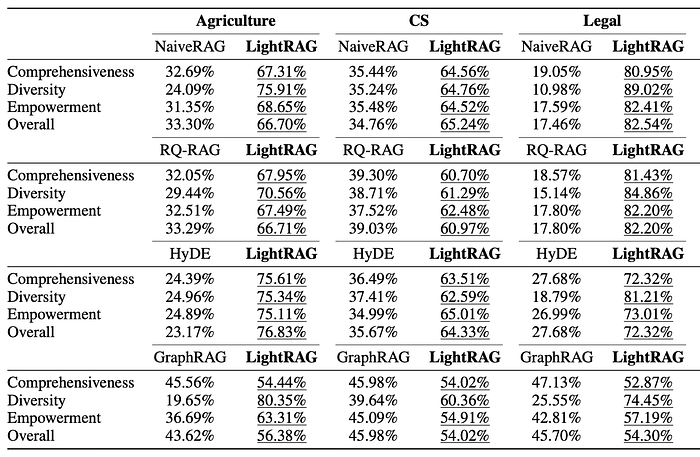

Evaluation

In their evaluation, the authors compare LightRAG against Naive RAG, RQ-RAG, HyDE, and GraphRAG. To keep the comparison fair, they use GPT-4o-mini as the LLM across the board, with a fixed chunk size of 1200 for all datasets. The answers were evaluated for comprehensiveness, diversity, and effectiveness in answering the user (a.k.a. empowerment in the paper).

As we can see from the underlined results, LightRAG beats all of the methods it was compared against.

In general, they draw the following conclusions:

- Using graph-based methods (GraphRAG or LightRAG) improves significantly over the baseline Naive RAG.

- LightRAG produces quite diverse answers powered by the dual-level retrieval paradigm.

- LightRAG handles complex queries better than the baselines.

Though RAG is a fairly recent technique, we are seeing rapid progress in the area. Techniques like LightRAG, which can bring RAG pipelines to cheap commodity hardware, are most welcome. While hardware keeps getting more capable, there is an ever-increasing need to run LLMs and RAG pipelines on compute-constrained hardware in real time.

Would you like to see a hands-on study of LightRAG? Please stay tuned…

