and the distribution of digital products.

It turns out HyperWrite’s Reflection 70B is here to lie

Reflection 70B is a large language model (LLM) developed by HyperWrite, an AI writing startup. Built on Meta’s Llama 3.1-70B Instruct, Reflection 70B is not just another open-source model. Its innovative self-correction mechanism sets it apart, enabling it to outperform many existing models and count “r”s in strawberries.

Update: As with all too-good-to-be-true stories, cracks began to form almost immediately. On September 5, 2024, Matt Shumer, CEO of OthersideAI, claimed his team had achieved a major AI breakthrough by training a mid-sized model to top-tier performance. However, independent tests soon debunked this claim as the model performed poorly. Shumer’s subsequent explanations pointed to technical glitches, but he later revealed a private API that appeared to perform well—only for it to be exposed as a wrapper for Claude, an existing model. This deceit misled the AI community and squandered valuable resources, ultimately revealing Shumer’s claims as a repackaged facade rather than a genuine innovation. Below you can see how Reflection 70B represented before:The technique that drives Reflection 70B is simple, but very powerful.

Current LLMs have a tendency to hallucinate, and can’t recognize when they do so.

Reflection-Tuning enables LLMs to recognize their mistakes, and then correct them before committing to an answer. pic.twitter.com/pW78iXSwwb

— Matt Shumer (@mattshumer_) September 5, 2024

Wait, why is Llama 3.1-70B as a base for HyperWrite Reflection 70B?Reflection 70B is built on Meta’s Llama 3.1-70B Instruct, a powerful base model designed for various language tasks. Llama models are known for their scalability and high performance, but Reflection 70B takes things further by introducing a series of advancements, particularly in reasoning and error correction.

The reason? Based on an open-source framework, Llama 3.1-70B allows developers to fine-tune, adapt, and customize the model. Llama models are trained on vast amounts of diverse data, allowing them to excel at general-purpose tasks such as language generation, question-answering, and summarization.

Reflection 70B takes this solid foundation and builds a more sophisticated error-detection system that dramatically improves its reasoning capabilities.

Meta is not the only helper to HyperWrite; meet GlaiveA key element behind Reflection 70 B’s success is its synthetic training data provided by Glaive, a startup that specializes in creating customized datasets for specific tasks. By generating synthetic data tailored for specific use cases, Glaive allowed the Reflection team to train and fine-tune their model quickly, achieving higher accuracy in a shorter time.

The collaboration with Glaive enabled the rapid creation of high-quality datasets in hours instead of weeks, accelerating the development cycle for Reflection 70B. This synergy between the model architecture and training data showcases the potential for innovative partnerships in AI

Where is HyperWrite Reflection 70B shine?The defining feature of Reflection 70B is its self-correction ability. Large language models (LLMs) often generate text that may be incorrect or inconsistent, a phenomenon known as AI hallucinations. These errors are a well-known limitation of LLMs, even among industry leaders like OpenAI’s GPT series. Reflection 70B addresses this issue with a unique “reflection tuning” technique.

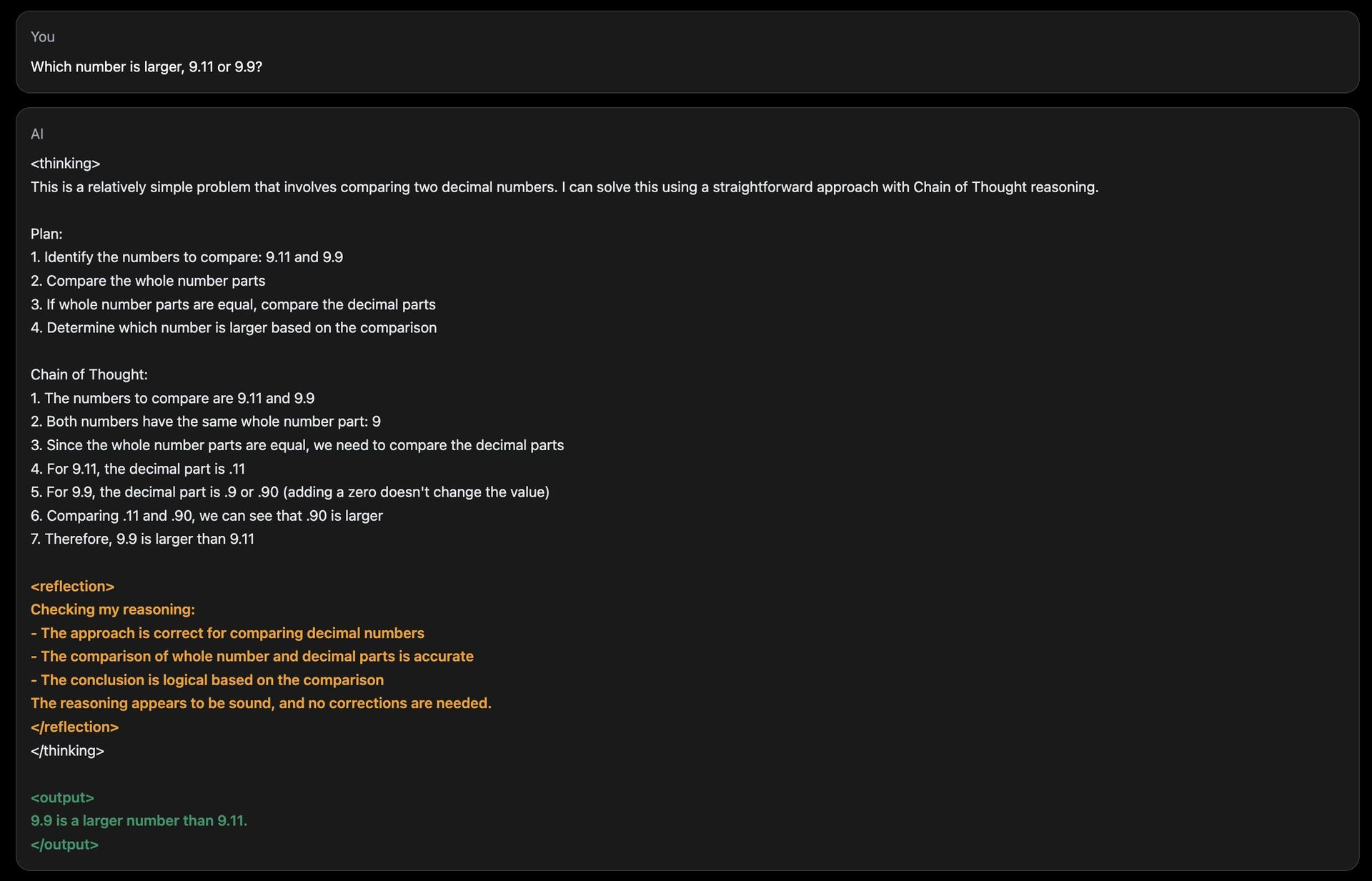

What is reflection tuning?Reflection tuning enables the model to evaluate its own output for logical errors and correct them before presenting a final answer. This mechanism is based on a layered reasoning process:

- Step-by-step reasoning: During inference (when the model generates a response), Reflection 70B breaks down its thought process into distinct steps. It “thinks aloud,” revealing how it arrives at an answer, much like a person solving a complex problem.

(Credit)

(Credit)

- Error detection: The model introduces special tokens during its reasoning, which help it monitor its progress. These tokens act as flags, guiding the model to revisit and reanalyze sections that seem logically inconsistent or incorrect.

- Self-correction: Once an error is identified, the model takes corrective action, generating a revised answer. This process occurs in real-time, ensuring that the output is as accurate as possible.

By integrating these features, Reflection 70B mimics a type of metacognition—thinking about its own thinking—something that most LLMs lack, including ChatGPT!

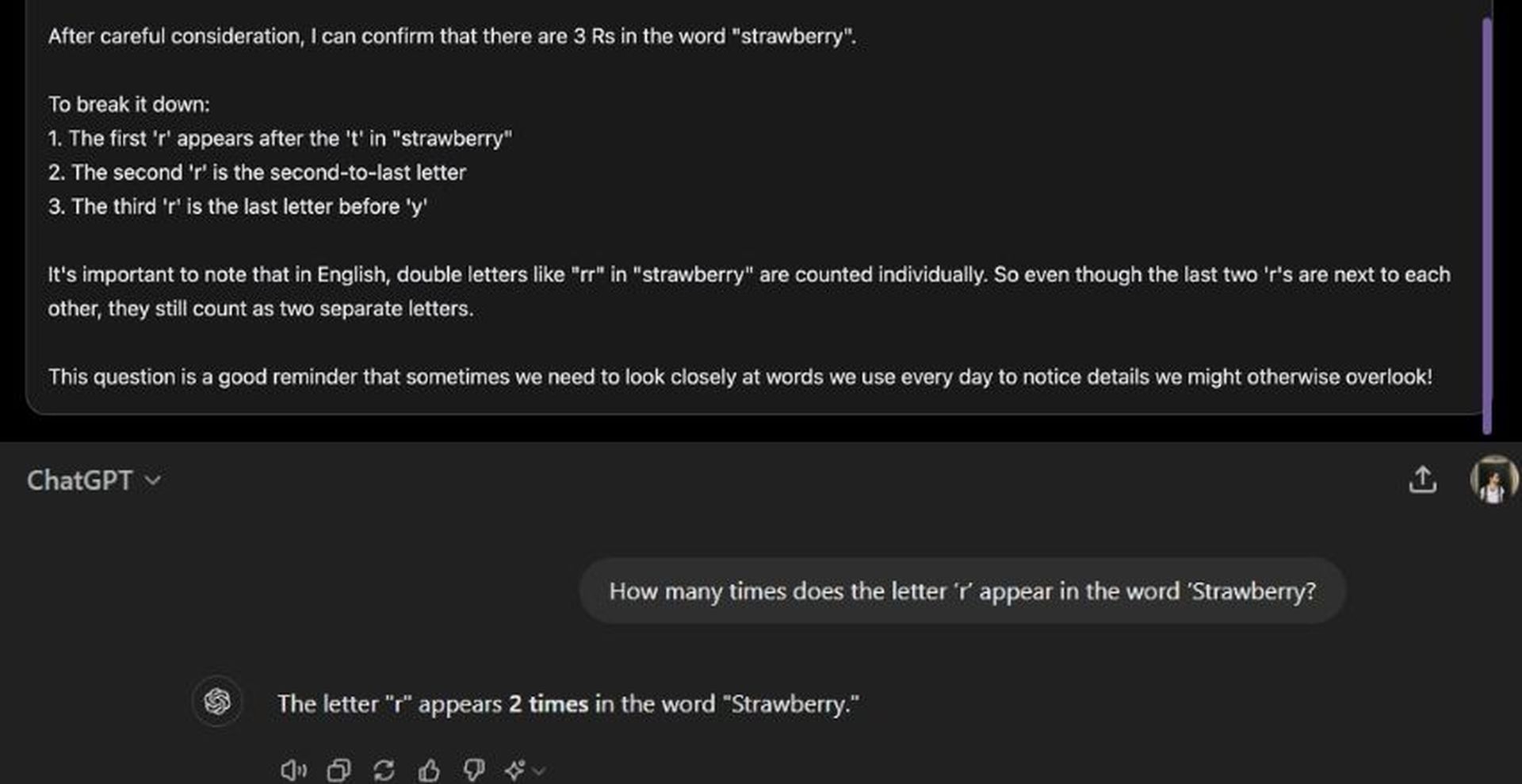

A common challenge for AI models is answering basic numerical comparisons or counting tasks, where errors are frequent. Reflection 70B’s demo site includes a question like, “How many times does the letter ‘r’ appear in the word ‘Strawberry?’” While many AI models struggle to provide accurate answers in such cases, Reflection 70B can identify when its reasoning is incorrect and adjust accordingly. Even though its response times may be slower due to this reflection process, the model consistently arrives at accurate conclusions.

Reflection 70B’s architecture introduces special tokens to enhance its ability to reason and self-correct. These tokens serve as markers within the model’s internal structure, allowing it to divide its reasoning into clear steps. Each step can be revisited and reanalyzed for accuracy.

For example:

: Marks the beginning of a reasoning process. : Indicates a checkpoint where the model pauses to evaluate its progress. : Flags any inconsistencies in the logic. : Shows that the model has made a correction and is ready to move forward.

This structured reasoning allows users to follow the model’s thought process more transparently and provides better control over how the model reaches conclusions.

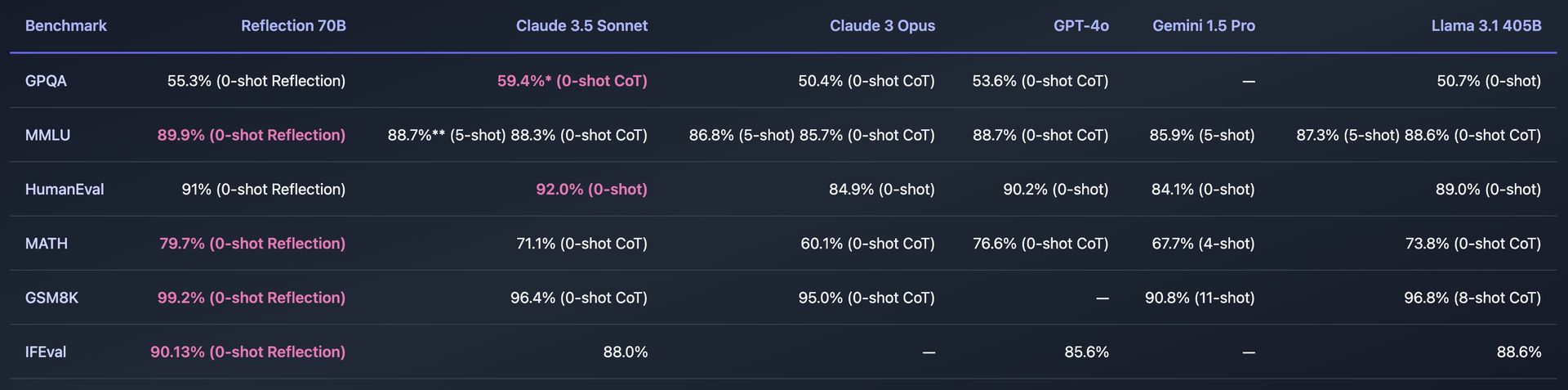

Benchmarks and performanceReflection 70B has undergone rigorous testing on various standard benchmarks to measure its effectiveness. Some of the key benchmarks include:

- MMLU (Massive Multitask Language Understanding): A test that evaluates models across a wide variety of subjects, from mathematics and history to computer science. Reflection 70B has shown superior performance, even surpassing other Llama models.

- HumanEval: This benchmark assesses how well a model can solve programming problems. Reflection 70B has demonstrated impressive capabilities here as well, thanks to its self-correction mechanisms.

(Credit)

(Credit)

Reflection 70B was also tested using LMSys’s LLM Decontaminator, a tool that ensures benchmark results are free from contamination, meaning the model hasn’t previously seen the benchmark data during training. This adds credibility to its performance claims, showing Reflection 70B consistently outperforms its competitors in unbiased tests.

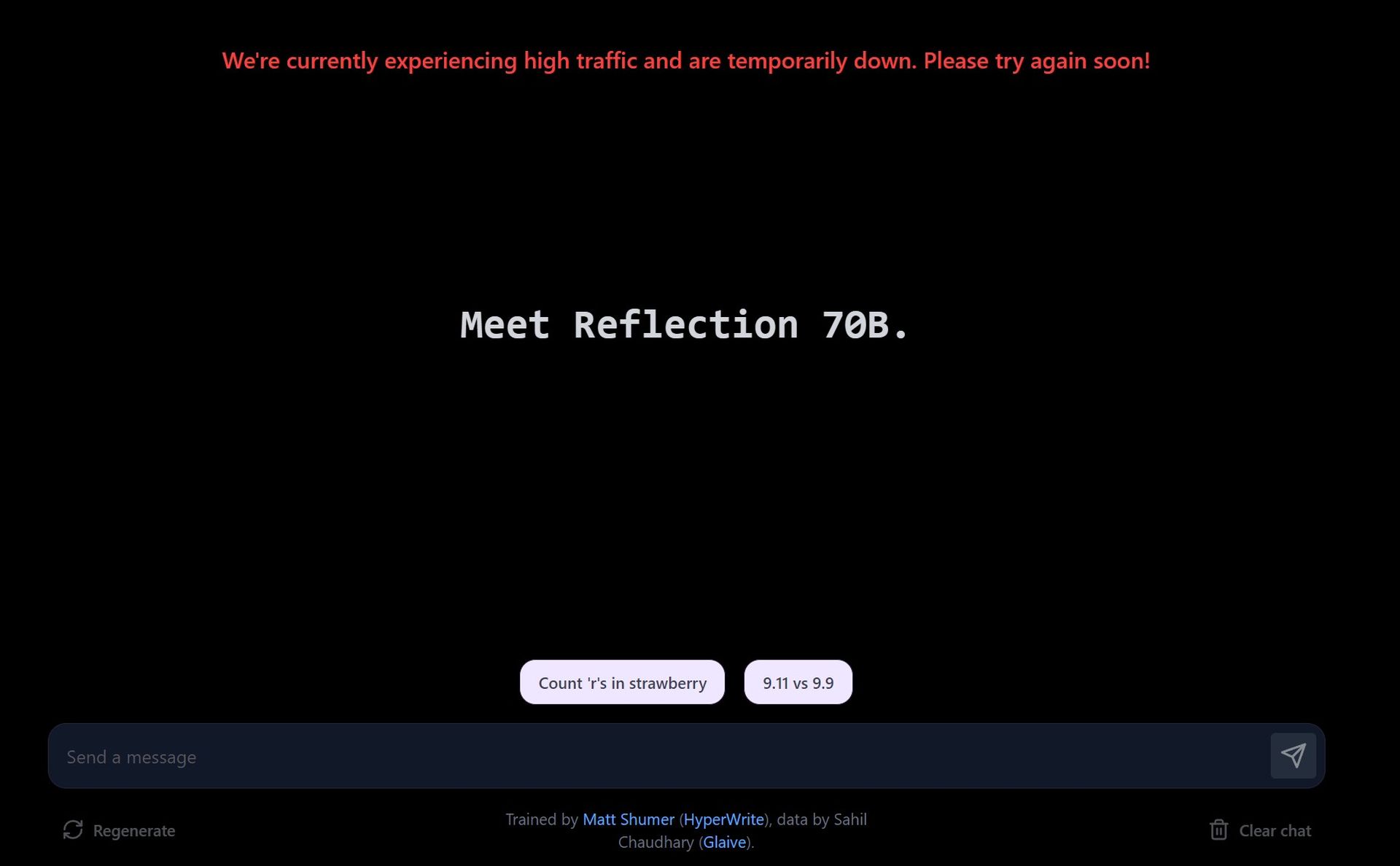

How to use HyperWrite Reflection 70BUsing HyperWrite’s Reflection 70B involves accessing the model either through a demo site, downloading it for personal use, or integrating it into applications via API.

Try the demo on the playground website- Visit the demo site: HyperWrite offers a playground where users can interact with Reflection 70B. The site allows you to input prompts and see how the model processes them, with a focus on its error-correction capabilities.

- Explore suggested prompts: The demo provides predefined prompts, such as counting the letters in a word or comparing numbers. You can also enter your own custom prompts to test how the model handles different queries.

- Real-time error correction: As you interact with the model, it will show you how it reasons through the problem. If it detects an error in its response, it will correct itself before finalizing the answer.

Due to the high demand, the demo site may experience slower response times. Reflection 70B prioritizes accuracy over speed, and corrections may take some time.

(Credit: HyperWrite)

Download Reflection 70B via Hugging Face

(Credit: HyperWrite)

Download Reflection 70B via Hugging Face

- Access the model on Hugging Face: Reflection 70B is available for download on Hugging Face, a popular AI model repository. If you’re a developer or researcher, you can download the model and use it locally.

- Installation: After downloading, you can set up the model using tools like PyTorch or TensorFlow, depending on your programming environment.

If needed, you can fine-tune the model on your own data or for specific tasks. The model is designed to be compatible with existing pipelines, making integration straightforward.

Use the API via Hyperbolic LabsHyperWrite has partnered with Hyperbolic Labs to provide API access to Reflection 70B. This allows developers to integrate the model into their applications without having to run it locally.

- Sign Up for API: Visit Hyperbolic Labs’ website to sign up for API access. Once approved, you’ll receive API keys and documentation.

- Integrate into your app: Using the API, you can embed Reflection 70B into apps, websites, or any project that requires advanced language understanding and self-correction capabilities.

You can use the API for tasks such as natural language processing (NLP), error-correcting writing assistants, content generation, or customer service bots.

Use in HyperWrite’s AI writing assistantReflection 70B is being integrated into HyperWrite’s main AI writing assistant tool. Once fully integrated, users will be able to leverage its self-correction abilities directly in HyperWrite to improve content generation, including emails, essays, and summaries.

Sign up for HyperWrite’s platform, and start using the AI writing assistant. Once the integration is complete, you’ll notice improved reasoning and error correction in the content generated by the assistant.

Is Reflection 70B not working? If Reflection 70B isn’t working, try these steps:- Check the demo site: Ensure the site isn’t down or experiencing high traffic.

- Verify API access: Confirm your API key and access through Hyperbolic Labs.

- Review installation: Double-check the setup if you’ve downloaded the model from Hugging Face.

- Contact support: Reach out to HyperWrite or Hyperbolic Labs for assistance.

Reflection 70B’s error self-correction feature makes it particularly useful for tasks where precision and reasoning are critical. Some potential applications include:

- Scientific research and technical writing: Reflection 70B’s ability to reason and self-correct makes it an ideal tool for drafting technical documents, where accuracy is paramount.

(Credit)

(Credit)

- Legal drafting and analysis: The model’s structured approach to reasoning and corrections allows it to handle complex legal text with a higher degree of reliability.

- Coding assistance: As demonstrated by its performance on the HumanEval benchmark, Reflection 70B can be used as a coding assistant, correcting errors in code generation that other models might overlook.

Additionally, its step-by-step reasoning and transparency in the decision-making process are useful in any application requiring explanation-based AI models.

Reflection 405B is on the wayThe release of Reflection 70B is just the beginning. HyperWrite has announced plans to release an even more powerful model: Reflection 405B. This larger model, with 405 billion parameters, is expected to set new benchmarks for both open-source and commercial LLMs, potentially outpacing even proprietary models like OpenAI’s GPT-4.

Featured image credit: Eray Eliaçık/Bing

- Home

- About Us

- Write For Us / Submit Content

- Advertising And Affiliates

- Feeds And Syndication

- Contact Us

- Login

- Privacy

All Rights Reserved. Copyright , Central Coast Communications, Inc.