DM Television

Instella is here: AMD’s 3B-parameter model takes on Llama and Gemma

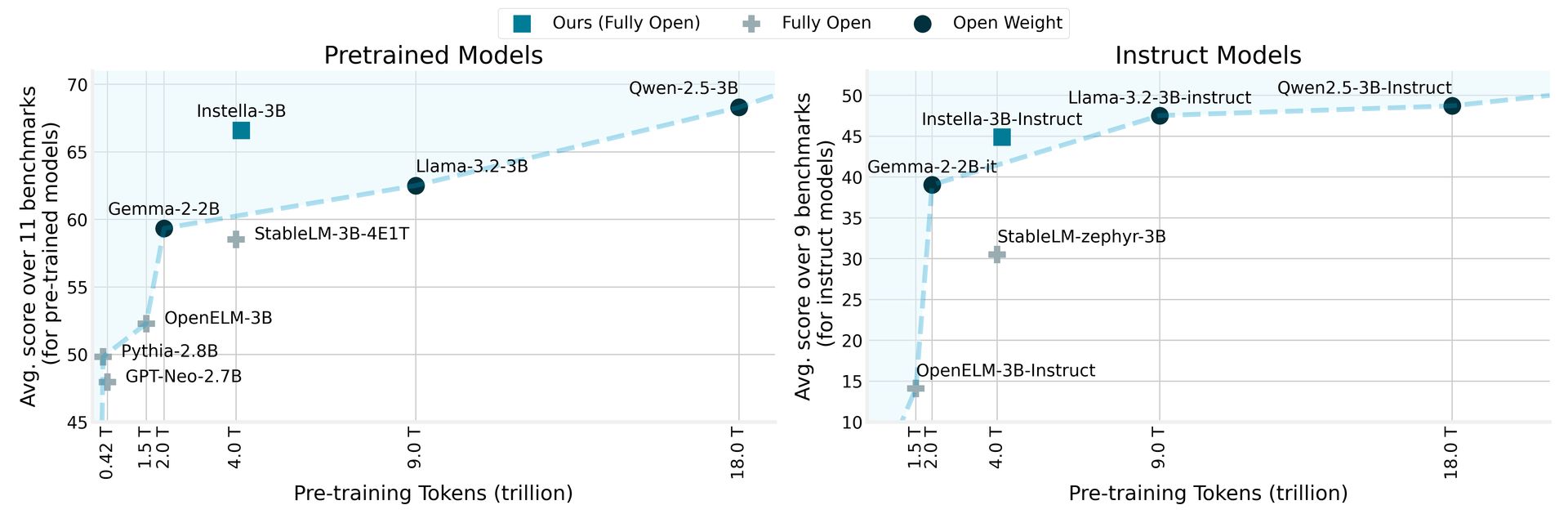

AMD has unveiled Instella, a family of fully open-source language models with 3 billion parameters, trained from scratch on AMD Instinct™ MI300X GPUs. According to AMD, Instella models outperform existing fully open models of similar size and are competitive with leading open-weight models such as Llama-3.2-3B, Gemma-2-2B, and Qwen-2.5-3B, along with their instruction-tuned versions.

AMD unveils Instella: Open-source language models outperforming rivals

Instella is an autoregressive transformer with 36 decoder layers and 32 attention heads, and it supports sequences of up to 4,096 tokens. The model uses the OLMo tokenizer with a vocabulary of approximately 50,000 tokens, which suits it to generating and interpreting text across various domains.

The training procedure for Instella showcases AMD’s hardware and software working together. The new model builds on AMD’s earlier 1-billion-parameter models, scaling up from 64 AMD Instinct MI250 GPUs and 1.3 trillion training tokens to 128 Instinct MI300X GPUs and 4.15 trillion tokens for the current 3-billion-parameter model.
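To put the scale-up in perspective, the figures above can be turned into simple ratios. The following is a minimal sketch, not anything published by AMD: the `TrainingRun` class and its field names are hypothetical, and the parameter counts are the nominal "1B" and "3B" values rather than exact model sizes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingRun:
    """Hypothetical container for the figures quoted in the article."""
    name: str
    gpus: int        # number of GPUs used for training
    tokens: float    # total training tokens
    params: float    # nominal parameter count (assumption: exactly 1B / 3B)

    @property
    def tokens_per_param(self) -> float:
        return self.tokens / self.params


previous = TrainingRun("1B models (MI250)", gpus=64, tokens=1.3e12, params=1e9)
instella = TrainingRun("Instella 3B (MI300X)", gpus=128, tokens=4.15e12, params=3e9)

gpu_ratio = instella.gpus / previous.gpus
token_ratio = instella.tokens / previous.tokens

print(f"GPU count grew {gpu_ratio:.1f}x")
print(f"Training tokens grew {token_ratio:.2f}x")
for run in (previous, instella):
    print(f"{run.name}: about {run.tokens_per_param:.0f} tokens per parameter")
```

Under these nominal numbers, the GPU count doubles and the token budget roughly triples, so the number of training tokens per parameter stays in the same range across the two generations.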

Image: AMD

Comparing Instella with prior models, AMD reports that it not only surpasses existing fully open models but also performs competitively against state-of-the-art open-weight models. The release aligns with AMD’s stated commitment to making advanced technology more accessible and fostering collaboration and innovation within the AI community.

Instella model phases and training data

This release includes several versions of the Instella models, each representing a different training stage:

| Model | Stage | Training Data (Tokens) | Description |
|---|---|---|---|
| Instella-3B-Stage1 | Pre-training (Stage 1) | 4.065 Trillion | First stage pre-training to develop proficiency in natural language. |
| Instella-3B | Pre-training (Stage 2) | 57.575 Billion | Second stage pre-training to enhance problem-solving capabilities. |
| Instella-3B-SFT | SFT | 8.902 Billion (x3 epochs) | Supervised Fine-tuning (SFT) to enable instruction-following capabilities. |
| Instella-3B-Instruct | DPO | 760 Million | Alignment to human preferences and enhancement of chat capabilities with direct preference optimization (DPO). |

In the multi-stage training pipeline, the first pre-training stage used 4.065 trillion tokens from diverse datasets, establishing foundational language understanding. The subsequent training on an additional 57.575 billion tokens further enhanced the model’s performance across varied tasks and domains.

During supervised fine-tuning, Instella-3B-SFT was trained on 8.9 billion tokens for three epochs, improving its ability to follow instructions and respond interactively. The final model, Instella-3B-Instruct, underwent alignment training with Direct Preference Optimization (DPO) on 0.76 billion tokens to bring its outputs closer to human preferences.
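The per-stage token counts can be added up as a quick sanity check. This is a rough sketch under one assumption that the article does not state explicitly: that the SFT figure is a per-epoch count seen three times, and that the overall total is the plain sum across stages. The `STAGES` list and `tokens_seen` helper are illustrative names only.

```python
# Per-stage figures as quoted in the article.
# Each entry: (model, stage, tokens per epoch, epochs)
STAGES = [
    ("Instella-3B-Stage1", "Pre-training (Stage 1)", 4.065e12, 1),
    ("Instella-3B", "Pre-training (Stage 2)", 57.575e9, 1),
    ("Instella-3B-SFT", "SFT", 8.902e9, 3),  # assumption: 3 passes over 8.902B tokens
    ("Instella-3B-Instruct", "DPO", 760e6, 1),
]


def tokens_seen(tokens_per_epoch: float, epochs: int) -> float:
    """Tokens processed in a stage, counting repeated epochs."""
    return tokens_per_epoch * epochs


total = sum(tokens_seen(t, e) for _, _, t, e in STAGES)

for model, stage, t, e in STAGES:
    seen = tokens_seen(t, e)
    share = seen / total
    print(f"{model:<22} {stage:<24} {seen / 1e9:>10.3f}B  {share:7.3%}")

print(f"Total: {total / 1e12:.3f} trillion tokens")
```

Under this reading, the sum comes to about 4.15 trillion tokens, which matches the overall training total quoted for the 3-billion-parameter model, with the first pre-training stage accounting for roughly 98% of it.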

AMD has made all artifacts associated with Instella models fully open-source, including model weights, training configurations, datasets, and code, fostering collaboration and innovation in the AI community. These resources can be accessed via Hugging Face model cards and GitHub repositories.

Featured image credit: AMD
