
Improving Real-Time Inference with Anchor Tokens

:::info Authors:

(1) Jianhui Pang, University of Macau (work done while Jianhui Pang and Fanghua Ye were interning at Tencent AI Lab);

(2) Fanghua Ye, University College London (work done while Jianhui Pang and Fanghua Ye were interning at Tencent AI Lab);

(3) Derek F. Wong, University of Macau;

(4) Longyue Wang, Tencent AI Lab (corresponding author).

:::

Table of Links

3 Anchor-based Large Language Models

3.2 Anchor-based Self-Attention Networks

4 Experiments and 4.1 Our Implementation

4.2 Data and Training Procedure

7 Conclusion, Limitations, Ethics Statement, and References

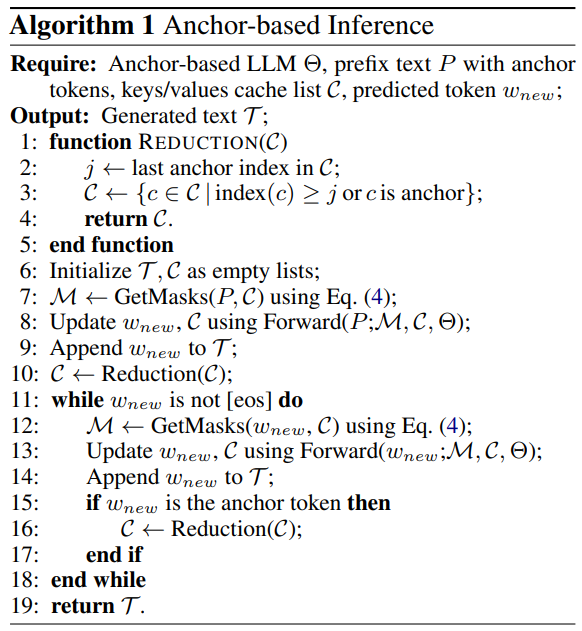

3.3 Anchor-based Inference

By training the model to compress information into the anchor token of a natural language sequence, we can optimize inference by modifying the keys/values caching mechanism. Specifically, when the model encounters an anchor token during inference, that token already condenses the semantic information of the preceding tokens in the current sequence, so the model can shrink the keys/values caches by deleting the cached entries of the non-anchor tokens within that sequence.
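The reduction step described here can be illustrated with a minimal sketch, assuming a toy single-layer cache stored as parallel Python lists. The function name `reduce_kv_cache`, the `ANCHOR_ID` value, and the string placeholders standing in for key and value tensors are hypothetical and not taken from the paper. The sketch also assumes that the anchor token's own cache entry is retained while non-anchor entries are dropped.

```python
ANCHOR_ID = 0  # hypothetical id chosen for the anchor token


def reduce_kv_cache(token_ids, keys, values, anchor_id=ANCHOR_ID):
    """Return a cache that keeps only the entries of anchor tokens.

    token_ids, keys, and values are parallel lists with one entry
    per cached token. Entries of non-anchor tokens are discarded.
    """
    keep = [i for i, tok in enumerate(token_ids) if tok == anchor_id]
    return (
        [token_ids[i] for i in keep],
        [keys[i] for i in keep],
        [values[i] for i in keep],
    )


# One sequence of three ordinary tokens that ends with an anchor token.
token_ids = [11, 12, 13, ANCHOR_ID]
keys = ["k11", "k12", "k13", "k_anchor"]
values = ["v11", "v12", "v13", "v_anchor"]

reduced_ids, reduced_keys, reduced_values = reduce_kv_cache(token_ids, keys, values)
print(len(token_ids), "cached entries before,", len(reduced_ids), "after")
```

In a real model the same index selection would be applied to the key and value tensors of every layer and head; the lists here only stand in for that sequence dimension.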

We introduce the inference method in Algorithm 1. The function “REDUCTION” in Line 1 removes keys/values caches when the model processes prefix texts in Line 10 or generates an anchor token while predicting the next token in Line 16. This approach reduces the keys/values caches for both prefix tokens and generated outputs during real-time inference.
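The two places where reduction is triggered, prefix processing and next-token generation, can be sketched as a toy loop. This is an illustration under stated assumptions rather than a reproduction of Algorithm 1: `generate`, `reduction`, `toy_next_token`, and `ANCHOR_ID` are hypothetical names, the cache holds placeholder strings instead of tensors, and the stand-in "model" simply emits an anchor token after every two ordinary tokens.

```python
ANCHOR_ID = 0  # hypothetical id chosen for the anchor token


def reduction(cache, anchor_id=ANCHOR_ID):
    """Keep only the cache entries that belong to anchor tokens."""
    return [entry for entry in cache if entry[0] == anchor_id]


def toy_next_token(cache, generated):
    """Stand-in for a model forward pass (assumption, not a real model)."""
    return ANCHOR_ID if len(generated) % 3 == 2 else 7


def generate(prefix_ids, next_token_fn, max_new_tokens, anchor_id=ANCHOR_ID):
    cache = []  # list of (token_id, placeholder_kv) pairs
    peak = 0    # largest cache size observed before any reduction

    # Prefix phase: cache each prefix token, reduce at every anchor.
    for tok in prefix_ids:
        cache.append((tok, f"kv({tok})"))
        peak = max(peak, len(cache))
        if tok == anchor_id:
            cache = reduction(cache, anchor_id)

    # Generation phase: reduce whenever the new token is an anchor.
    generated = []
    for _ in range(max_new_tokens):
        tok = next_token_fn(cache, generated)
        generated.append(tok)
        cache.append((tok, f"kv({tok})"))
        peak = max(peak, len(cache))
        if tok == anchor_id:
            cache = reduction(cache, anchor_id)

    return generated, cache, peak


prefix = [4, 5, ANCHOR_ID, 6, 7, 8, ANCHOR_ID]
generated, cache, peak = generate(prefix, toy_next_token, max_new_tokens=6)
total_tokens = len(prefix) + len(generated)
print(f"tokens seen: {total_tokens}, peak cache size: {peak}, final cache size: {len(cache)}")
```

Tracking the peak cache size alongside the total number of tokens seen makes the saving visible: the cache grows only within the current sequence and collapses back to the anchor entries each time an anchor token is processed.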

:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::
