
DM Television

How to Transform Static Text-to-Image Models into Dynamic Animation Generators

:::info Authors:

(1) Yuwei Guo, The Chinese University of Hong Kong;

(2) Ceyuan Yang, Shanghai Artificial Intelligence Laboratory (Corresponding Author);

(3) Anyi Rao, Stanford University;

(4) Zhengyang Liang, Shanghai Artificial Intelligence Laboratory;

(5) Yaohui Wang, Shanghai Artificial Intelligence Laboratory;

(6) Yu Qiao, Shanghai Artificial Intelligence Laboratory;

(7) Maneesh Agrawala, Stanford University;

(8) Dahua Lin, Shanghai Artificial Intelligence Laboratory;

(9) Bo Dai, The Chinese University of Hong Kong.

:::

Table of Links

4 AnimateDiff

4.1 Alleviate Negative Effects from Training Data with Domain Adapter

4.2 Learn Motion Priors with Motion Module

4.3 Adapt to New Motion Patterns with MotionLoRA

5 Experiments and 5.1 Qualitative Results

8 Reproducibility Statement, Acknowledgement and References


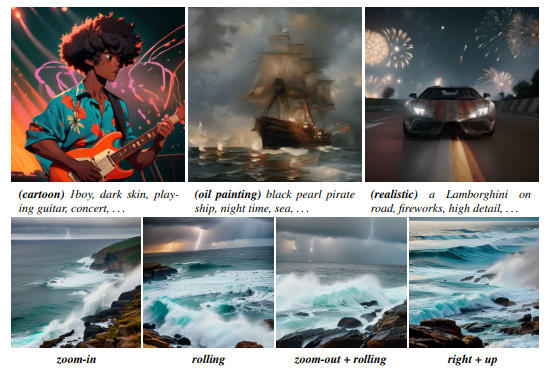

With the advance of text-to-image (T2I) diffusion models (e.g., Stable Diffusion) and corresponding personalization techniques such as DreamBooth and LoRA, anyone can turn their imagination into high-quality images at an affordable cost. However, adding motion dynamics to existing high-quality personalized T2Is and enabling them to generate animations remains an open challenge. In this paper, we present AnimateDiff, a practical framework for animating personalized T2I models without requiring model-specific tuning. At the core of our framework is a plug-and-play motion module that can be trained once and seamlessly integrated into any personalized T2I originating from the same base T2I. Through our proposed training strategy, the motion module effectively learns transferable motion priors from real-world videos. Once trained, the motion module can be inserted into a personalized T2I model to form a personalized animation generator. We further propose MotionLoRA, a lightweight fine-tuning technique for AnimateDiff that enables a pre-trained motion module to adapt to new motion patterns, such as different shot types, at a low training and data collection cost. We evaluate AnimateDiff and MotionLoRA on several public representative personalized T2I models collected from the community. The results demonstrate that our approaches help these models generate temporally smooth animation clips while preserving visual quality and motion diversity. Code and pre-trained weights are available at https://github.com/guoyww/AnimateDiff.

1 INTRODUCTION

Text-to-image (T2I) diffusion models (Nichol et al., 2021; Ramesh et al., 2022; Saharia et al., 2022; Rombach et al., 2022) have greatly empowered artists and amateurs to create visual content using text prompts. To further stimulate the creativity of existing T2I models, lightweight personalization methods, such as DreamBooth (Ruiz et al., 2023) and LoRA (Hu et al., 2021), have been proposed. These methods enable customized fine-tuning on small datasets using consumer-grade hardware such as a laptop with an RTX3080, thereby allowing users to adapt a base T2I model to new domains and improve visual quality at a relatively low cost. Consequently, a large community of AI artists and amateurs has contributed numerous personalized models on model-sharing platforms such as Civitai (2022) and Hugging Face (2022). While these personalized T2I models can generate remarkable visual quality, their outputs are limited to static images. On the other hand, the ability to generate animations is more desirable in real-world production, such as in the movie and cartoon industries. In this work, we aim to directly transform existing high-quality personalized T2I models into animation generators without requiring model-specific fine-tuning, which is often impractical in terms of computation and data collection costs for amateur users.

We present AnimateDiff, an effective pipeline for animating personalized T2Is while preserving their visual quality and domain knowledge. The core of AnimateDiff is an approach for training a plug-and-play motion module that learns reasonable motion priors from video datasets, such as WebVid-10M (Bain et al., 2021). At inference time, the trained motion module can be directly integrated into personalized T2Is to produce smooth and visually appealing animations without requiring specific tuning. The training of the motion module in AnimateDiff consists of three stages. First, we fine-tune a domain adapter on the base T2I to align with the visual distribution of the target video dataset. This preliminary step ensures that the motion module concentrates on learning motion priors rather than pixel-level details from the training videos. Second, we inflate the base T2I together with the domain adapter and introduce a newly initialized motion module for motion modeling. We then optimize this module on videos while keeping the domain adapter and base T2I weights fixed. By doing so, the motion module learns generalized motion priors and can, via module insertion, enable other personalized T2Is to generate smooth and appealing animations aligned with their personalized domains. The third stage of AnimateDiff, dubbed MotionLoRA, adapts the pre-trained motion module to specific motion patterns with a small number of reference videos and training iterations. We achieve this by fine-tuning the motion module with Low-Rank Adaptation (LoRA) (Hu et al., 2021). Remarkably, adapting to a new motion pattern can be achieved with as few as 50 reference videos. Moreover, a MotionLoRA model requires only approximately 30M of additional storage space, further enhancing the efficiency of model sharing. This efficiency is particularly valuable for users who cannot bear the expensive costs of pre-training but want to fine-tune the motion module for specific effects.
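To make the storage argument for low-rank adaptation more concrete, here is a minimal pure-Python sketch of a LoRA-style update on a single frozen weight matrix. It is not the AnimateDiff or MotionLoRA implementation: the class name `LoRALinear`, the helper `lora_param_count`, the rank, the `alpha` scale, and the layer sizes are all illustrative assumptions. The only points it aims to show are that the pre-trained weight stays untouched and that the trainable part grows with the rank rather than with the full matrix size.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_param_count(d_out, d_in, rank):
    """Parameters added by one LoRA pair: B is d_out x rank, A is rank x d_in."""
    return rank * (d_in + d_out)


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # pre-trained weight, kept frozen
        self.scale = alpha / rank
        # A starts small and random, B starts at zero,
        # so the adapted layer equals the frozen layer at initialization.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def effective_weight(self):
        """Return W + scale * (B @ A) without modifying W."""
        delta = matmul(self.B, self.A)
        return [
            [w + self.scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(self.weight, delta)
        ]

    def forward(self, x):
        """Apply the adapted layer to a single input vector x."""
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.effective_weight()]
```

For a hypothetical 320 x 320 projection with rank 4, `lora_param_count(320, 320, 4)` gives 2,560 trainable values against 102,400 in the full matrix, which is the kind of saving that keeps an adapter file small. Only `A` and `B` would need to be shared; the frozen weight is already present in the pre-trained module.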

We evaluate the performance of AnimateDiff and MotionLoRA on a diverse set of personalized T2I models collected from model-sharing platforms (Civitai, 2022; Hugging Face, 2022). These models encompass a wide spectrum of domains, ranging from 2D cartoons to realistic photographs, thereby forming a comprehensive benchmark for our evaluation. The results of our experiments demonstrate promising outcomes. In practice, we also found that a Transformer (Vaswani et al., 2017) architecture along the temporal axis is adequate for capturing appropriate motion priors. We also demonstrate that our motion module can be seamlessly integrated with existing content-controlling approaches such as ControlNet (Zhang et al., 2023; Mou et al., 2023) without requiring additional training, enabling controllable animation generation with AnimateDiff.
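The phrase "a Transformer architecture along the temporal axis" can be illustrated with a small sketch of self-attention applied across frames only. This is a simplified, pure-Python illustration and not the paper's motion module: the function name `temporal_self_attention` is hypothetical, there is a single head, and the query, key, and value projections are taken to be the identity purely for brevity. What it shows is that each spatial position attends only to the same position in other frames, so the spatial axis behaves like a batch axis.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_self_attention(video):
    """Single-head self-attention over the frame axis.

    video[f][p] is the feature vector of spatial position p in frame f.
    Identity query/key/value projections are an assumption made for brevity.
    """
    num_frames = len(video)
    num_positions = len(video[0])
    dim = len(video[0][0])
    out = [[None] * num_positions for _ in range(num_frames)]
    for p in range(num_positions):
        # Sequence of tokens over time for one fixed spatial position.
        tokens = [video[f][p] for f in range(num_frames)]
        for f, query in enumerate(tokens):
            # Scaled dot-product scores between this frame and every frame.
            scores = [
                sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                for key in tokens
            ]
            weights = softmax(scores)
            # Weighted average of the per-frame features.
            out[f][p] = [
                sum(w * tok[c] for w, tok in zip(weights, tokens))
                for c in range(dim)
            ]
    return out
```

Because the loop over `p` never mixes positions, changing the features at one spatial location leaves the output at every other location unchanged. In a tensor framework the same effect is usually obtained by folding the spatial dimensions into the batch dimension before calling a standard attention layer.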

In summary, (1) we present AnimateDiff, a practical pipeline that enables animation generation for any personalized T2I without specific fine-tuning; (2) we verify that a Transformer architecture is adequate for modeling motion priors, which provides valuable insights for video generation; (3) we propose MotionLoRA, a lightweight fine-tuning technique to adapt pre-trained motion modules to new motion patterns; (4) we comprehensively evaluate our approach with representative community models and compare it with both academic baselines and commercial tools such as Gen2 (2023) and Pika Labs (2023). Furthermore, we showcase its compatibility with existing works for controllable generation.


:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::
