
DM Television

Ducho’s Big Bet: A Unified Future for Multimodal AI

:::info Authors:

(1) Daniele Malitesta, Politecnico di Bari, Italy ([email protected]), corresponding author;

(2) Giuseppe Gassi, Politecnico di Bari, Italy ([email protected]), corresponding author;

(3) Claudio Pomo, Politecnico di Bari, Italy ([email protected]);

(4) Tommaso Di Noia, Politecnico di Bari, Italy ([email protected]).

:::

Abstract and 1 Introduction and Motivation

2 Architecture and 2.1 Dataset

5 Demonstrations and 5.1 Demo 1: visual + textual items features

5.2 Demo 2: audio + textual items features

5.3 Demo 3: textual items/interactions features

6 Conclusion and Future Work, Acknowledgments and References

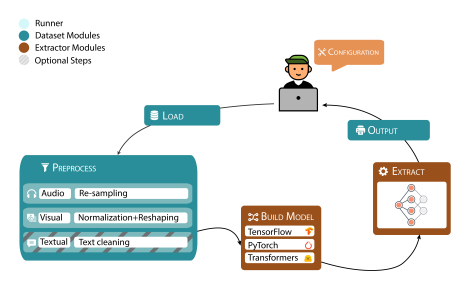

3 EXTRACTION PIPELINE

The overall multimodal extraction pipeline is represented in Figure 1. The Dataset module handles the load and preprocess steps, assuming that the user provides the input data and a YAML configuration file (overridable from the command line) to customize the extraction. Then, the Extractor module builds the extraction model(s) by setting the backends and output layer(s). Finally, after the multimodal feature extraction, the features are saved to the output path in numpy format (the Dataset module again controls this last phase). As previously described, the whole process is orchestrated by the Runner module.
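To make the flow of the three modules concrete, the following is a minimal sketch of the load, preprocess, extract, and save steps. It is not Ducho's actual code: the class names only mirror the module names used above, and every method, parameter, and the toy "model" (a fixed random projection) is a hypothetical stand-in chosen for illustration. The only real library call assumed is NumPy's `np.save`, which writes an array in `.npy` format.

```python
from pathlib import Path

import numpy as np


class Dataset:
    """Hypothetical stand-in for the Dataset module: load, preprocess, save."""

    def __init__(self, items, output_dir, input_dim=8):
        self.items = items                  # maps item id -> raw text
        self.output_dir = Path(output_dir)  # where the .npy files are written
        self.input_dim = input_dim

    def load_and_preprocess(self):
        # Toy preprocessing: lowercase, truncate, and pad to a fixed length.
        for item_id, text in self.items.items():
            codes = [ord(c) for c in text.lower()[: self.input_dim]]
            codes += [0] * (self.input_dim - len(codes))
            yield item_id, np.asarray(codes, dtype=np.float32)

    def save(self, item_id, features):
        # Features are stored one file per item, in numpy format.
        self.output_dir.mkdir(parents=True, exist_ok=True)
        path = self.output_dir / f"{item_id}.npy"
        np.save(path, features)
        return path


class Extractor:
    """Hypothetical stand-in for the Extractor module (toy linear 'model')."""

    def __init__(self, input_dim=8, output_dim=4, seed=0):
        rng = np.random.default_rng(seed)
        self.weights = rng.standard_normal((input_dim, output_dim)).astype(np.float32)

    def extract(self, sample):
        # Project the preprocessed sample onto the output layer.
        return sample @ self.weights


class Runner:
    """Hypothetical stand-in for the Runner module: orchestrates the steps."""

    def __init__(self, dataset, extractor):
        self.dataset = dataset
        self.extractor = extractor

    def run(self):
        saved = []
        for item_id, sample in self.dataset.load_and_preprocess():
            features = self.extractor.extract(sample)
            saved.append(self.dataset.save(item_id, features))
        return saved
```

A run over two toy items would be `Runner(Dataset({"item_1": "red shoes", "item_2": "hat"}, "outputs"), Extractor()).run()`, which returns the paths of the saved files. In the real framework the Extractor wraps pretrained models from the supported backends rather than a random projection.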


4 DUCHO AS DOCKER APPLICATION

To fully exploit the GPU speedup implemented in all the backends we use for multimodal feature extraction, a basic requirement is to set up a suitable development environment in which the backends’ versions are compatible with CUDA and, optionally, cuDNN. In general, setting up a workstation where all such libraries and tools are correctly aligned is challenging. To this end, we dockerize Ducho by packaging it as a Docker image (available on Docker Hub[3]) with all packages already installed in a tested and safe virtualization environment on your physical machine.

Our Docker image is built from an NVIDIA-based image that comes with CUDA 11.8 and cuDNN 8 on Ubuntu 22.04, Python 3.8 and Pip, and our cloned repository with all Python packages already installed and ready to be used. A container instantiated from the image should specify the GPUs to use from the host machine (this feature is currently available in Docker, but it depends on the version of CUDA to be installed) and the volume you may want to use to save the framework’s outputs permanently.

Note that a generic container instantiated from our image drops the user into a shell environment where one can run custom multimodal feature extractions via the command line and also create custom configuration files for the same purpose.
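As an illustration of the container options described above, the sketch below assembles a `docker run` invocation that exposes the host GPUs, mounts a volume for persistent outputs, and opens an interactive shell. The flags `--gpus`, `-v`, and `-it` are standard Docker CLI options; the image name `<ducho-image>`, the host directory, and the container path `/outputs` are placeholders assumed here, since the text does not state them. The helper only builds the command and does not launch Docker.

```python
import shlex


def build_docker_run_command(image, gpus="all", host_dir=None, container_dir="/outputs"):
    """Assemble a `docker run` invocation as an argument list.

    `image`, `host_dir`, and `container_dir` are placeholders for illustration;
    substitute the actual image name from Docker Hub and your own paths.
    """
    command = ["docker", "run", "-it"]  # -it: interactive shell in the container
    if gpus:
        command += ["--gpus", gpus]     # which host GPUs the container may use
    if host_dir:
        # Bind-mount a host directory so extracted features outlive the container.
        command += ["-v", f"{host_dir}:{container_dir}"]
    command.append(image)
    return command


if __name__ == "__main__":
    cmd = build_docker_run_command("<ducho-image>", host_dir="/home/user/ducho_outputs")
    # Print a shell-escaped version that can be pasted into a terminal.
    print(shlex.join(cmd))
```

Keeping the command as a list makes it safe to pass to `subprocess.run` without shell quoting issues, should one want to launch the container from a script.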


:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::
