and the distribution of digital products.

DM Television

Decentralized AI Platform Nosana Readies for Mainnet

- Nosana’s core offering is a decentralized GPU-based compute grid, recognizing the growing demand for AI computing.

- Nosana leverages underutilized consumer-grade GPUs to offer on-demand AI inference services at up to 2.5X lower cost than traditional cloud providers.

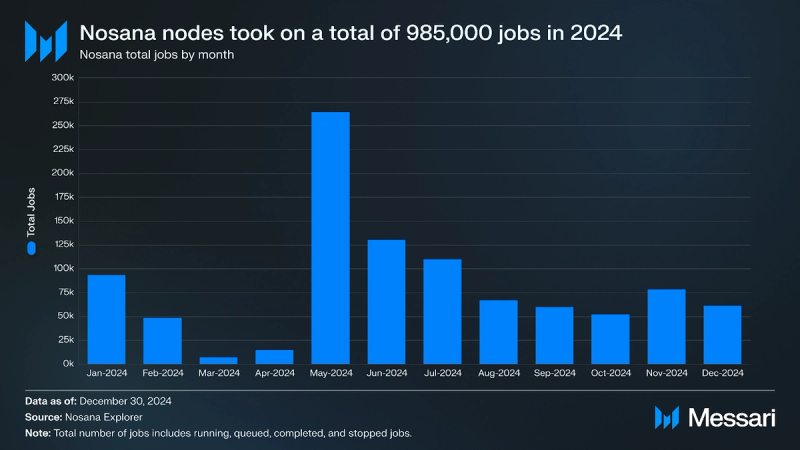

- In 2024, Nosana nodes took on 985,000 jobs (a total that includes running, queued, completed, and stopped jobs), with a significant spike in job requests occurring in May following the onboarding of 1,000 new nodes.

- 29.7 million NOS (31% of the circulating token supply) are staked on Nosana. At the time of writing, the value of the staked tokens amounted to $92.4 million.

- Nosana’s mainnet is scheduled to launch in Q1 2025, with its GPU compute grid in full production.

Nosana is a decentralized physical infrastructure network (DePIN) built on Solana. The project's core offering is a decentralized GPU-based compute grid that allows individuals and organizations to rent out their idle GPU power for AI inference tasks. This approach addresses the growing demand for computational resources in the AI industry and allows GPU owners to earn passive income. By focusing on AI inference, Nosana aims to create a more accessible and cost-effective solution than traditional cloud providers.

The project's native token, NOS, plays a central role in the ecosystem, facilitating transactions, enabling staking for network participation, and, eventually, governance. Nosana's strategic pivot from CI/CD services (an agile practice automating code integration, testing, and deployment for developers) to AI inference, coupled with its focus on leveraging underutilized consumer-grade GPUs, positions it uniquely within the rapidly evolving landscape of decentralized computing and artificial intelligence. With its mainnet launch scheduled for January 14, 2025, Nosana aims to unlock accessibility and affordability for AI computing resources.

Nosana's Strategic Pivot to AI Inference

At its launch in 2021, Nosana initially focused on providing decentralized automation services for software developers through CI/CD services. The project aimed to create a decentralized marketplace where developers could access compute resources from a distributed network of hardware providers. However, after a year and a half of development, the team encountered challenges with gaining traction. As noted in their blog post, most developers hesitated to migrate to new tools, even if they offered decentralized alternatives.

This realization coincided with rapid innovation and growth in AI following the release of ChatGPT in late 2022, when demand surged for the computational resources required to run AI models. In Q4 2023, Nosana pivoted towards building a decentralized GPU-powered compute grid specifically for AI inference.

Several factors drove this pivot. First, the team recognized that the demand for AI inference was rapidly outpacing the supply of available GPUs, pushing prices out of reach for many users. Second, they saw that many consumer-grade GPUs were underutilized, representing a vast untapped resource that could be harnessed to meet this demand. Third, they believed their decentralized approach could offer a more cost-effective and scalable solution than traditional cloud providers.

By focusing on AI inference, Nosana aims to create a marketplace where anyone with a compatible GPU can contribute their idle compute power and earn rewards, while AI developers and researchers can access the resources they need at a fraction of the cost. Nosana's research shows that renting consumer GPUs like the RTX 4090 can deliver inferences at a 2.5X lower price than the industry-standard A100 GPU while maintaining the same ROI.

A Deep Dive into AI Inference on Nosana

The primary goal of Nosana’s decentralized compute grid is to deliver more inferences per dollar than centralized cloud providers. Inference refers to using a trained AI model to make predictions or generate outputs based on new input data. For example, when asking ChatGPT to summarize an article, OpenAI's model processes the request on servers in a data center, scaling resources as needed. The process ends once the summary is returned, completing the inference workflow. By focusing on inference, Nosana can optimize its network for the specific requirements of this type of workload, such as low latency and high throughput.

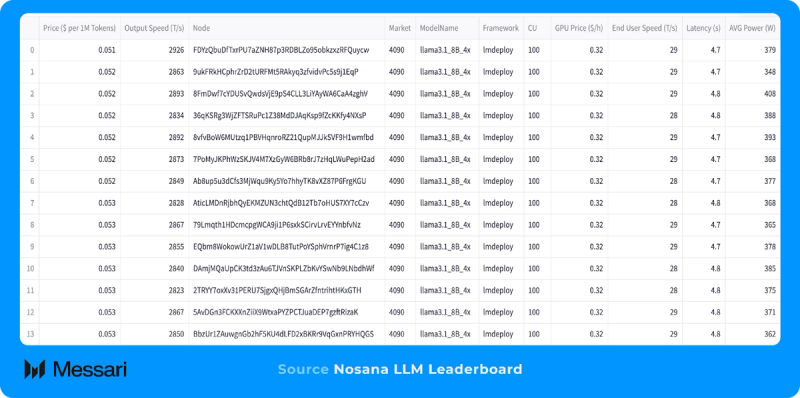

Nosana consistently operates around 300 nodes running jobs across various GPUs and AI models. The RTX 4090, a popular consumer-grade GPU with 24GB of VRAM, can be rented for $0.32 per hour to run a Llama 3.1 8B model. Typically, 8-32GB of VRAM is sufficient for small to medium-sized inference tasks. A few RTX 4090s can match the compute power of an industrial-grade A100 GPU, which is commonly used for larger inference tasks and costs over $1.29 per hour to rent.
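The price comparison above can be worked through with a few lines of Python. This is only a sketch: the two hourly prices come from the text (the A100 figure is a lower bound, since it "costs over $1.29"), while the function names and the throughput value are hypothetical and chosen purely to show how an inferences-per-dollar calculation would be set up.

```python
# Hourly rental prices quoted in the text (USD per GPU-hour).
RTX_4090_USD_PER_HOUR = 0.32
A100_USD_PER_HOUR = 1.29  # the text says "over $1.29", so treat as a floor


def price_ratio(expensive: float, cheap: float) -> float:
    """How many hours of the cheaper GPU one hour of the pricier GPU buys."""
    return expensive / cheap


def cost_per_1k_inferences(usd_per_hour: float, inferences_per_hour: float) -> float:
    """Cost in USD of 1,000 inferences at a given hourly price and throughput."""
    if inferences_per_hour <= 0:
        raise ValueError("inferences_per_hour must be positive")
    return usd_per_hour / inferences_per_hour * 1000


if __name__ == "__main__":
    ratio = price_ratio(A100_USD_PER_HOUR, RTX_4090_USD_PER_HOUR)
    print(f"One A100-hour costs as much as {ratio:.2f} RTX 4090-hours")

    # HYPOTHETICAL throughput, not a measured figure from the article.
    assumed_inferences_per_hour = 10_000
    cost = cost_per_1k_inferences(RTX_4090_USD_PER_HOUR, assumed_inferences_per_hour)
    print(f"RTX 4090: ${cost:.3f} per 1,000 inferences (assumed throughput)")
```

At the quoted prices, one A100-hour buys roughly four RTX 4090-hours, so whether consumer GPUs win on cost per inference depends on how much throughput each card actually delivers for a given model.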

Nosana nodes, i.e., software connecting GPUs to Nosana’s decentralized compute grid, are designed to be highly accessible. The hardware requirements to become a node operator (recently rebranded to “hosts”) include running any of the 19 supported NVIDIA RTX models, at least 4GB of RAM, and an internet connection. By focusing on consumer-grade hardware, Nosana enables individuals with gaming PCs or other devices with idle GPUs to join the network and earn rewards. Nosana categorizes nodes by hardware into markets tailored for specific AI algorithms or models. A queuing system within each market ensures fair and efficient resource allocation, optimizes workloads, and provides a customized experience for GPU providers and consumers.
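One way to picture a per-market queue is the sketch below. It is an illustrative model only and not Nosana's implementation: the `Market` class, its method names, and the first-in-first-out matching rule are all assumptions made for this example.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class Market:
    """One market per hardware class (hypothetical model, not Nosana's code)."""

    name: str
    idle_nodes: deque = field(default_factory=deque)    # nodes waiting for work
    pending_jobs: deque = field(default_factory=deque)  # jobs waiting for a node

    def node_joins(self, node_id: str) -> Optional[Tuple[str, str]]:
        """A node enters the market and takes the oldest pending job, if any."""
        if self.pending_jobs:
            return (self.pending_jobs.popleft(), node_id)
        self.idle_nodes.append(node_id)
        return None

    def job_posted(self, job_id: str) -> Optional[Tuple[str, str]]:
        """A job enters the market and goes to the longest-waiting idle node."""
        if self.idle_nodes:
            return (job_id, self.idle_nodes.popleft())
        self.pending_jobs.append(job_id)
        return None


if __name__ == "__main__":
    market = Market("rtx-4090")  # hypothetical market name
    market.node_joins("node-a")
    market.node_joins("node-b")
    print(market.job_posted("job-1"))  # matched with the node that waited longest
    print(market.job_posted("job-2"))
    print(market.job_posted("job-3"))  # no idle node left, so the job waits
    print(market.node_joins("node-c"))  # the new node picks up the waiting job
```

In this model at most one of the two queues is non-empty at any time, which keeps matching fair in arrival order on whichever side is waiting.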

The emphasis on smaller, open-source models, such as LLaMA and Stable Diffusion, offers more transparency, collaborative opportunity, and affordable rates, albeit sometimes at the cost of performance. Closed models held a 24% performance advantage over open ones, according to the Stanford Institute for Human-Centered Artificial Intelligence (HAI). However, on a compute grid like Nosana’s that is designed for small to medium-sized inferences, smaller, open-source models may offer quicker inference times at a reasonable performance level. This strategy optimizes for price and efficiency.

In 2024, Nosana nodes took on 985,000 jobs. Total jobs include running, queued, completed, and stopped jobs. Jobs may not be completed for various reasons, including a job expiring, node downtime, or nodes declining a job while it is running.

In April, Nosana launched Phase 2 of its Test Grid, adding over 1,000 new nodes to the network. The node growth coincided with a 1,667% increase in job requests the following month and a notable increase in requests each month for the remainder of 2024. Phase 3 began in September and introduced node slashing, a new pricing system to address crypto volatility, and enhancements to tools like the explorer, node leaderboard, and Nosana’s CLI.

Partnerships and Ecosystem Growth

Nosana has established partnerships to expand its decentralized GPU network across various domains, including AI development, cloud infrastructure, and decentralized applications:

- Matrix One: Utilizes Nosana’s GPU marketplace to support AI-powered avatar creation.

- PiKNiK: Provides enterprise-grade cloud infrastructure, including Nvidia A5000 setups, to enhance Nosana's GPU marketplace.

- Sogni.AI: Leverages Nosana’s GPU network for computationally intensive tasks like Stable Diffusion model processing.

- Arbius: Uses Nosana's GPU marketplace for cost-effective validation of machine learning infrastructure.

- Theoriq: Employs Nosana’s GPU marketplace to test and optimize AI agents with advanced model setups.

- Render Network: Integrated with Nosana in January following an approved proposal, enabling Render nodes to execute Nosana jobs and Nosana to post jobs on the Render Network.

- OCADA: Uses Nosana’s GPU marketplace to support their AI agents, reducing costs, improving performance, bypassing restrictions, and enabling greater operational flexibility.

- Alpha Neural AI: Leverages Nosana’s GPU grid to deploy, train, and host AI models.

- HDSP Research Group: Uses Nosana’s GPU resources to accelerate AI and ML academic research.

Nosana’s partnerships demonstrate the versatility of its GPU network in handling diverse computational tasks, ranging from Stable Diffusion workloads to AI-powered avatars. Collaborating with partners like PiKNiK and the Render Network could reinforce GPU supply and node availability as Nosana scales its compute market.

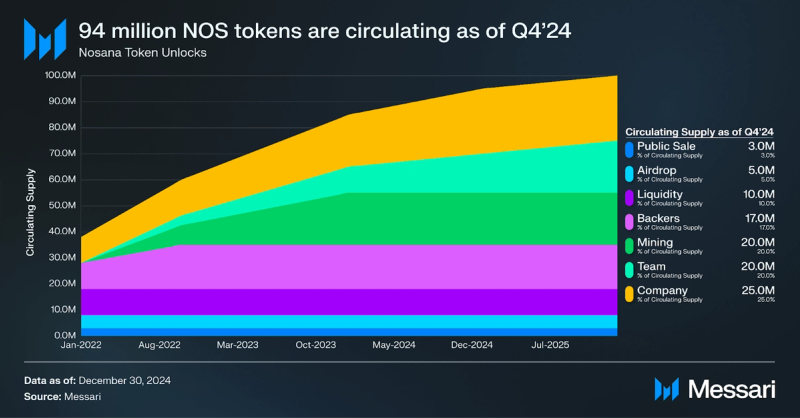

Tokenomics and the NOS Token

NOS, an SPL token with a maximum token supply of 100 million, is at the heart of the Nosana network. The token settles network transaction fees, pays for inference tasks, and supports network security through staking. Once the mainnet launches in Q1 2025, NOS token holders will have governance rights to vote on proposals and influence the project's future direction.

Node operators must stake NOS as collateral. This staking mechanism incentivizes honest behavior, as malicious actors risk losing their staked tokens through slashing. As of January 2, 2025, 29.7 million NOS, worth $92.4 million, had been staked by over 14,100 wallets. That amounts to 31% of the circulating supply.
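The staking figures above imply a token price and a circulating supply that the text does not state directly. The short calculation below derives them as a sanity check. The inputs are the rounded figures from the article, so the outputs are approximations, and the variable names are only illustrative.

```python
# Figures quoted in the text (as of January 2, 2025).
STAKED_NOS = 29_700_000
STAKED_VALUE_USD = 92_400_000
STAKED_SHARE_OF_CIRCULATING = 0.31  # rounded in the source
MAX_SUPPLY_NOS = 100_000_000

# Price implied by the staked amount and its dollar value.
implied_price_usd = STAKED_VALUE_USD / STAKED_NOS

# Circulating supply implied by "31% of the circulating supply is staked".
implied_circulating_nos = STAKED_NOS / STAKED_SHARE_OF_CIRCULATING

# Staked tokens as a share of the maximum supply.
staked_share_of_max = STAKED_NOS / MAX_SUPPLY_NOS

if __name__ == "__main__":
    print(f"Implied NOS price: ${implied_price_usd:.2f}")
    print(f"Implied circulating supply: {implied_circulating_nos / 1e6:.1f}M NOS")
    print(f"Staked share of max supply: {staked_share_of_max:.1%}")
```

With these inputs the implied price is about $3.11 per NOS and the implied circulating supply is about 95.8 million NOS, which is consistent with the stated 100 million maximum.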

The initial distribution of NOS, which took place on Jan. 17, 2022, allocated tokens to various stakeholders, including the team, company, backers, and the community. Notably, 62% of tokens were allocated to insiders (i.e., the team, company, and backers), subject to various vesting schedules. The team's allocation vests linearly over 48 months, while the company's allocation vests over 36 months. Backers finished vesting nine months after the token’s launch. These vesting schedules align the incentives of the team and early backers with the project's long-term success.
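As a rough illustration of the vesting schedules described above, the sketch below computes the unlocked fraction of an allocation under straight-line vesting. It assumes no cliff and monthly-proportional unlocking. The text only states that the team's allocation vests linearly, so treating the company's 36-month schedule the same way is an assumption, and `vested_fraction` is a hypothetical helper.

```python
TEAM_VESTING_MONTHS = 48
COMPANY_VESTING_MONTHS = 36  # linear unlocking is ASSUMED for this schedule


def vested_fraction(months_elapsed: float, vesting_months: int) -> float:
    """Fraction unlocked under straight-line vesting with no cliff (assumption)."""
    if vesting_months <= 0:
        raise ValueError("vesting_months must be positive")
    # Clamp to [0, 1]: nothing vests before launch, nothing beyond 100% after.
    return min(max(months_elapsed / vesting_months, 0.0), 1.0)


if __name__ == "__main__":
    for months in (12, 24, 36, 48):
        team = vested_fraction(months, TEAM_VESTING_MONTHS)
        company = vested_fraction(months, COMPANY_VESTING_MONTHS)
        print(f"month {months}: team {team:.0%}, company {company:.0%}")
```

Under these assumptions, the company allocation is fully unlocked at month 36 while a quarter of the team allocation is still locked, which is the mechanism behind the claim that vesting keeps insiders tied to the project's longer-term outcome.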

Staking is a crucial component of the Nosana network, as it incentivizes node operators to provide reliable and high-quality compute resources. By staking NOS, node operators signal their commitment to the network and become eligible to receive rewards for their contributions.

The team has implemented a dynamic staking rewards system to ensure the network's long-term sustainability. Initially, the staking program offered a relatively high APY to attract early adopters and bootstrap the network. However, the APY will gradually reduce as the network matures and more nodes join. This adjustment is necessary to prevent excessive inflation and maintain a healthy balance between supply and demand for the NOS token.

The team plans to introduce transaction-based rewards in addition to staking rewards. As the network grows and more AI inference jobs are processed, some transaction fees will be distributed to stakers. This mechanism links network usage and staker rewards, incentivizing participants to contribute to the platform's growth and adoption.

Closing Summary

Nosana's shift toward AI inference, powered by a decentralized network of consumer-grade GPUs, positions it as a potential contender in the growing market for AI compute. Nosana’s partnerships showcase its GPU network's versatility and ability to handle various job requests. The upcoming mainnet launch in January 2025 will demonstrate the full capabilities of the network's compute grid.

The decentralized compute market presents high barriers to entry and competition from players like Akash, io.net, Aethir, and Render, so Nosana's success will depend on its ability to capture underserved niche markets. These include specific developer ecosystems and small-to-medium-sized inference tasks that need cost-effective solutions.

Furthermore, by exploring decentralized inference, Nosana could address a critical pain point in the AI pipeline, namely trust and verification, and offer enhanced transparency and reliability to its users. By focusing on such neglected areas, Nosana has the potential to carve out a unique position in the compute market and contribute meaningfully to the evolving AI ecosystem.


All Rights Reserved. Copyright 2025, Central Coast Communications, Inc.