
Automated Essay Scoring Using Large Language Models

:::info Authors:

- Junaid Syed, Georgia Institute of Technology

- Sai Shanbhag, Georgia Institute of Technology

- Vamsi Krishna Chakravarthy, Georgia Institute of Technology

:::

Automated Essay Scoring (AES) is a classic NLP task that has been studied for many decades. AES has considerable practical relevance and economic potential: it is a cornerstone of large competitive exams (e.g., SAT, GRE) and of the booming online learning market. Several philanthropic and non-profit organizations, such as the Bill & Melinda Gates Foundation and the Chan Zuckerberg Initiative, have funded multiple Kaggle competitions on AES [6, 7, 8]. Despite these efforts, the problem is far from solved because of fundamental difficulties with essay scoring. Evaluating an essay is highly subjective and involves abstract factors such as cohesion, grammar, and relevance that are hard to compute. As a result, obtaining training labels with a granular rating of an essay across features such as grammar and coherence is quite expensive. Consequently, the training data sets are quite limited in comparison to those for other NLP tasks such as (masked) language modeling, NER, POS tagging, and machine translation. Furthermore, a single overall score gives the student little to no feedback and does not help them progress. Therefore, current efforts focus on evaluating the essay on granular aspects rather than with a single score. This also helps avoid over-fitting because the prediction model now has to perform well on all the metrics and not just one; essentially, one can think of this as a multi-task model. In the current study, we focus on six metrics: cohesion, syntax, vocabulary, phraseology, grammar, and conventions.

1.1 Literature Survey

Prior to the 2010s, most AES models relied on hand-crafted features designed by computational linguists [10, 4]. However, these models were typically biased toward certain features (e.g., essay length) and could not generalize across topics and metrics. The bias towards hand-crafted features was addressed by replacing them with word embeddings learned by language models such as Word2Vec and GloVe. Based on these word embeddings, essay scores were predicted as regression and classification tasks by adding a neural network downstream of the word embeddings. Using embeddings trained on a large corpus yielded a significant improvement in essay scoring for all metrics as well as the overall score [11]. However, the very word embeddings that were crucial to these performance improvements proved to be the biggest limitation of the model. As the embeddings essentially came from the Bag-of-Words approach, they could not capture the contextual information that was partially captured by the hand-crafted linguistic features in the previous models. Instead of adding the hand-crafted features back and potentially re-introducing the previous models' deficiencies, the lack of contextual information was addressed via the attention mechanism using LSTM [13] and transformer architectures. Vaswani et al. [14] introduced the transformer architecture, on which Devlin et al. [2] built the BERT model. Buoyed by the success of the BERT model and transformer architectures, a flurry of attention-based language models were developed. Now, instead of word embeddings, one could obtain a sentence- or document-level embedding that captures the contextual information. Using these deep embeddings, neural network models are developed to predict the essay scores (both as classification and regression tasks).

1.2 Limitations of Current Approaches

Despite this progress, severe limitations exist with using the BERT model. Lottridge et al. (2021) [10] demonstrated the model's lack of robustness to gamed essays, randomly shuffled text, and Babel-generated essays. Performance also varies drastically across classes and metrics. To address this drawback, in this investigation we model all metrics simultaneously through multi-task learning. Another key limitation of BERT-based analysis is that the input length is limited to 512 tokens in the BERT model. We seek to address this by using more advanced architectures such as Longformer, which allows up to 4096 tokens per document. For the data set considered in this study (details in Section 2.1), more than 40% of the documents are over 512 tokens in length. Therefore, truncating each document to only 512 tokens with the standard BERT model would result in a substantial loss of context. The third key limitation of various studies is the limited dataset: though multiple studies have focused on AES, each of their datasets is scored differently, and consequently the models cannot be readily trained on all data sets. Therefore, in this study, we investigate the utility of autoencoder networks to train across datasets and use the autoencoder-derived encodings to perform AES tasks.

In summary, this study investigates the effect of various deep learning-based document encodings on automated essay scoring. The data set, methodology, experiments, and the deep embeddings considered in this study are introduced in Section 2. Besides varying the deep embeddings, we analyze ways to combine various AES datasets by training the deep encodings across an autoencoder network. The results from all these approaches are presented in Section 3, and the conclusions as well as directions for further investigation are given in Section 4.

2. Methodology

2.1 Data

The Learning Agency Lab, Georgia State University, and Vanderbilt University have collected a large number of essays from state and national education agencies, as well as non-profit organizations. From this collection, they have developed the Persuasive Essays for Rating, Selecting, and Understanding Argumentative and Discourse Elements (PERSUADE) corpus, consisting of argumentative essays written by students in grades 6-12, and the English Language Learner Insight, Proficiency and Skills Evaluation (ELLIPSE) corpus, consisting of essays written by English Language Learners (ELLs) in grades 8-12.

ELLIPSE corpus: The ELLIPSE corpus contains over 7,000 essays written by ELLs in grades 8-12. These essays were written as part of state standardized writing assessments from the 2018-19 and 2019-20 school years. Essays in the ELLIPSE corpus were annotated by human raters for language proficiency levels using a five-point scoring rubric that comprised both holistic and analytic scales. The holistic scale focused on the overall language proficiency level exhibited in the essays, whereas the analytic scales included ratings of cohesion, syntax, phraseology, vocabulary, grammar, and conventions. The score for each analytic measure ranges from 1.0 to 5.0 in increments of 0.5, with greater scores corresponding to greater proficiency in that measure.

PERSUADE corpus: The PERSUADE corpus contains over 25,000 argumentative essays written by U.S. students in grades 6-12. These essays were written as part of national and state standardized writing assessments from 2010-2020. Each essay in the PERSUADE corpus was annotated by human raters for argumentative and discourse elements as well as hierarchical relationships between argumentative elements. The annotation rubric was developed to identify and evaluate discourse elements commonly found in argumentative writing.

For this project, we use the ELLIPSE corpus and simultaneously predict the scores for the six analytic measures: cohesion, syntax, vocabulary, phraseology, grammar, and conventions. Additionally, we attempt to improve our prediction accuracy with an autoencoder. The idea is to train an autoencoder on the ELLIPSE and PERSUADE corpora. Through this process, the compressed feature vector from the autoencoder might capture features of essays that are essential to scoring but that pre-trained language model features might miss.

2.2 Approach

As stated earlier, the goal of this project is to predict the scores of six analytic measures (cohesion, syntax, vocabulary, phraseology, grammar, and conventions) on argumentative essays written by 8th-12th grade English language learners. For this task, we first develop a baseline and then use multiple pre-trained models to improve upon the baseline.

Baseline: The baseline is developed using GloVe embeddings and a bidirectional LSTM network. For the baseline model, we first clean up the data (punctuation removal, white space removal, etc.) using the regex library and then use the word tokenizer from NLTK to tokenize the essays. An LSTM network is trained on the GloVe encodings of the essays to output a vector of length 6 representing the score for each of the six analytic measures above. We use the mean squared error loss (MSELoss) to train the neural network.

DistilBERT: DistilBERT is a small, fast, and light Transformer model trained by distilling BERT base. It has 40% fewer parameters than bert-base-uncased and runs 60% faster while preserving over 95% of BERT's performance as measured on the GLUE language understanding benchmark. BERT uses self-attention to capture the contextual information from the entire sequence [2]. This improves the model's ability to evaluate the essay samples and provide a more accurate score. For this model, we use an auto-tokenizer to tokenize the essays and then pass these tokens to the pre-trained DistilBERT model to get the vector representation of the essays. We then train a two-layer neural network using MSELoss to return a 6-dimensional output vector representing the scores for each of the six writing attributes described above.
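The encode-then-regress pipeline described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the text does not say how token vectors are pooled into a single essay vector, so masked mean pooling is assumed here, and the hidden size of 256 and the names `mean_pool` and `RegressionHead` are hypothetical. Random tensors stand in for the encoder output so the block runs without downloading a model.

```python
import torch
from torch import nn


def mean_pool(token_embeddings, attention_mask):
    """Average token vectors per essay, ignoring padded positions (assumed pooling)."""
    mask = attention_mask.unsqueeze(-1).to(token_embeddings.dtype)
    summed = (token_embeddings * mask).sum(dim=1)
    counts = mask.sum(dim=1).clamp(min=1.0)
    return summed / counts


class RegressionHead(nn.Module):
    """Two-layer network mapping an essay encoding to six scores."""

    def __init__(self, encoding_dim=768, hidden_dim=256, num_measures=6):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(encoding_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, num_measures),
        )

    def forward(self, essay_encoding):
        return self.net(essay_encoding)


torch.manual_seed(0)

# Stand-in for the encoder's last hidden state: 4 essays, 10 tokens, 768 dims.
token_embeddings = torch.randn(4, 10, 768)
attention_mask = torch.ones(4, 10, dtype=torch.long)
attention_mask[:, 7:] = 0  # pretend the last three positions are padding

pooled = mean_pool(token_embeddings, attention_mask)
head = RegressionHead()
predictions = head(pooled)

# Placeholder targets; real targets would be the six ELLIPSE scores per essay.
targets = torch.full((4, 6), 3.0)
loss = nn.MSELoss()(predictions, targets)
```

In a real run, `token_embeddings` and `attention_mask` would come from the tokenizer and the pre-trained encoder, and the head would be trained by minimizing `loss`.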

T5: T5, or Text-To-Text Transfer Transformer, is an encoder-decoder model pre-trained on a multi-task mixture of unsupervised and supervised tasks, each of which is converted into a text-to-text format. With BERT, which is pre-trained on a Masked LM and Next Sentence Prediction objective, we need to separately fine-tune different instances of the pre-trained model on different downstream tasks such as sequence classification. T5's text-to-text framework provides a simple way to train a single model on a wide variety of text tasks using the same loss function and decoding procedure. This pre-training framework provides the model with general-purpose “knowledge” that improves its performance on downstream tasks [12]. We used an auto-tokenizer to tokenize the essays and then passed these tokens to the pre-trained T5-Base model to get the vector representation of the essays. We then train a two-layer neural network using MSELoss to return the 6-dimensional output vector (similar to DistilBERT).

RoBERTa-base: RoBERTa is another BERT-like masked language model, developed by Facebook. RoBERTa uses dynamic masking throughout training for all epochs, whereas in BERT the mask is static. As a result, the model is exposed to many more masked-token patterns than BERT. Further performance improvement is achieved by training on a much larger corpus than BERT (10x) and with a larger vocabulary. Through these changes in training, RoBERTa outperforms BERT on most GLUE and SQuAD tasks [9].

Longformer: Longformer is a BERT-like transformer model that was initialized from a RoBERTa checkpoint and trained as a Masked Language Model (MLM) on long documents. It supports sequences of up to 4,096 tokens. Typically, transformer-based models that use a self-attention mechanism are unable to process long sequences because the memory and computational requirements grow quadratically with the sequence length, which makes it infeasible to process long sequences efficiently. Longformer addresses this key limitation by introducing an attention mechanism that scales linearly with sequence length [1]. It uses sliding-window and dilated sliding-window attention to capture the local and global context. For the Longformer model, we follow a similar approach as for DistilBERT. We use an auto-tokenizer to tokenize the essays and then pass these tokens to the pre-trained Longformer model to get the vector representation of the essays. We then train a two-layer neural network using MSELoss to return the 6-dimensional output vector (similar to DistilBERT).
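To make the scaling argument concrete, the sketch below counts how many query-key pairs are scored under full self-attention versus a plain sliding window. It is a simplified illustration: the helper names are hypothetical, the window of 512 tokens is an assumed setting, and the dilated and global attention patterns are ignored.

```python
def full_attention_pairs(seq_len):
    """Every token attends to every token: quadratic in sequence length."""
    return seq_len * seq_len


def sliding_window_pairs(seq_len, window):
    """Each token attends to itself and up to window // 2 neighbours per side."""
    half = window // 2
    total = 0
    for i in range(seq_len):
        left = max(0, i - half)
        right = min(seq_len - 1, i + half)
        total += right - left + 1
    return total


# Compare the two patterns at BERT's limit and at Longformer's limit.
for seq_len in (512, 4096):
    full = full_attention_pairs(seq_len)
    local = sliding_window_pairs(seq_len, window=512)
    print(f"{seq_len} tokens: full={full}, sliding window={local}")
```

With a fixed window, the sliding-window count grows roughly in proportion to the sequence length, whereas the full-attention count grows with its square.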

We also used gradient accumulation to train our models with a larger effective batch size than our Colab runtime GPU could fit in memory. Due to the large size of the Longformer model, we were limited to a batch size of only two. Such a small batch size would result in unstable gradient computations. We circumvent this with gradient accumulation: instead of updating the weights after every iteration, we accumulate the gradients over a certain number of batches and only then apply the update, which improves the stability of gradient updates [3].
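A minimal PyTorch sketch of gradient accumulation is shown below. It is an illustration, not the authors' training loop: the tiny model, the SGD optimizer, the learning rate, and the choice of four accumulation steps are all assumptions, and random tensors stand in for essay encodings and scores.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-ins for real data: 16 essay encodings (768-d) and six scores in [1, 5].
features = torch.randn(16, 768)
targets = torch.rand(16, 6) * 4 + 1

model = nn.Sequential(nn.Linear(768, 64), nn.ReLU(), nn.Linear(64, 6))
optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)
loss_fn = nn.MSELoss()

micro_batch_size = 2    # what fits in GPU memory
accumulation_steps = 4  # effective batch size = 2 * 4 = 8

optimizer.zero_grad()
num_updates = 0
starts = range(0, len(features), micro_batch_size)
for step, start in enumerate(starts, start=1):
    x = features[start:start + micro_batch_size]
    y = targets[start:start + micro_batch_size]
    # Divide by the number of accumulation steps so that the summed gradients
    # match the gradient of the mean loss over the effective batch.
    loss = loss_fn(model(x), y) / accumulation_steps
    loss.backward()  # gradients are added to .grad across micro-batches
    if step % accumulation_steps == 0:
        optimizer.step()       # one weight update per effective batch
        optimizer.zero_grad()  # reset before the next accumulation cycle
        num_updates += 1
```

Only one micro-batch of activations is held in memory at a time, so peak memory is governed by the micro-batch size while the update behaves like one computed on the larger batch.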

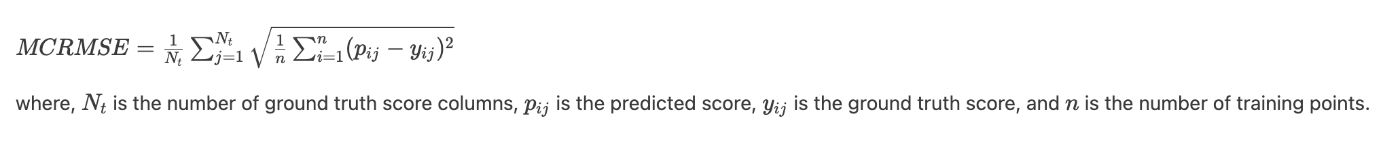

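2.3 Evaluation

To evaluate the accuracy of our model's predicted scores, we use the mean column-wise root mean squared error (MCRMSE) as the metric. The metric is computed as:

$$\text{MCRMSE} = \frac{1}{N_t} \sum_{j=1}^{N_t} \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left(y_{ij} - \hat{y}_{ij}\right)^2}$$

where $N_t$ is the number of scored measures (six here), $n$ is the number of essays, and $y_{ij}$ and $\hat{y}_{ij}$ are the actual and predicted scores of essay $i$ on measure $j$.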
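As a rough illustration, MCRMSE can be sketched in a few lines of Python: compute the RMSE of each score column separately, then average the column values. The function name `mcrmse` and the toy inputs are illustrative and not taken from the study.

```python
import math


def mcrmse(y_true, y_pred):
    """Mean column-wise RMSE for two equally shaped lists of score rows."""
    n_rows = len(y_true)
    n_cols = len(y_true[0])
    column_rmse = []
    for j in range(n_cols):
        squared_error = sum(
            (y_true[i][j] - y_pred[i][j]) ** 2 for i in range(n_rows)
        )
        column_rmse.append(math.sqrt(squared_error / n_rows))
    return sum(column_rmse) / n_cols


# Toy example with two essays and two measures (made-up numbers).
y_true = [[3.0, 4.0], [2.0, 3.0]]
y_pred = [[3.0, 3.0], [2.0, 3.0]]
score = mcrmse(y_true, y_pred)
```

Because each column's RMSE is taken before averaging, a large error on one measure cannot be hidden by very small errors on the others as easily as with a single pooled RMSE.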

2.4 Experiments

After implementing the models described above, we ran a few experiments to reduce the prediction error of these models. These experiments are detailed below:

- Output Quantization: In the ELLIPSE corpus, the score for each analytic measure ranges from 1.0 to 5.0 in increments of 0.5, with greater scores corresponding to greater proficiency in that measure. We modified our neural network so that its output is constrained between 1 and 5: the output passes through a sigmoid layer, and we then multiply it by 4 and add 1. Furthermore, once the results are generated by the neural network, we apply the operation score = int(2 * score + 0.5) / 2 so that the output moves in steps of 0.5 only. This operation aimed to replicate the format of the original scores and to check whether such a modification improves the accuracy.

- Weighted RMSE: In the ELLIPSE corpus, the score for each analytic measure ranges from 1.0 to 5.0 in increments of 0.5. However, the scores are not evenly distributed in the dataset. Certain scores such as 2.5, 3, and 3.5 occur more often for each of the analytic measures, whereas scores like 1 and 5 occur rarely throughout the dataset. To account for this imbalance, we used a weighted root mean square error (WRMSE) function in which the inverse of the frequency of a particular score serves as its weight, and we clip this weight if it is extremely high compared to the other weights.

- MultiHead Architecture: As mentioned in the previous item, the scores are not evenly distributed in the dataset, so we experimented with a measure-specific final two-layer neural network to predict the scores. Instead of a single output head that predicts 6 different score values, we implemented 6 different output heads, one to predict the score for each analytic measure.

- Autoencoder: The dataset provided for the current task of multi-output scoring of an essay contains only about 4k samples. However, the ELLIPSE and PERSUADE corpora together contain more than 180k essays for other AES tasks, such as single scores for entire essays and for parts of essays. Therefore, autoencoders are used to leverage this larger database and perform semi-supervised learning. Stated briefly, the encodings from language models such as BERT and T5 are passed through an autoencoder network trained on all 180k samples. Then, either the bottleneck layer encoding or the denoised language model encodings from the decoder part of the autoencoder are used to predict the multi-output scores using a 2-layer neural network for the regression head, similar to the fully supervised scenario. Thus, by leveraging the larger set of unlabelled data to train an autoencoder as a preprocessor, we seek to improve the supervised learning predictions. In this study, we considered the denoised encodings derived from DistilBERT encodings.
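The output constraint, the 0.5-step rounding, and the clipped inverse-frequency weighting from the first two items can be sketched in plain Python. This is an illustration under stated assumptions: the function names are hypothetical, the clipping threshold of 5.0 is arbitrary, the rounding is written as int(2 * score + 0.5) / 2 so that it yields multiples of 0.5, and normalizing the weighted error by the sum of weights is an assumed design choice.

```python
import math
from collections import Counter


def constrain(raw_output):
    """Squash an unbounded network output into the range 1 to 5."""
    # 0.5 * (1 + tanh(x / 2)) is a numerically stable form of the sigmoid.
    sigmoid = 0.5 * (1.0 + math.tanh(raw_output / 2.0))
    return 4.0 * sigmoid + 1.0


def quantize(score):
    """Round a positive score to the nearest multiple of 0.5."""
    return int(2 * score + 0.5) / 2


def inverse_frequency_weights(scores, max_weight):
    """Weight each score value by 1 / relative frequency, clipped at max_weight."""
    counts = Counter(scores)
    total = len(scores)
    return {value: min(total / count, max_weight) for value, count in counts.items()}


def weighted_rmse(y_true, y_pred, weights):
    """RMSE in which each squared error is scaled by the weight of its true score."""
    weighted_sq = sum(weights[t] * (t - p) ** 2 for t, p in zip(y_true, y_pred))
    weight_sum = sum(weights[t] for t in y_true)  # assumed normalization
    return math.sqrt(weighted_sq / weight_sum)


# Toy label distribution (made-up numbers): mid-range scores dominate.
labels = [3.0] * 6 + [2.5] * 3 + [1.0]
weights = inverse_frequency_weights(labels, max_weight=5.0)

prediction = quantize(constrain(0.3))
error = weighted_rmse([1.0, 3.0], [2.0, 3.0], weights)
```

In this toy setup the rare score 1.0 would receive a raw weight of 10, which the clip reduces to 5, so a miss on a rare score counts more than a miss on a common one without dominating the loss.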

3. Results

Effect of Pre-trained Encodings: Table 1 summarizes the performance metric obtained by varying the pre-trained models described in Section 2.2. In these runs, the encodings from the pre-trained models are passed directly through a 2-layer neural network trained using the MSE loss, and none of the potential improvements discussed in Section 2.4 are applied. As this is a multi-output regression, the performance of the models on each scoring metric is shown in Table 3.

Among the transformer architectures listed in Table 1, the masked language models DistilBERT, RoBERTa, and Longformer perform better than the generative model T5, possibly because the masked models are better tuned to discriminative tasks with numeric outputs. Further research is necessary to determine whether this generalizes to other generative language models. Overall, RoBERTa has the best prediction score among the various models, plausibly due to its much larger training corpus and superior masking.

Table 1: Overall MCRMSE score for various models

| Model | MCRMSE |
|----|----|
| Baseline | 1.36 |
| DistilBERT | 0.4934 |
| T5-base | 0.5320 |
| RoBERTa | 0.4746 |
| Longformer | 0.4899 |

Effect of improvements to regression head: Previously, we explored the effect of varying the inputs to the regression head (i.e., by varying the pre-trained models and the encodings therein) while holding the regression head training constant. In this section, we explore the effect of varying the training of the regression head while holding the encodings constant. Section 2.4 lists the various changes to regression training that are explored in this study. Note that throughout this section, the DistilBERT model is used since it is the fastest model and has the lowest GPU requirements. The results for the various training schemes and enhancements are shown in Table 2.

Table 2: MCRMSE score for various models

| Experiment | MCRMSE |
|----|----|
| Output Quantization | 0.5294 |
| Weighted RMSE | 0.5628 |
| MultiHead Architecture | 0.508 |
| Autoencoder Denoising | 0.575 |

Unfortunately, none of these variations to training the regression model results in a significant improvement in prediction accuracy compared to our original models. In fact, the performance metric on the validation set in Table 2 indicates a drop in performance with these modifications. It is not clear why this reduction occurs, and further study with a larger dataset is essential to verify that this reduction in performance is not an artifact.

For all variations in the text encoding and regression head training, the validation MCRMSE scores for individual measures show that cohesion and grammar are the hardest to predict across all models (see Table 3). This could be a limitation of the pre-trained language models used in AES rather than of our modeling. Kim et al. (2020) [5] show the limitations of current language models in being grammatically well-informed and provide directions for further progress in language models.

Table 3: MCRMSE score for the individual analytic measures

| Model (or Exp.) | Cohesion | Syntax | Vocabulary | Phraseology | Grammar | Conventions |
|----|----|----|----|----|----|----|
| Baseline | 1.37 | 1.35 | 1.32 | 1.34 | 1.44 | 1.36 |
| DistilBERT | 0.54 | 0.51 | 0.46 | 0.52 | 0.57 | 0.49 |
| T5-Base | 0.55 | 0.52 | 0.48 | 0.54 | 0.58 | 0.53 |
| RoBERTa | 0.51 | 0.47 | 0.42 | 0.47 | 0.51 | 0.46 |
| Longformer | 0.54 | 0.48 | 0.46 | 0.49 | 0.53 | 0.47 |
| DistilBERT + output quantization | 0.55 | 0.53 | 0.48 | 0.53 | 0.57 | 0.51 |
| DistilBERT + WRMSE | 0.56 | 0.56 | 0.55 | 0.56 | 0.61 | 0.53 |
| DistilBERT + Multi Head Arch. | 0.53 | 0.50 | 0.45 | 0.51 | 0.56 | 0.49 |
| Autoencoder + DistilBERT | 0.59 | 0.56 | 0.52 | 0.56 | 0.61 | 0.55 |

4. Conclusion

In this work, we investigated the effect of various pre-trained architectures and methods of training the regression head on the Automated Essay Scoring task, where we score each essay on a scale of 1 to 5 for six linguistic metrics (e.g., cohesion, grammar, vocabulary). The dataset is taken from the ELLIPSE corpus, specifically the subset of the data listed in Kaggle competitions. We considered five deep-learning architectures and five ways to train the regression head, and observed that using RoBERTa-base with a simple 2-layer feed-forward network to predict the scores as a multi-output vector gave the best result.

As expected, transformer architectures significantly outperformed the baseline model of GloVe+LSTM. Furthermore, within the transformer architectures, the masked language models (DistilBERT, RoBERTa, Longformer) give superior performance compared to the generative language model T5. Although this observation may not generalize to all generative models, the advantage of masked language models seems intuitively plausible, as they appear better suited to discriminative tasks with numeric outputs.

Another interesting observation of this study is that varying the training of the regression head by changing the loss function, constraining the outputs, and applying autoencoder-based dimensionality reduction/denoising, along with data augmentation, did not improve the model performance. This is rather unexpected, and we do not fully understand the reasons behind it. In a future study, these approaches may be repeated with a larger dataset to help determine whether these observations regarding training the regression head generalize.

In summary, we observe that using RoBERTa encodings with a 2-layer feed-forward neural network to predict the six scores simultaneously, similar to multi-task learning, provides the best performance. In particular, given the small size of the dataset, using a robust pre-trained model significantly improves the predictive performance of the model. Also, performance in evaluating the grammar of the essay is worse than for any other evaluation metric, and this appears to be inherent to the language model. Hence, future work should focus on improving language models to better capture the grammatical aspects of the language.

References

1. Iz Beltagy, Matthew E. Peters, and Arman Cohan. 2020. Longformer: The long-document transformer. arXiv preprint arXiv:2004.05150.
2. Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2018. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.
3. Joeri R. Hermans, Gerasimos Spanakis, and Rico Möckel. 2017. Accumulated gradient normalization. In Asian Conference on Machine Learning, pages 439–454. PMLR.
4. Zixuan Ke and Vincent Ng. 2019. Automated essay scoring: A survey of the state of the art. In IJCAI, vol. 19, pages 6300–6308.
5. Taeuk Kim, Jihun Choi, Daniel Edmiston, and Sang-goo Lee. 2020. Are pre-trained language models aware of phrases? Simple but strong baselines for grammar induction.
6. The Learning Agency Lab. 2022a. Feedback prize - English language learning.
7. The Learning Agency Lab. 2022b. Feedback prize - Evaluating student writing.
8. The Learning Agency Lab. 2022c. Feedback prize - Predicting effective arguments.
9. Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692.
10. Sue Lottridge, Ben Godek, Amir Jafari, and Milan Patel. 2021. Comparing the robustness of deep learning and classical automated scoring approaches to gaming strategies. Technical report, Cambium Assessment Inc.
11. Huyen Nguyen and Lucio Dery. 2016. Neural networks for automated essay grading. CS224d Stanford Reports, pages 1–11.
12. Adam Roberts and Colin Raffel. 2020. Exploring transfer learning with T5: The text-to-text transfer transformer. Google AI Blog.
13. Kaveh Taghipour and Hwee Tou Ng. 2016. A neural approach to automated essay scoring. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pages 1882–1891.
14. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in Neural Information Processing Systems, 30.
