Are we really testing 3D AI? Study reveals a major flaw in 3D benchmarks

Artificial intelligence is getting better at understanding the world in three dimensions, but a new study suggests that many 3D Large Language Model (LLM) benchmarks may not be testing 3D capabilities at all. Instead, these evaluations may be vulnerable to what the researchers call the “2D-Cheating” problem, in which models trained primarily on 2D images can match or outperform dedicated 3D models once the point clouds are simply rendered into images.

In their study, “Revisiting 3D LLM Benchmarks: Are We Really Testing 3D Capabilities?”, a team from Shanghai Jiao Tong University, including Jiahe Jin, Yanheng He, and Mingyan Yang, found that many tasks used to evaluate 3D AI can be solved just as well by Vision-Language Models (VLMs), AI systems trained primarily on 2D images and text. This raises a crucial question: are current benchmarks truly measuring 3D understanding, or are they just testing how well AI can process 2D renderings of 3D data?

2D-Cheating: The shortcut that exposes weak benchmarks

The problem stems from how 3D LLMs are evaluated. Many existing benchmarks rely on Q&A and captioning tasks, where an AI is asked to describe a 3D object or answer questions about it. The assumption is that a 3D-trained AI should perform better than one that only understands 2D images.

However, the researchers found that VLMs like GPT-4o and Qwen2-VL, which were not specifically designed for 3D tasks, could often outperform state-of-the-art 3D models—simply by looking at 2D images of point clouds. This suggests that these benchmarks aren’t actually testing whether AI understands 3D structures, but rather how well it can infer 3D information from flat images.

To demonstrate this, the team developed VLM3D, a method that renders 3D point clouds into images before feeding them into a VLM. When tested on major 3D AI benchmarks, including 3D MM-Vet, ObjaverseXL-LVIS, ScanQA, and SQA3D, VLM3D matched or outperformed leading 3D models on many tasks.
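VLM3D's renderer is not described in detail here. As a rough illustration only, the core idea of turning a point cloud into an image can be sketched as an orthographic projection with a depth buffer, where each pixel keeps the color of the point nearest the camera. The function name `render_orthographic`, the `(x, y, z, color)` point format, and the fixed viewing direction are assumptions made for this sketch, not details from the study.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical point format for this sketch: (x, y, z, color_id)
Point = Tuple[float, float, float, int]


def render_orthographic(points: Sequence[Point], size: int = 8) -> List[List[Optional[int]]]:
    """Render a point cloud to a size x size image, looking down the -z axis.

    Orthographic projection: x maps to columns, y maps to rows (row 0 is the top).
    A depth buffer keeps, for each pixel, the color of the point with the largest z,
    i.e. the point closest to the assumed camera. Empty pixels stay None.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, y_max = min(xs), max(ys)
    # Guard against flat clouds where every point shares the same x or y
    x_span = (max(xs) - x_min) or 1.0
    y_span = (y_max - min(ys)) or 1.0

    image: List[List[Optional[int]]] = [[None] * size for _ in range(size)]
    depth = [[float("-inf")] * size for _ in range(size)]
    for x, y, z, color in points:
        col = min(int((x - x_min) / x_span * size), size - 1)
        row = min(int((y_max - y) / y_span * size), size - 1)
        if z > depth[row][col]:  # nearer point replaces whatever was drawn before
            depth[row][col] = z
            image[row][col] = color
    return image


if __name__ == "__main__":
    # Two points share the same (x, y); the one with the larger z occludes the other.
    cloud = [(0.0, 0.0, 0.0, 1), (0.0, 0.0, 1.0, 2), (1.0, 1.0, 0.0, 3)]
    for row in render_orthographic(cloud, size=2):
        print(row)
```

Running it prints `[None, 3]` and then `[2, None]`: the point with color 1 is hidden behind the point with color 2. A practical renderer would likely add perspective cameras, point splatting, and shading; the sketch keeps only the projection and occlusion steps, which are what make a single rendered view lose information.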

Why some 3D tasks are more vulnerable than others

While VLMs excelled at tasks like object recognition and basic scene description, they struggled on tasks that required a deeper understanding of spatial relationships, occlusions, and multi-view consistency.

- Simple 3D object benchmarks: Many object recognition tasks in 3D benchmarks could be solved easily using 2D images. The study found that VLMs could match or exceed 3D models in these cases, indicating that the benchmarks were not effectively testing 3D understanding.

- Complex scene benchmarks: Tasks requiring AI to understand full 3D environments, such as scene-level Q&A, navigation, and situational reasoning, were much harder for VLMs to cheat on. Here, 3D LLMs consistently performed better, showing that these tasks do rely on true 3D spatial reasoning.

This means that not all 3D benchmarks are flawed—but many of them are failing to distinguish between genuine 3D capability and 2D inference tricks.

When 2D models get stuck

One of the biggest weaknesses of VLMs on 3D tasks is viewpoint selection: a single 2D image captures only part of a 3D scene.

The researchers tested three different rendering approaches to evaluate how much this impacts performance:

- Single View: The simplest approach, where an object or scene is rendered from a fixed perspective. VLMs performed well here, since they could often extract enough information from just one image.

- Multi-View: Images were captured from four different angles, giving AI models more context. Surprisingly, VLMs did not improve significantly over the single-view setting, suggesting that they struggle to combine multiple perspectives into a unified 3D understanding.

- Oracle View: The AI was given the best possible viewpoint for answering each question. Here, VLMs performed much better, but still fell short of dedicated 3D LLMs, showing that even when given perfect images, they lack true 3D reasoning abilities.

This reinforces the idea that VLMs don’t “understand” 3D—they just do well when given the right 2D views.
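How the study generated its views and chose the oracle view is not described here. As a rough sketch, assume a simple scheme: rotate the cloud about its vertical axis, keep only the points that win a coarse depth buffer, and compare one view, four views 90° apart, and a hypothetical "oracle" that picks whichever candidate view shows the most points relevant to a question. The names `visible_indices`, `coverage`, and `oracle_view`, the grid-cell occlusion test, and the oracle criterion are all illustrative assumptions.

```python
import math
from typing import Sequence, Set, Tuple

Point = Tuple[float, float, float]


def rotate_y(p: Point, degrees: float) -> Point:
    """Rotate a point about the vertical (y) axis by the given azimuth."""
    t = math.radians(degrees)
    x, y, z = p
    return (x * math.cos(t) + z * math.sin(t), y, -x * math.sin(t) + z * math.cos(t))


def visible_indices(points: Sequence[Point], azimuth: float, cell: float = 1.0) -> Set[int]:
    """Indices of points visible from a camera looking down -z after rotation.

    Points whose projected (x, y) land in the same grid cell occlude each other;
    only the one with the largest z (nearest the camera) is kept.
    """
    nearest = {}  # grid cell -> (z, point index)
    for i, p in enumerate(points):
        x, y, z = rotate_y(p, azimuth)
        key = (round(x / cell), round(y / cell))  # round() absorbs tiny float error
        if key not in nearest or z > nearest[key][0]:
            nearest[key] = (z, i)
    return {i for _, i in nearest.values()}


def coverage(points: Sequence[Point], azimuths: Sequence[float]) -> float:
    """Fraction of points visible in at least one of the given views."""
    seen: Set[int] = set()
    for a in azimuths:
        seen |= visible_indices(points, a)
    return len(seen) / len(points)


def oracle_view(points: Sequence[Point], target: Set[int], candidates: Sequence[float]) -> float:
    """Hypothetical oracle: the first candidate azimuth showing the most target points."""
    return max(candidates, key=lambda a: len(visible_indices(points, a) & target))


if __name__ == "__main__":
    # Point 1 sits directly behind point 0 when seen from the default (azimuth 0) view.
    scene = [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (2.0, 0.0, 0.0)]
    four_views = [0, 90, 180, 270]
    print(coverage(scene, [0]))                  # single view
    print(coverage(scene, four_views))           # multi-view
    print(oracle_view(scene, {1}, four_views))   # best view for a question about point 1
```

In this toy scene the single view sees two of the three points, the four views together see all three, and the oracle picks azimuth 90, the first candidate in which point 1 is no longer hidden. The sketch only measures geometric coverage; the difficulty the study reports is that VLMs struggle to combine extra views, which this code does not model.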

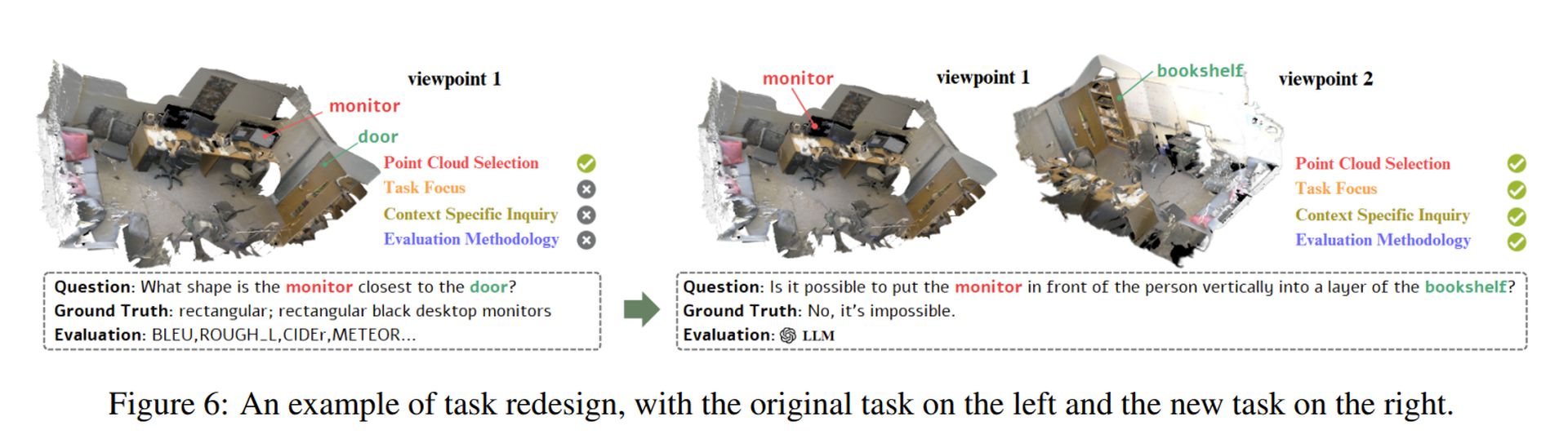

What needs to change

To truly evaluate 3D AI models, the study proposes four key principles for designing better benchmarks:

- More complex 3D data: Instead of using simple object point clouds, benchmarks should include detailed and realistic 3D scenes with structural complexity.

- Tasks that require real 3D reasoning: Rather than just recognizing objects, AI should be tested on tasks that require spatial understanding, such as predicting hidden surfaces or reasoning about object interactions.

- Context-aware challenges: Questions should be designed to focus on unique 3D properties, ensuring that they can’t be answered using just a single 2D image.

- Better evaluation metrics: Current benchmarks often rely on text similarity scores, which fail to capture true 3D understanding. Instead, AI models should be assessed based on their ability to infer depth, structure, and object relationships in three-dimensional space.
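The weakness of text-similarity scoring can be illustrated with a toy metric. The specific metrics these benchmarks use are not named here, so this sketch assumes unigram-overlap F1 as a simplified stand-in; `token_f1` and the example sentences are hypothetical. It shows how an answer with the wrong spatial relation can outscore a correct paraphrase.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Unigram-overlap F1 between two whitespace-tokenized, lowercased strings."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    overlap = sum((Counter(pred) & Counter(ref)).values())  # shared tokens, with counts
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    reference = "the chair is left of the table"
    wrong = "the chair is right of the table"              # opposite spatial relation
    paraphrase = "the table is to the right of the chair"  # same fact, reworded
    print(round(token_f1(wrong, reference), 3))
    print(round(token_f1(paraphrase, reference), 3))
```

The wrong answer scores about 0.857 (6/7), while the correct paraphrase scores 0.75, so a metric like this rewards surface wording rather than the spatial relation actually being described.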

The researchers demonstrated how these principles could be applied by redesigning a flawed task (see the image above). Their approach ensures that AI models must actually process 3D structures rather than relying on 2D shortcuts.

Featured image credit: Sebastian Svenson/Unsplash

All Rights Reserved. Copyright , Central Coast Communications, Inc.